Asynchronous Coagent Networks: Stochastic Networks for Reinforcement Learning without Backpropagation or a Clock

Paper and Code

Feb 21, 2019

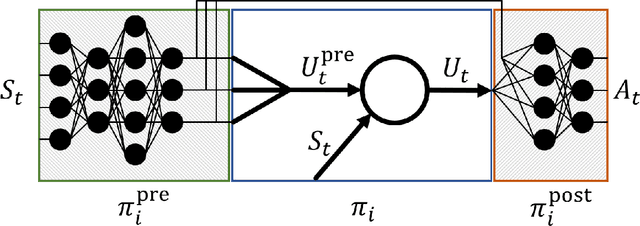

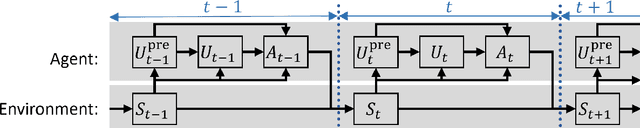

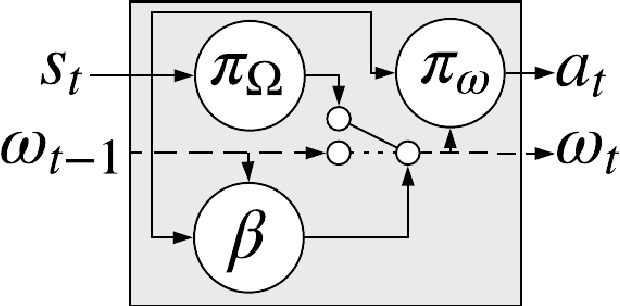

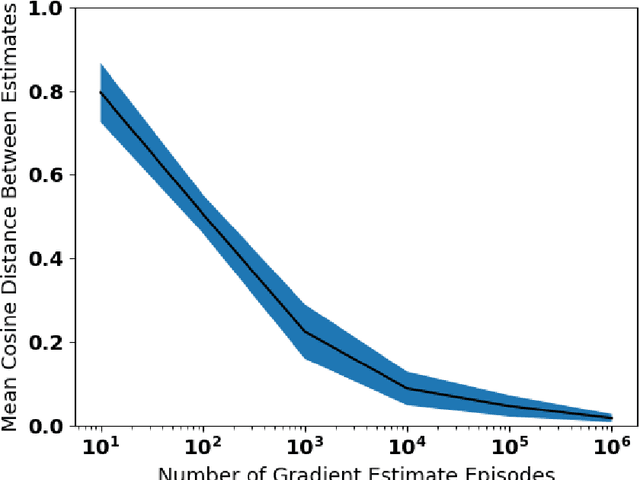

In this paper we introduce a reinforcement learning (RL) approach for training policies, including artificial neural network policies, that is both backpropagation-free and clock-free. It is backpropagation-free in that it does not propagate any information backwards through the network. It is clock-free in that no signal is given to each node in the network to specify when it should compute its output and when it should update its weights. We contend that these two properties increase the biological plausibility of our algorithms and facilitate distributed implementations. Additionally, our approach eliminates the need for customized learning rules for hierarchical RL algorithms like the option-critic.

* Removed LaTeX commands from metadata abstract. Corrected typo in

section 4. Changed Title

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge