Asymptotic Statistical Analysis of $f$-divergence GAN

Paper and Code

Sep 14, 2022

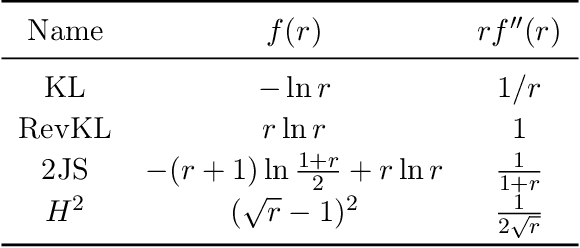

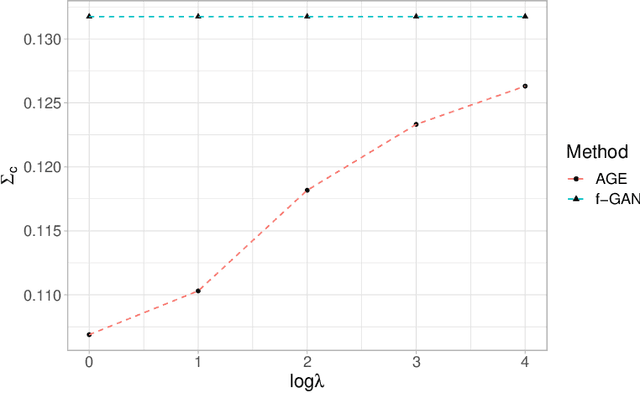

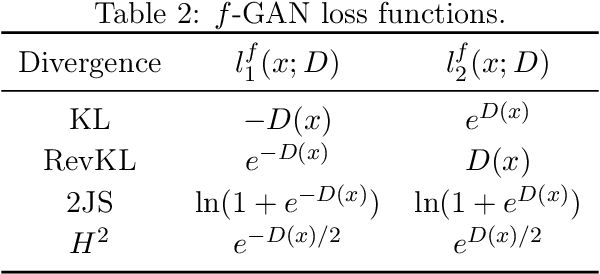

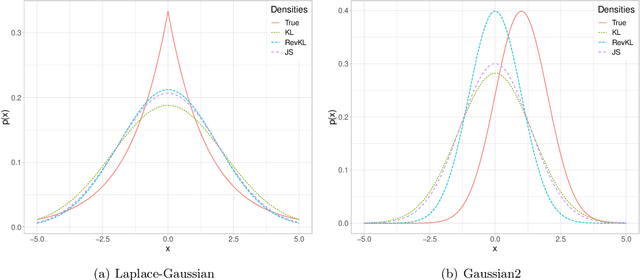

Generative Adversarial Networks (GANs) have achieved great success in data generation. However, its statistical properties are not fully understood. In this paper, we consider the statistical behavior of the general $f$-divergence formulation of GAN, which includes the Kullback--Leibler divergence that is closely related to the maximum likelihood principle. We show that for parametric generative models that are correctly specified, all $f$-divergence GANs with the same discriminator classes are asymptotically equivalent under suitable regularity conditions. Moreover, with an appropriately chosen local discriminator, they become equivalent to the maximum likelihood estimate asymptotically. For generative models that are misspecified, GANs with different $f$-divergences {converge to different estimators}, and thus cannot be directly compared. However, it is shown that for some commonly used $f$-divergences, the original $f$-GAN is not optimal in that one can achieve a smaller asymptotic variance when the discriminator training in the original $f$-GAN formulation is replaced by logistic regression. The resulting estimation method is referred to as Adversarial Gradient Estimation (AGE). Empirical studies are provided to support the theory and to demonstrate the advantage of AGE over the original $f$-GANs under model misspecification.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge