Asking for Help: Failure Prediction in Behavioral Cloning through Value Approximation


Feb 08, 2023

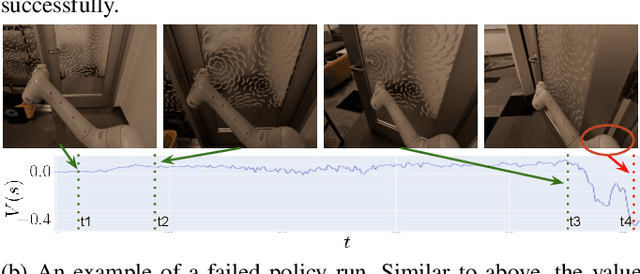

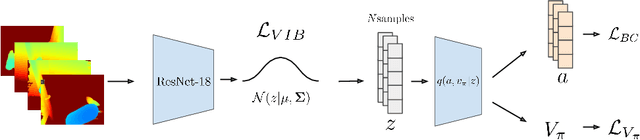

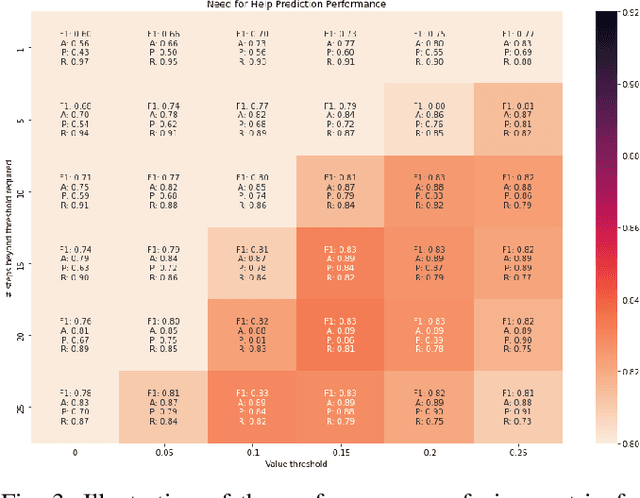

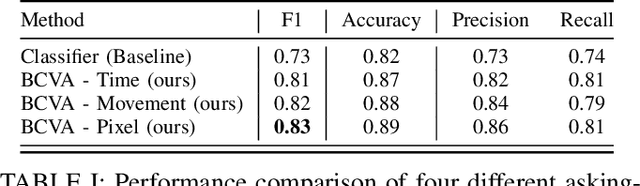

Recent progress in end-to-end Imitation Learning approaches has shown promising results and generalization capabilities on mobile manipulation tasks. Such models are seeing increasing deployment in real-world settings, where scaling up requires robots to operate with high autonomy, i.e., with as little human supervision as possible. To avoid the need for one-on-one human supervision, robots need to be able to detect and prevent policy failures ahead of time and ask for help, allowing a remote operator to supervise multiple robots and help when needed. However, the black-box nature of end-to-end Imitation Learning models such as Behavioral Cloning, as well as the lack of an explicit state-value representation, makes it difficult to predict failures. To this end, we introduce Behavioral Cloning Value Approximation (BCVA), an approach to learning a state value function that is based on, and trained jointly with, a Behavioral Cloning policy and that can be used to predict failures. We demonstrate the effectiveness of BCVA by applying it to the challenging mobile manipulation task of latched-door opening, showing that we can identify failure scenarios with 86% precision and 81% recall, evaluated on over 2000 real-world runs, improving upon the baseline of simple failure classification by 10 percentage points.
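The abstract does not say how a value estimate is turned into a failure prediction or how the reported precision and recall are computed. As a minimal sketch, assuming a fixed threshold on a scalar value estimate per run, the evaluation could look like the following. The function names, the threshold, and the toy numbers are all hypothetical and are not taken from the paper.

```python
from typing import List, Sequence, Tuple


def flag_failures(values: Sequence[float], threshold: float) -> List[bool]:
    """Predict failure for every run whose value estimate is below the threshold.

    Assumption: a low state value means the policy is unlikely to succeed.
    """
    return [v < threshold for v in values]


def precision_recall(predicted: Sequence[bool],
                     actual: Sequence[bool]) -> Tuple[float, float]:
    """Compute precision and recall, treating 'failure' as the positive class."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)        # failures correctly flagged
    fp = sum(1 for p, a in pairs if p and not a)    # successes wrongly flagged
    fn = sum(1 for p, a in pairs if a and not p)    # failures that were missed
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Toy data (hypothetical): one value estimate and one outcome per run.
values = [0.9, 0.2, 0.7, 0.1, 0.4, 0.8]
failed = [False, True, False, True, False, True]

predicted = flag_failures(values, threshold=0.5)
precision, recall = precision_recall(predicted, failed)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

In a sketch like this, lowering the threshold flags fewer runs, which tends to raise precision at the cost of recall; in a deployment where a remote operator supervises several robots, that trade-off determines how often the operator is asked for help.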
