Arithmetic addition of two integers by deep image classification networks: experiments to quantify their autonomous reasoning ability

Dec 10, 2019

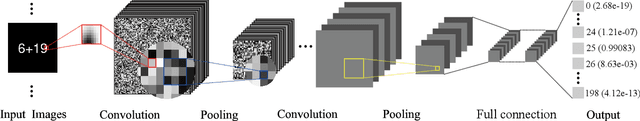

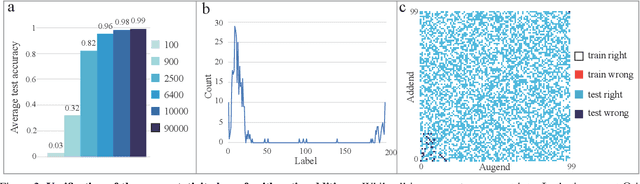

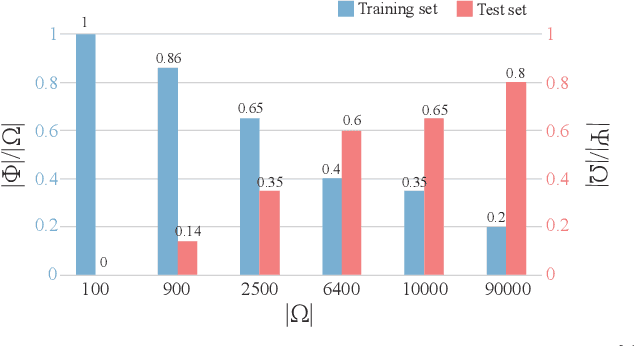

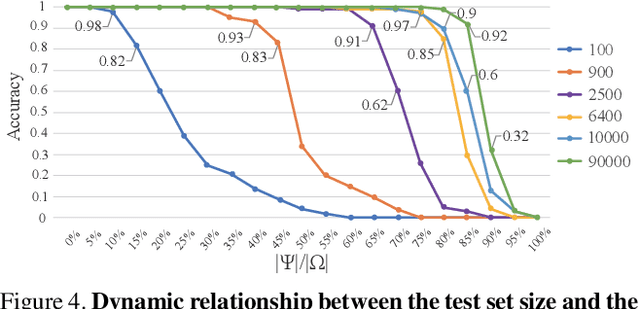

The unprecedented performance achieved by deep convolutional neural networks for image classification is attributed primarily to their ability to capture rich structural features at various layers of the network. Here we design a series of experiments, inspired by how children learn to add two integers, to show that such deep networks can go beyond structural features and learn deeper knowledge. In our experiments, a set of images is constructed, each containing an arithmetic addition $n+m$ in its central area, and several classification networks are then trained on a subset of the images, using the sum as the label. Tests on the excluded images show that, as the image set gets larger, the networks learn the law of arithmetic addition well and develop a strong autonomous reasoning ability. For instance, networks trained on a small percentage of the images can classify a large majority of the remaining images correctly, and many additions involving integers never seen during training are also solved correctly by the trained networks.
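The data construction described in the abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' code: the operand range (`max_int`), the training fraction, the random seed, and both function names are assumptions chosen for the example. Rendering each pair as an image of "n+m" is left out, so only the labels and the train/test split are shown.

```python
import random


def build_addition_split(max_int=99, train_fraction=0.2, seed=0):
    """Enumerate all pairs (n, m), label each by n + m, and split at random.

    max_int, train_fraction and seed are illustrative assumptions; the
    abstract does not state the values used in the experiments.
    """
    pairs = [(n, m) for n in range(max_int + 1) for m in range(max_int + 1)]
    rng = random.Random(seed)
    rng.shuffle(pairs)
    n_train = int(len(pairs) * train_fraction)
    # Each example is (n, m, label); the label is the class the network predicts.
    train = [(n, m, n + m) for n, m in pairs[:n_train]]
    test = [(n, m, n + m) for n, m in pairs[n_train:]]
    return train, test


def unseen_operand_examples(train, test):
    """Return test examples in which at least one operand never occurs in training."""
    seen = {x for n, m, _ in train for x in (n, m)}
    return [(n, m, s) for n, m, s in test if n not in seen or m not in seen]


# Small demonstration with single-digit operands.
train, test = build_addition_split(max_int=9, train_fraction=0.2)
held_out = unseen_operand_examples(train, test)
print(len(train), len(test), len(held_out))
```

With a purely random split, few or no operands are likely to be absent from training. Reproducing the "never seen" setting would probably require excluding every pair that contains chosen integers from the training subset, which is also an assumption about how such a test could be set up.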
