ARIANN: Low-Interaction Privacy-Preserving Deep Learning via Function Secret Sharing

Paper and Code

Jun 08, 2020

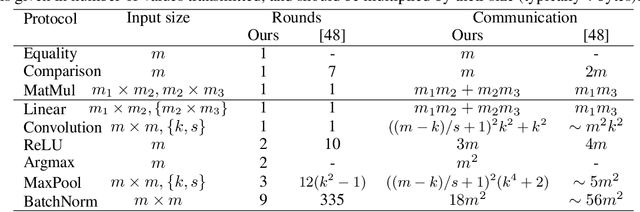

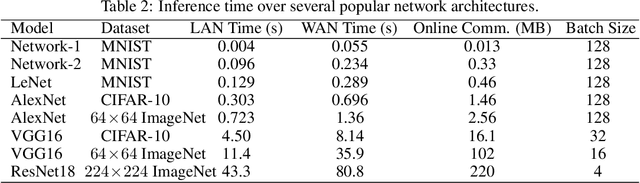

We propose ARIANN, a low-interaction framework for private training and inference of standard deep neural networks on sensitive data. The framework implements semi-honest 2-party computation and leverages function secret sharing, a recent cryptographic protocol that uses only lightweight primitives to achieve an efficient online phase, with a single message of the size of the inputs, for operations such as comparison and multiplication, which are building blocks of neural networks. Built on top of PyTorch, it offers a wide range of functions, including ReLU, MaxPool, and BatchNorm, and supports models such as AlexNet and ResNet18. We report experimental results for inference and training over distant servers. Finally, we propose an extension to support n-party private federated learning.
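To make the "single message of the size of the inputs" idea concrete, here is a minimal toy sketch of a masked comparison between two parties. It is not ARIANN's implementation or API: all names (`share`, `preprocess`, `online_compare`, `private_is_non_negative`) are hypothetical, the ring is only 8 bits wide, and the keys are naive additive shares of a full truth table. Real function secret sharing replaces that table with compact keys derived from a pseudorandom generator; the sketch only illustrates the shape of the online phase, assuming a trusted dealer for preprocessing.

```python
import secrets

N_BITS = 8            # toy ring size; an assumption for readability
MOD = 1 << N_BITS     # values live in Z_{2^8}, read as signed integers


def share(value):
    """Split a value into two additive shares modulo MOD."""
    s0 = secrets.randbelow(MOD)
    return s0, (value - s0) % MOD


def reconstruct(s0, s1):
    """Recombine two additive shares."""
    return (s0 + s1) % MOD


def is_non_negative(v):
    """1 if v, read as a signed N_BITS integer, is >= 0, else 0."""
    return 1 if v % MOD < MOD // 2 else 0


def preprocess():
    """Offline phase (trusted dealer assumed): a random mask r and two keys.

    The keys are additive shares of the truth table of y -> [y - r >= 0].
    Real FSS keys are compact; this table-based version is a stand-in.
    """
    r = secrets.randbelow(MOD)
    r0, r1 = share(r)
    table = [is_non_negative(y - r) for y in range(MOD)]
    key0 = [secrets.randbelow(MOD) for _ in range(MOD)]
    key1 = [(t - k) % MOD for t, k in zip(table, key0)]
    return (r0, key0), (r1, key1)


def online_compare(x0, x1, pre0, pre1):
    """Online phase: each party sends one masked share, then evaluates locally."""
    (r0, key0), (r1, key1) = pre0, pre1
    m0 = (x0 + r0) % MOD          # message sent by party 0
    m1 = (x1 + r1) % MOD          # message sent by party 1
    masked = (m0 + m1) % MOD      # x + r is public; r hides x
    return key0[masked], key1[masked]   # additive shares of [x >= 0]


def private_is_non_negative(x):
    """Run the whole toy protocol in one process and open the result."""
    x0, x1 = share(x % MOD)
    pre0, pre1 = preprocess()
    b0, b1 = online_compare(x0, x1, pre0, pre1)
    return reconstruct(b0, b1)
```

In this sketch each party's only online message is its masked share, which is the same size as the input. A ReLU could then be obtained by multiplying the shared comparison bit with the shared input, which requires a further private multiplication step not shown here.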
