Are Outlier Detection Methods Resilient to Sampling?

Jul 31, 2019

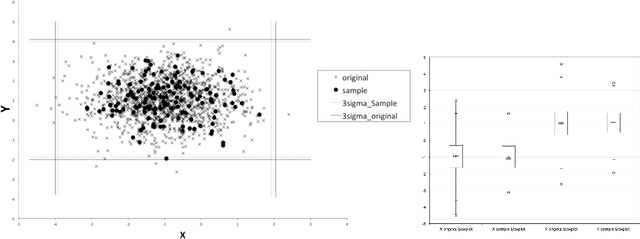

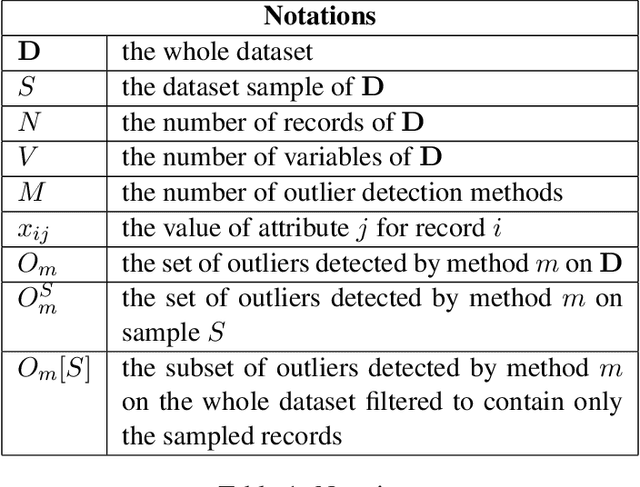

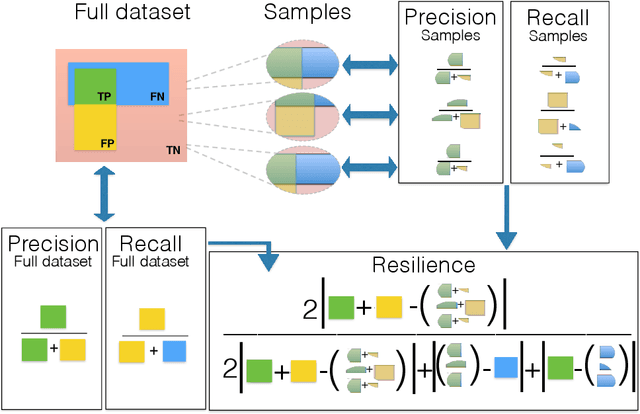

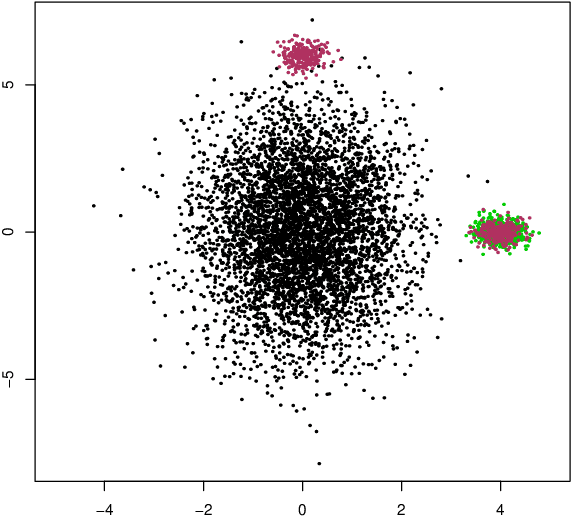

Outlier detection is a fundamental task in data mining and has many applications, including detecting errors in databases. While there has been extensive prior work on methods for outlier detection, modern datasets are often too large for commonly used methods to process within a reasonable time. To overcome this issue, outlier detection methods can be trained over samples of the full-sized dataset. However, it is not clear how a model trained on a sample compares with one trained on the entire dataset. In this paper, we introduce the notion of resilience to sampling for outlier detection methods. Orthogonal to traditional performance metrics such as precision and recall, resilience represents the extent to which the outliers detected by a method applied to samples drawn under a given sampling scheme match those detected when the method is applied to the whole dataset. We propose a novel approach for estimating the resilience to sampling of both individual outlier detection methods and their ensembles. We performed an extensive experimental study on synthetic and real-world datasets in which we studied seven diverse and representative outlier detection methods, compared results obtained from samples with those obtained from the whole datasets, and evaluated the accuracy of our resilience estimates. We observed that the methods are not equally resilient to a given sampling scheme, and that careful joint selection of the sampling scheme and the outlier detection method is often necessary. It is our hope that the paper initiates research on designing outlier detection algorithms that are resilient to sampling.
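To make the idea of comparing sample-trained and full-data results concrete, here is a minimal Python sketch. It is not the paper's estimator: the abstract does not give the formal definition of resilience, so the sketch assumes a Jaccard overlap between the two outlier sets, a toy z-score detector, and uniform sampling without replacement. The names `fit_zscore`, `detect`, `jaccard`, and `resilience` are hypothetical and chosen only for illustration.

```python
import random
import statistics


def fit_zscore(train):
    """Fit a toy detector: the mean and standard deviation of the training values."""
    return statistics.fmean(train), statistics.pstdev(train)


def detect(data, model, threshold=3.0):
    """Return the indices of points in `data` whose |z-score| exceeds `threshold`."""
    mean, std = model
    if std == 0:
        return set()
    return {i for i, x in enumerate(data) if abs(x - mean) / std > threshold}


def jaccard(a, b):
    """Jaccard overlap of two index sets (assumed stand-in for a resilience score)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def resilience(data, sample_size, trials=20, seed=0):
    """Average overlap between outliers found with a sample-trained model
    and outliers found with a model trained on the whole dataset."""
    rng = random.Random(seed)
    full_outliers = detect(data, fit_zscore(data))
    scores = []
    for _ in range(trials):
        # Assumed sampling scheme: uniform, without replacement.
        sample = rng.sample(data, sample_size)
        sampled_outliers = detect(data, fit_zscore(sample))
        scores.append(jaccard(full_outliers, sampled_outliers))
    return statistics.fmean(scores)


# Synthetic data: Gaussian noise plus three planted extreme values.
data_rng = random.Random(42)
data = [data_rng.gauss(0, 1) for _ in range(2000)] + [15.0, -12.0, 20.0]
score = resilience(data, sample_size=200)
print(f"estimated overlap at 10% sample: {score:.3f}")
```

A score near 1 means the sample-trained detector flags nearly the same points as the full-data detector; swapping in a different detector or sampling scheme changes only `fit_zscore`, `detect`, or the `rng.sample` line.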
