Are Accuracy and Robustness Correlated?


Dec 01, 2016

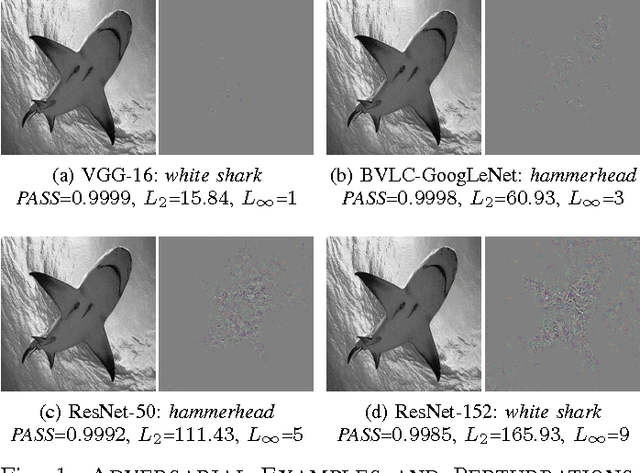

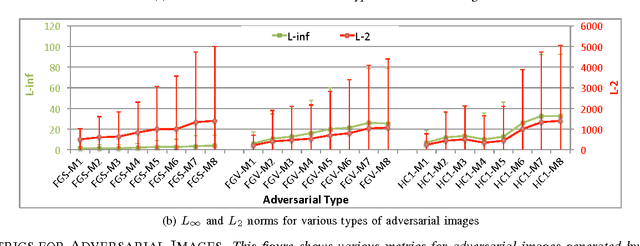

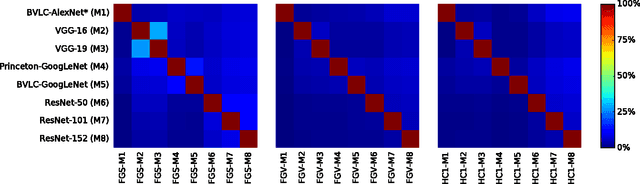

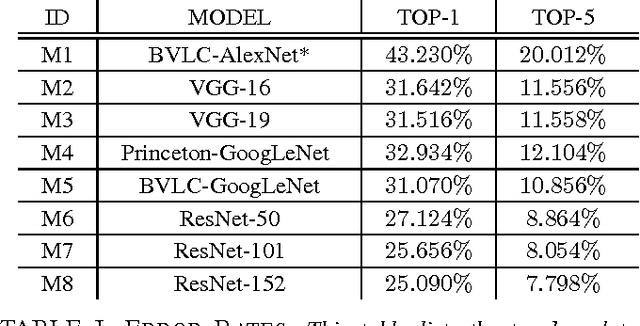

Machine learning models are vulnerable to adversarial examples: inputs formed by applying small, carefully chosen perturbations that cause unexpected classification errors. In this paper, we perform experiments on various adversarial example generation approaches with multiple deep convolutional neural networks, including Residual Networks, the best-performing models on the ImageNet Large-Scale Visual Recognition Challenge 2015. We compare the adversarial example generation techniques with respect to the quality of the produced images, and measure the robustness of the tested machine learning models to adversarial examples. Finally, we conduct large-scale experiments on cross-model adversarial portability. We find that adversarial examples are mostly transferable across similar network topologies, and we demonstrate that better machine learning models are less vulnerable to adversarial examples.
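To make the idea of a "small carefully chosen perturbation" concrete, here is a minimal sketch using a gradient-sign step on a toy logistic-regression classifier. The abstract does not name the generation approaches it evaluates, so the choice of the gradient-sign method, the model, the weights `w` and `b`, the input `x`, and the step size `eps` are all illustrative assumptions rather than the paper's setup.

```python
import math

def predict(w, b, x):
    """Probability of class 1 under a toy logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def gradient_sign_attack(w, b, x, y, eps):
    """Move each input feature by eps in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - y) * w.
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model and input, chosen only for illustration.
w = [2.0, -3.0, 1.0]
b = 0.1
x = [0.5, 0.2, 0.3]
y = 1  # true label

x_adv = gradient_sign_attack(w, b, x, y, eps=0.2)

print("clean prediction:      ", predict(w, b, x))
print("adversarial prediction:", predict(w, b, x_adv))
```

With these assumed values, the clean input is classified as class 1, while the perturbed input, which differs by at most 0.2 in any coordinate, falls on the other side of the 0.5 decision threshold. Deep convolutional networks are attacked in the same spirit, with the input gradient obtained by backpropagation instead of a closed form.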
