Applying the Information Bottleneck Principle to Prosodic Representation Learning


Aug 05, 2021

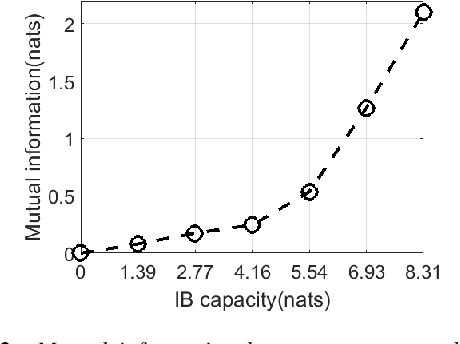

This paper describes a novel design of a neural network-based speech generation model for learning prosodic representations. The representation learning problem is formulated according to the information bottleneck (IB) principle. A modified VQ-VAE quantization layer is incorporated into the speech generation model to control the IB capacity and to adjust the balance between the reconstruction power and the disentanglement capability of the learned representation. The proposed model is able to learn word-level prosodic representations from speech data. With an optimized IB capacity, the learned representations are not only adequate to reconstruct the original speech but can also be used to transfer the prosody onto different textual content. Extensive objective and subjective evaluation results are presented to demonstrate the effect of IB capacity control, as well as the effectiveness and potential use of the learned prosodic representation in controllable neural speech generation.
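To illustrate how a vector-quantized layer can act as a capacity-limited bottleneck, here is a minimal NumPy sketch of a standard VQ-VAE nearest-neighbour lookup. It is not the paper's modified layer, whose details are not given in the abstract. The function names `quantize` and `capacity_bits`, the codebook size of 8, and the 4-dimensional embeddings are assumptions chosen for illustration only.

```python
import numpy as np

def quantize(z, codebook):
    """Standard VQ lookup: map each row of z to its nearest codebook vector.

    z:        (num_words, dim) continuous word-level embeddings (hypothetical)
    codebook: (codebook_size, dim) learned code vectors (random here)
    """
    # Squared Euclidean distances, shape (num_words, codebook_size)
    dists = ((z[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    idx = dists.argmin(axis=1)
    return codebook[idx], idx

def capacity_bits(codebook_size, codes_per_word=1):
    """Upper bound, in bits per word, on what the discrete bottleneck can carry."""
    return codes_per_word * float(np.log2(codebook_size))

# Illustrative values only: 8 codes of dimension 4, 5 word-level embeddings.
rng = np.random.default_rng(0)
codebook = rng.normal(size=(8, 4))
z = rng.normal(size=(5, 4))
z_q, idx = quantize(z, codebook)
bits = capacity_bits(codebook.shape[0])
```

In this simplified picture, each word's representation is replaced by one of `codebook_size` vectors, so it can carry at most `log2(codebook_size)` bits. Shrinking the codebook tightens the bottleneck, which favours disentanglement; enlarging it loosens the bottleneck, which favours reconstruction.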
