Analysis of Software Engineering for Agile Machine Learning Projects

Paper and Code

Dec 16, 2019

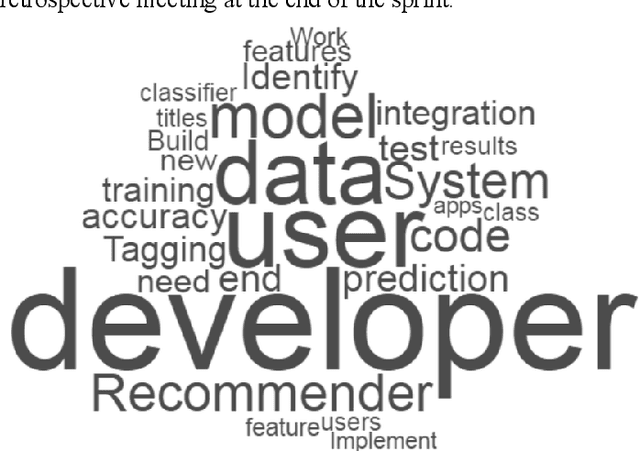

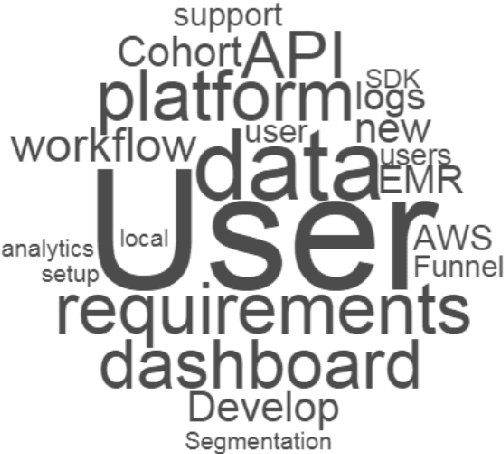

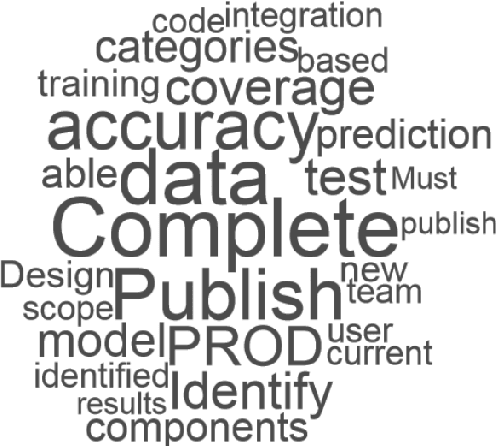

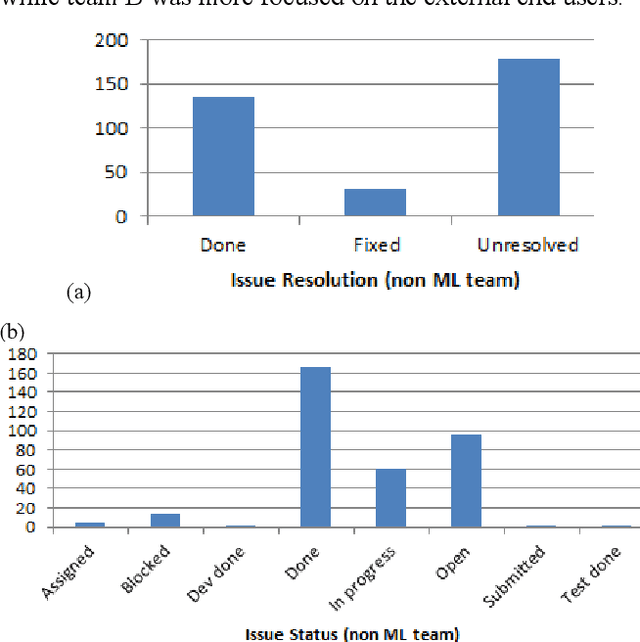

The number of machine learning, artificial intelligence or data science related software engineering projects using Agile methodology is increasing. However, there are very few studies on how such projects work in practice. In this paper, we analyze project issues tracking data taken from Scrum (a popular tool for Agile) for several machine learning projects. We compare this data with corresponding data from non-machine learning projects, in an attempt to analyze how machine learning projects are executed differently from normal software engineering projects. On analysis, we find that machine learning project issues use different kinds of words to describe issues, have higher number of exploratory or research oriented tasks as compared to implementation tasks, and have a higher number of issues in the product backlog after each sprint, denoting that it is more difficult to estimate the duration of machine learning project related tasks in advance. After analyzing this data, we propose a few ways in which Agile machine learning projects can be better logged and executed, given their differences with normal software engineering projects.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge