Analysis of Decentralized Stochastic Successive Convex Approximation for composite non-convex problems


May 11, 2024

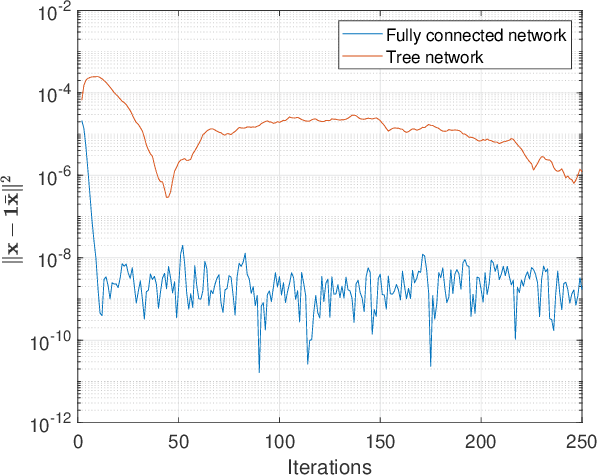

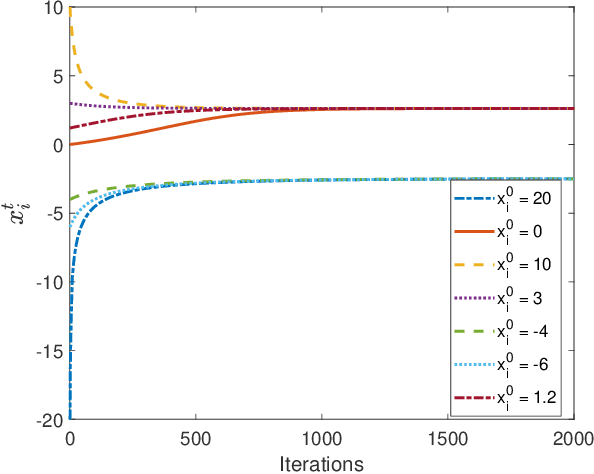

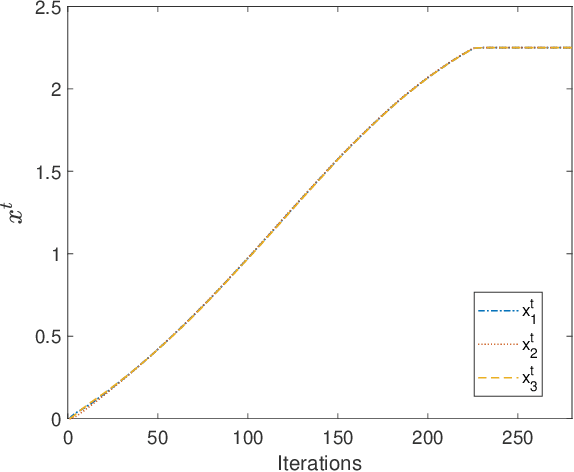

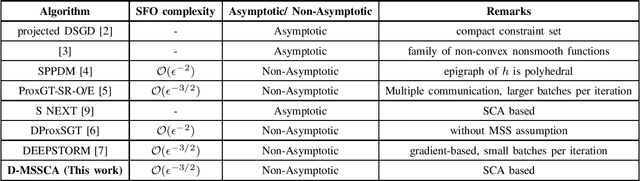

Successive convex approximation (SCA) methods have been shown to improve the empirical convergence of non-convex optimization problems over proximal gradient-based methods. In decentralized optimization, which aims to optimize a global function using only local information, the SCA framework has been successfully applied to achieve improved convergence. Still, the stochastic first-order (SFO) complexity of decentralized SCA algorithms remains understudied. While non-asymptotic convergence has been established for decentralized deterministic settings, the stochastic counterpart has only been shown to converge asymptotically. We analyze a novel accelerated variant of decentralized stochastic SCA that minimizes the sum of non-convex (possibly smooth) and convex (possibly non-smooth) cost functions. The algorithm, Decentralized Momentum-based Stochastic SCA (D-MSSCA), iteratively solves a series of strongly convex subproblems at each node using one sample per iteration. The key step in the non-asymptotic analysis is proving that the average output state vector moves in a descent direction of the global function. This descent allows us to bound the average *iterate progress* and the *mean-squared stationary gap*. The recursive momentum-based updates at each node yield an SFO complexity of $O(\epsilon^{-3/2})$, provided that the step sizes are smaller than the given upper bounds. Even with one sample per iteration and a non-adaptive step size, this rate is on par with the SFO complexity of state-of-the-art decentralized gradient-based algorithms. The rate also matches the lower bound for centralized, unconstrained optimization problems. A synthetic example demonstrates the applicability of D-MSSCA.
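To make the ingredients named in the abstract concrete, the following is a minimal sketch, not the paper's D-MSSCA. It combines a strongly convex surrogate subproblem at each node, one sample per iteration, a recursive momentum gradient estimate, and neighbor averaging. Everything specific here is an assumption chosen for illustration: the proximal-linear surrogate with an $\ell_1$ regularizer (which gives a closed-form soft-thresholding solution), the sigmoid least-squares local loss, the ring mixing matrix, and all function and parameter names. Any additional mechanisms the actual algorithm may use, such as gradient tracking, are omitted.

```python
import numpy as np

def soft_threshold(v, kappa):
    # Closed-form minimizer of 0.5*||z - v||^2 + kappa*||z||_1.
    return np.sign(v) * np.maximum(np.abs(v) - kappa, 0.0)

def ring_mixing_matrix(n):
    # Doubly stochastic weights: each node averages itself and its two ring neighbors.
    W = np.zeros((n, n))
    for i in range(n):
        W[i, i] = 1.0 / 3.0
        W[i, (i - 1) % n] += 1.0 / 3.0
        W[i, (i + 1) % n] += 1.0 / 3.0
    return W

def sample_grad(x, a, b):
    # Gradient of the non-convex sample loss (sigmoid(a.x) - b)^2 (assumed loss).
    p = 1.0 / (1.0 + np.exp(-a @ x))
    return 2.0 * (p - b) * p * (1.0 - p) * a

def decentralized_momentum_sca(A, b, W, lam=0.01, tau=1.0, alpha=0.1,
                               beta=0.1, iters=200, seed=0):
    # A: (nodes, samples, dim) features; b: (nodes, samples) targets.
    rng = np.random.default_rng(seed)
    n, m, dim = A.shape
    x = np.zeros((n, dim))
    idx = rng.integers(m, size=n)  # one sample per node
    d = np.stack([sample_grad(x[i], A[i, idx[i]], b[i, idx[i]]) for i in range(n)])
    for _ in range(iters):
        # Strongly convex surrogate at each node:
        #   min_z <d_i, z - x_i> + (tau/2)||z - x_i||^2 + lam*||z||_1
        z = soft_threshold(x - d / tau, lam / tau)
        # Step toward the surrogate solution, then average with neighbors.
        x_new = W @ (x + alpha * (z - x))
        # Recursive momentum: evaluate the same fresh sample at the new and old iterates.
        idx = rng.integers(m, size=n)
        for i in range(n):
            a_i, b_i = A[i, idx[i]], b[i, idx[i]]
            g_new = sample_grad(x_new[i], a_i, b_i)
            g_old = sample_grad(x[i], a_i, b_i)
            d[i] = g_new + (1.0 - beta) * (d[i] - g_old)
        x = x_new
    return x
```

In this sketch `alpha` is the step size toward the surrogate solution and `beta` the momentum parameter. Both are fixed (non-adaptive), and their values are arbitrary placeholders rather than the bounds derived in the paper.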
