An online learning approach to dynamic pricing and capacity sizing in service systems

Paper and Code

Sep 07, 2020

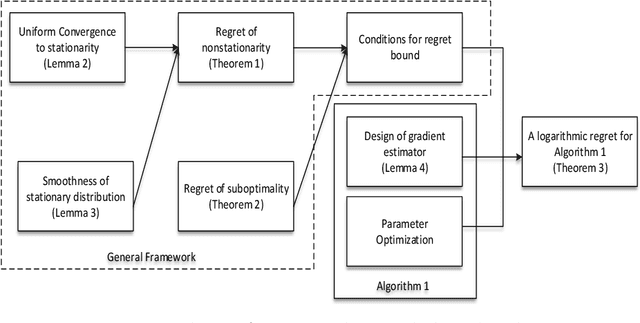

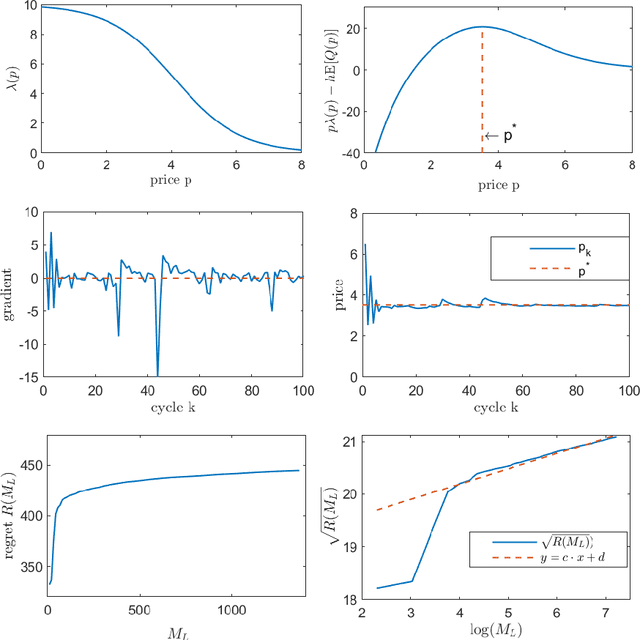

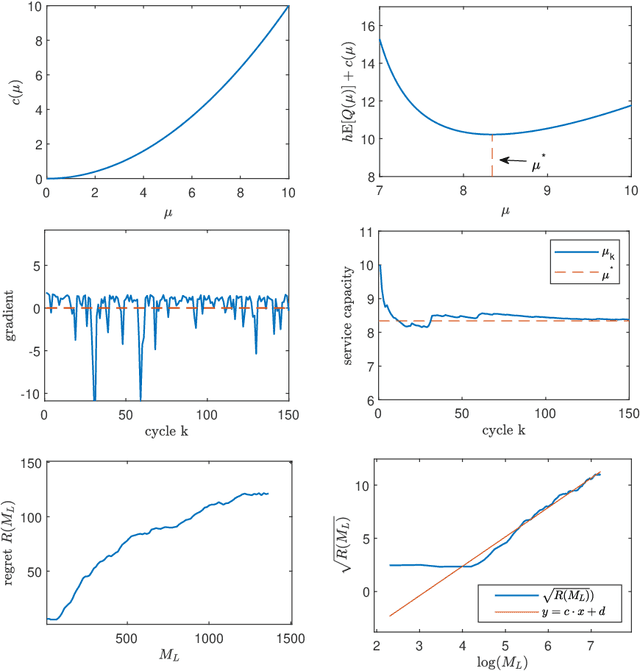

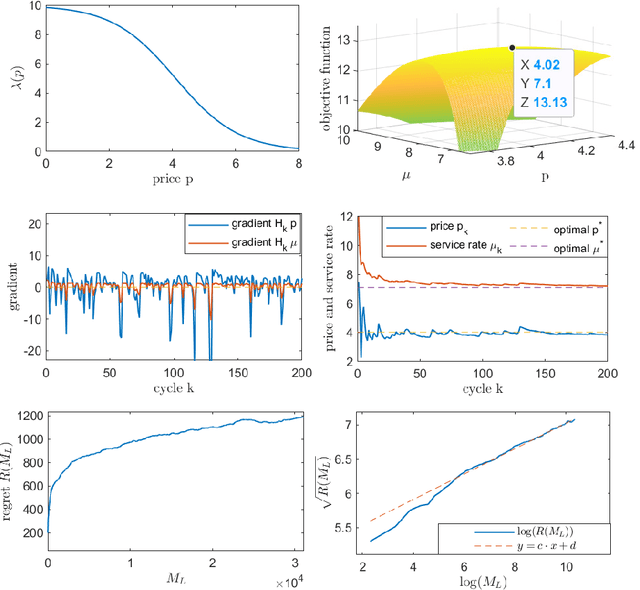

We study a dynamic pricing and capacity sizing problem in a GI/GI/1 queue, where the service provider's objective is to obtain the optimal service fee $p$ and service capacity $\mu$ so as to maximize cumulative expected profit (the service revenue minus the staffing cost and delay penalty). Due to the complex nature of the queueing dynamics, such a problem has no analytic solution, so previous research often resorts to heavy-traffic analysis, in which both the arrival rate and the service rate are sent to infinity. In this work we propose an online learning framework for solving this problem that does not require the system's scale to increase. Our algorithm organizes the time horizon into successive operational cycles and prescribes an efficient procedure to obtain improved pricing and staffing policies in each cycle using data collected in previous cycles. Data here include the number of customer arrivals, waiting times, and the server's busy times. The ingenuity of this approach lies in its online nature, which allows the service provider to do better by interacting with the environment. The effectiveness of our online learning algorithm is substantiated by (i) theoretical results, including algorithm convergence and regret analysis (with a logarithmic regret bound), and (ii) engineering confirmation via simulation experiments on a variety of representative GI/GI/1 queues.
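To make the cycle-based idea concrete, here is a minimal Python sketch of learning a price and a capacity from per-cycle data. It is an illustration under stated assumptions, not the authors' algorithm: it uses an M/M/1 queue (a special case of GI/GI/1), a hypothetical demand curve `demand_rate`, linear staffing and delay costs with unit coefficients, and a plain finite-difference gradient step in place of whatever gradient estimator the paper derives. All function names, bounds, and step-size choices are assumptions made for this example.

```python
import math
import random

# Hypothetical feasible box; chosen so that mu always exceeds the arrival rate.
P_BOUNDS = (1.0, 4.0)
MU_BOUNDS = (5.0, 12.0)


def demand_rate(price):
    """Assumed demand curve: arrival rate decreases with the service fee."""
    return 10.0 * math.exp(-price)


def cycle_profit(price, mu, n_customers, seed):
    """Simulate one operational cycle and return an estimated profit rate.

    Waiting times follow the Lindley recursion for a single-server queue.
    Profit rate = revenue - staffing cost - delay penalty, with unit cost
    coefficients (an assumption of this sketch).
    """
    rng = random.Random(seed)
    lam = demand_rate(price)
    wait = 0.0
    total_sojourn = 0.0
    total_time = 0.0
    for _ in range(n_customers):
        service = rng.expovariate(mu)
        total_sojourn += wait + service
        inter_arrival = rng.expovariate(lam)
        total_time += inter_arrival
        # Lindley recursion: waiting time of the next customer.
        wait = max(0.0, wait + service - inter_arrival)
    arrival_rate_hat = n_customers / total_time
    mean_sojourn = total_sojourn / n_customers
    # Little's law: mean number in system = arrival rate * mean sojourn time.
    mean_in_system = arrival_rate_hat * mean_sojourn
    return price * arrival_rate_hat - mu - mean_in_system


def clip(value, low, high):
    return max(low, min(high, value))


def learn(n_cycles=30, price=3.5, mu=11.0, delta=0.05, seed=0):
    """Update (price, mu) once per cycle by projected gradient ascent."""
    for k in range(1, n_cycles + 1):
        n = 200 * k      # longer cycles over time reduce estimation noise
        step = 0.5 / k   # decreasing step size
        s = seed + k     # same seed for paired runs (common random numbers)
        grad_p = (cycle_profit(price + delta, mu, n, s)
                  - cycle_profit(price - delta, mu, n, s)) / (2 * delta)
        grad_mu = (cycle_profit(price, mu + delta, n, s)
                   - cycle_profit(price, mu - delta, n, s)) / (2 * delta)
        # Project back onto the feasible box after each update.
        price = clip(price + step * grad_p, *P_BOUNDS)
        mu = clip(mu + step * grad_mu, *MU_BOUNDS)
    return price, mu
```

Calling `learn()` returns the final `(price, mu)` pair. The growing cycle length and shrinking step size are common choices in stochastic approximation; the schedules used here are arbitrary and are not taken from the paper.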
