An Inertial Newton Algorithm for Deep Learning


May 29, 2019

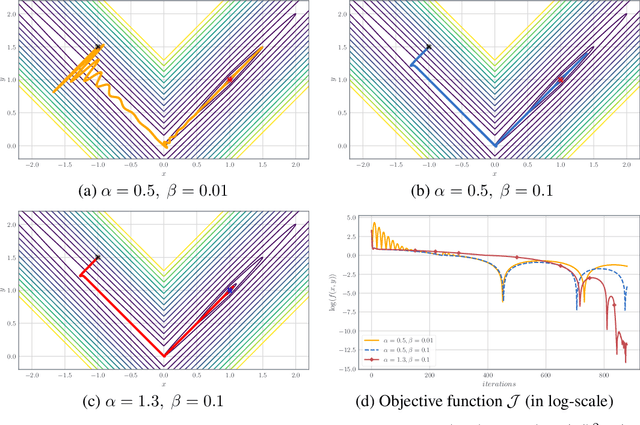

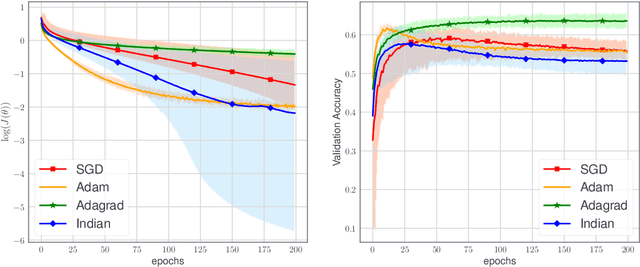

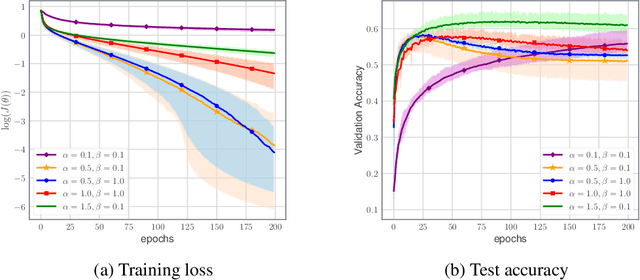

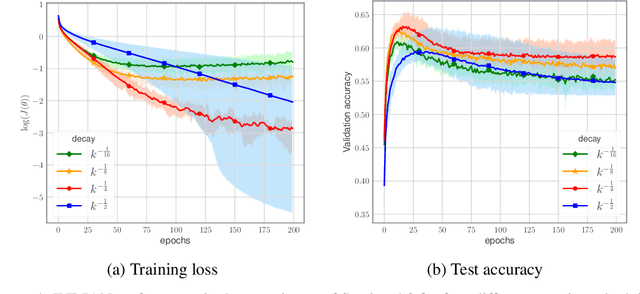

We devise a learning algorithm for possibly nonsmooth deep neural networks that features inertia and Newtonian directional intelligence while relying only on a back-propagation oracle. The algorithm, called INDIAN, has an appealing mechanical interpretation that makes the role of its two hyperparameters transparent. An elementary phase space lifting allows both its implementation and its theoretical study under very general assumptions. In particular, we handle a stochastic version of the method (which encompasses the usual mini-batch approaches) for nonsmooth activation functions (such as ReLU). The algorithm shows high efficiency and reaches state-of-the-art performance on image classification problems.
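The abstract does not state the update rule, so the following is only a minimal sketch of how a phase space lifting can give an inertial, Newton-like method that needs nothing beyond gradients. It assumes the underlying dynamics are the second-order system θ'' + αθ' + β∇²J(θ)θ' + ∇J(θ) = 0, with viscous damping α and Hessian-driven damping β playing the role of the two hyperparameters, rewritten as a first-order system in (θ, ψ) and discretized with an explicit Euler step. The function names, the step size `gamma`, the initialization of `psi`, and the quadratic test loss are all illustrative assumptions, not taken from the paper.

```python
import numpy as np


def indian_step(theta, psi, grad, alpha, beta, gamma):
    """One explicit Euler step of the assumed lifted first-order system.

    theta: parameters, psi: auxiliary phase-space variable,
    grad: a (possibly mini-batch) gradient of the loss at theta.
    No Hessian is evaluated; second-order information enters only
    through the coupling between theta and psi.
    """
    common = (1.0 / beta - alpha) * theta - (1.0 / beta) * psi
    theta_next = theta + gamma * (common - beta * grad)
    psi_next = psi + gamma * common
    return theta_next, psi_next


def run_indian(grad_fn, theta0, alpha=0.5, beta=0.1, gamma=0.01, steps=5000):
    """Iterate indian_step from theta0 and return the final parameters."""
    theta = np.asarray(theta0, dtype=float)
    # Assumed initialization: choose psi so the coupling term vanishes at
    # the start, which makes the first move a plain gradient step.
    psi = (1.0 - alpha * beta) * theta
    for _ in range(steps):
        theta, psi = indian_step(theta, psi, grad_fn(theta), alpha, beta, gamma)
    return theta


# Illustrative loss J(theta) = 0.5 * sum(h * theta**2), minimized at 0.
h = np.array([1.0, 10.0])
theta_final = run_indian(lambda th: h * th, theta0=[1.0, -2.0])
```

In a stochastic setting, `grad_fn` would return a mini-batch gradient obtained by back-propagation, and `gamma` would typically follow a decreasing schedule rather than stay constant as it does in this sketch.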
