An adaptive transport framework for joint and conditional density estimation


Sep 22, 2020

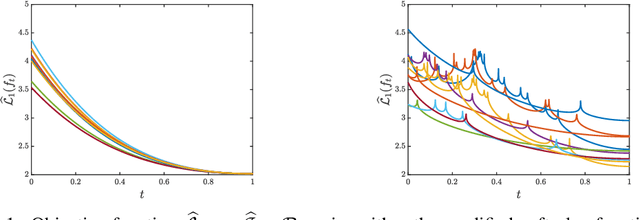

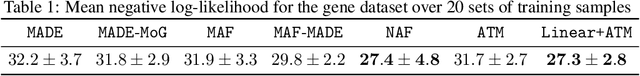

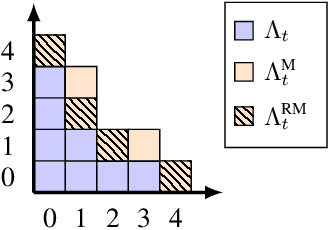

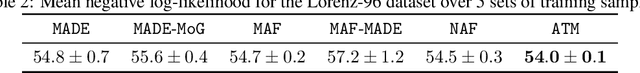

We propose a general framework to robustly characterize joint and conditional probability distributions via transport maps. Transport maps or "flows" deterministically couple two distributions via an expressive monotone transformation. Yet, learning the parameters of such transformations in high dimensions is challenging given few samples from the unknown target distribution, and structural choices for these transformations can have a significant impact on performance. Here we formulate a systematic framework for representing and learning monotone maps, via invertible transformations of smooth functions, and demonstrate that the associated minimization problem has a unique global optimum. Given a hierarchical basis for the appropriate function space, we propose a sample-efficient adaptive algorithm that estimates a sparse approximation for the map. We demonstrate how this framework can learn densities with stable generalization performance across a wide range of sample sizes on real-world datasets.
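The idea of building a monotone map from an invertible transformation of a smooth function can be illustrated with a minimal one-dimensional sketch. The abstract does not specify the construction, so everything here is an assumption made for illustration: the smooth function `f` is a polynomial, its derivative is passed through a softplus rectifier and integrated with Gauss-Legendre quadrature, and the reference distribution is a standard normal. The names `monotone_map` and `log_density` are hypothetical and are not taken from the authors' code.

```python
import numpy as np

def softplus(z):
    # Stable log(1 + exp(z)); strictly positive for every real z.
    return np.logaddexp(0.0, z)

# Gauss-Legendre nodes and weights on [-1, 1] (20 points is an arbitrary choice).
NODES, WEIGHTS = np.polynomial.legendre.leggauss(20)

def monotone_map(x, coeffs):
    """Assumed form: S(x) = f(0) + integral_0^x softplus(f'(t)) dt.

    f is a polynomial with coefficients `coeffs` (lowest degree first).
    S is strictly increasing because the integrand is strictly positive,
    even when f itself is not monotone.
    """
    x = np.asarray(x, dtype=float)
    f = np.polynomial.Polynomial(coeffs)
    df = f.deriv()
    # Rescale the quadrature nodes from [-1, 1] to [0, x] for each x.
    t = 0.5 * x[..., None] * (NODES + 1.0)
    integral = 0.5 * x * np.sum(WEIGHTS * softplus(df(t)), axis=-1)
    return f(0.0) + integral

def log_density(x, coeffs):
    """Log of the density obtained by pulling a standard normal back through S.

    Change of variables: log p(x) = log N(S(x); 0, 1) + log S'(x),
    where S'(x) = softplus(f'(x)) for the assumed form above.
    """
    x = np.asarray(x, dtype=float)
    df = np.polynomial.Polynomial(coeffs).deriv()
    s = monotone_map(x, coeffs)
    log_ref = -0.5 * s**2 - 0.5 * np.log(2.0 * np.pi)
    return log_ref + np.log(softplus(df(x)))

# Example: f is not monotone, yet S is strictly increasing.
coeffs = [0.3, -1.0, 0.5, 0.2]
xs = np.linspace(-3.0, 3.0, 201)
print(np.all(np.diff(monotone_map(xs, coeffs)) > 0))
```

In this sketch the coefficients are unconstrained, so they could in principle be fitted by maximizing the average of `log_density` over samples. An adaptive scheme of the kind the abstract describes would additionally choose which basis terms to include, which this sketch does not attempt.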
