An Adaptive Clipping Approach for Proximal Policy Optimization


Apr 17, 2018

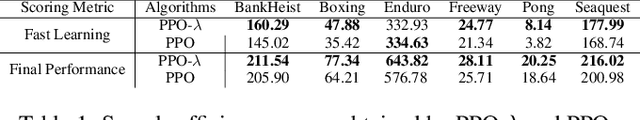

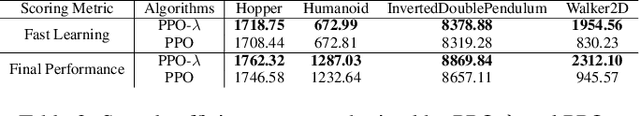

Proximal policy optimization (PPO) algorithms were recently proposed as first-order optimization methods for effective reinforcement learning. Although PPO is inspired by the same learning theory that justifies trust region policy optimization (TRPO), it substantially simplifies algorithm design and improves data efficiency by performing multiple epochs of *clipped policy optimization* on sampled data. Clipping in PPO is an important new mechanism for efficient and reliable policy updates, but it may fail to adaptively improve learning performance in accordance with the importance of each sampled state. To address this issue, this paper proposes a new surrogate learning objective featuring an adaptive clipping mechanism, which leads to a new algorithm known as PPO-$\lambda$. PPO-$\lambda$ optimizes policies repeatedly based on a theoretical target for adaptive policy improvement. Meanwhile, destructively large policy updates are effectively prevented through both clipping and adaptive control of the hyperparameter $\lambda$, ensuring high learning reliability. PPO-$\lambda$ enjoys the same simple and efficient design as PPO. Empirically, on several Atari game-playing tasks and benchmark control tasks, PPO-$\lambda$ also achieved clearly better performance than PPO.
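For readers unfamiliar with the clipping mechanism mentioned in the abstract, the following is a minimal sketch of the standard PPO clipped surrogate objective, not of PPO-$\lambda$ itself, whose adaptive objective is not specified in the abstract. The function name `ppo_clipped_surrogate`, its arguments, and the default `epsilon=0.2` are illustrative assumptions rather than anything taken from the paper or its code.

```python
import math


def ppo_clipped_surrogate(logp_new, logp_old, advantages, epsilon=0.2):
    """Mean clipped surrogate objective of standard PPO (to be maximized).

    logp_new / logp_old: log-probabilities of the sampled actions under the
    current and the data-collecting policy (hypothetical inputs).
    advantages: advantage estimates for the same samples.
    epsilon: fixed clipping range; this sketch does not adapt it per state.
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        # Probability ratio between the new and the old policy.
        ratio = math.exp(lp_new - lp_old)
        # Restrict the ratio to [1 - epsilon, 1 + epsilon].
        clipped = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
        # Pessimistic choice: take the smaller of the two surrogate terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

Because the clipping range here is the same for every sample, the objective treats all sampled states alike, which is the limitation the abstract says the adaptive mechanism is meant to address.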
