AlphaNet: An Attention Guided Deep Network for Automatic Image Matting

Paper and Code

Mar 07, 2020

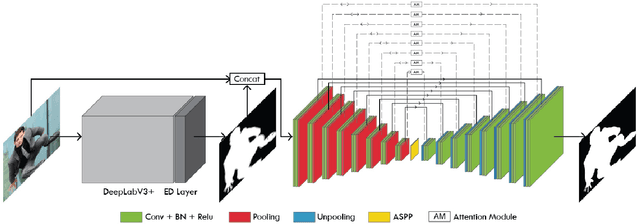

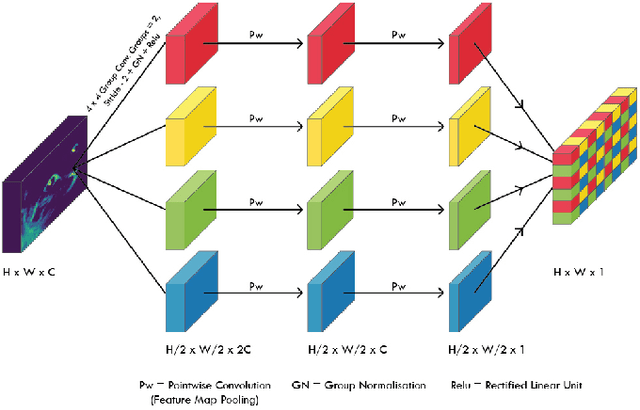

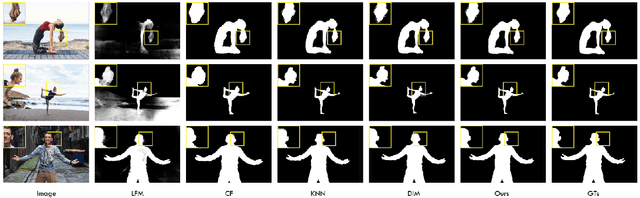

In this paper, we propose an end-to-end solution for image matting, i.e., high-precision extraction of foreground objects from natural images. Image matting and background detection can be achieved easily through chroma keying in a studio setting when the background is either pure green or blue. Nonetheless, image matting in natural scenes with complex backgrounds of uneven depth remains a tedious task that requires human intervention. To achieve fully automatic foreground extraction in natural scenes, we propose a method that assimilates semantic segmentation and deep image matting into a single network to generate detailed semantic mattes for the image composition task. The contribution of our proposed method is two-fold: first, it can be interpreted as a fully automated semantic image matting method, and second, as a refinement of existing semantic segmentation models. We propose a novel model architecture that combines segmentation and matting and unifies the function of upsampling and downsampling operators with the notion of attention. As shown in our work, attention-guided downsampling and upsampling can extract high-quality boundary details, unlike conventional downsampling and upsampling techniques. To achieve this, we use an attention-guided encoder-decoder framework that learns, without supervision, to generate an attention map adaptively from the data to guide the upsampling and downsampling operators. We also construct a fashion e-commerce focused dataset with high-quality alpha mattes to facilitate training and evaluation for image matting.
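For readers new to matting, the role of the alpha matte in the image composition task can be illustrated with the standard compositing relation, I = αF + (1 − α)B, where F is the foreground, B the new background, and α the per-pixel opacity. The abstract does not state this equation, so the sketch below is an assumption about the usual setup and not the authors' implementation; the function name `composite` and the toy arrays are hypothetical.

```python
import numpy as np

def composite(foreground, background, alpha):
    """Blend a foreground over a background with a per-pixel alpha matte.

    foreground, background: float arrays of shape (H, W, 3), values in [0, 1]
    alpha: float array of shape (H, W), values in [0, 1]
    (Shapes and value ranges are assumptions made for this illustration.)
    """
    a = alpha[..., np.newaxis]  # add a channel axis so alpha broadcasts over RGB
    return a * foreground + (1.0 - a) * background

# Toy 2x2 example: white foreground, black background, varying opacity.
fg = np.ones((2, 2, 3))
bg = np.zeros((2, 2, 3))
alpha = np.array([[1.0, 0.5],
                  [0.25, 0.0]])
out = composite(fg, bg, alpha)
```

Fractional alpha values, such as those along hair or fabric edges, are what distinguish a matte from a binary segmentation mask, which is why the abstract frames matting as a refinement of segmentation.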
