Alpha Entropy Search for New Information-based Bayesian Optimization

Nov 25, 2024

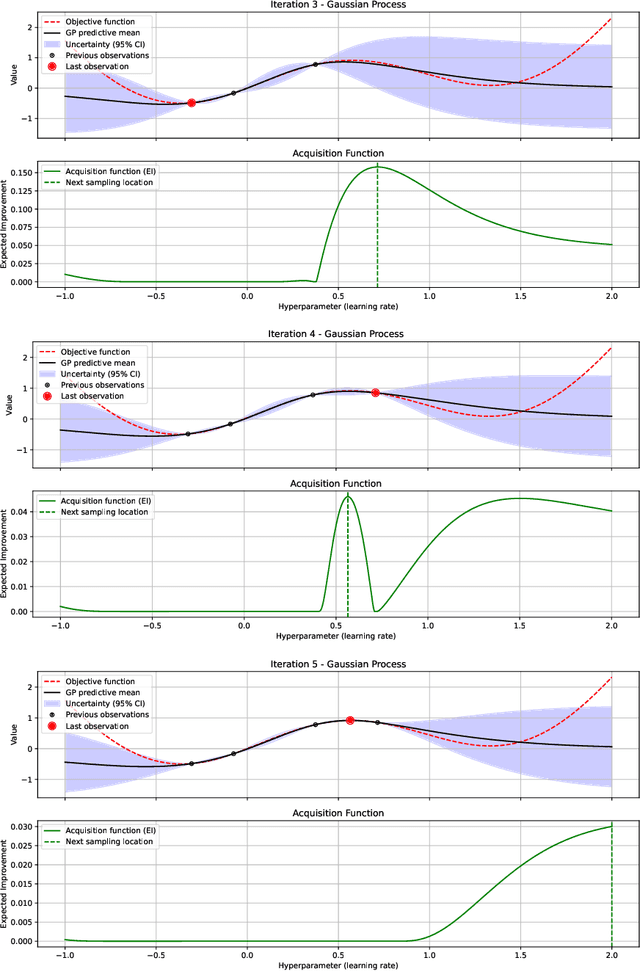

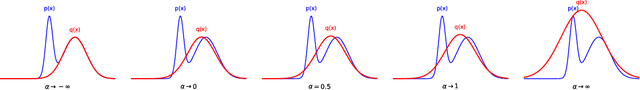

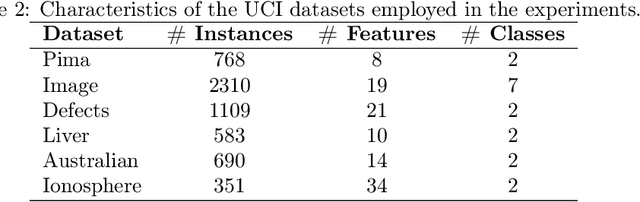

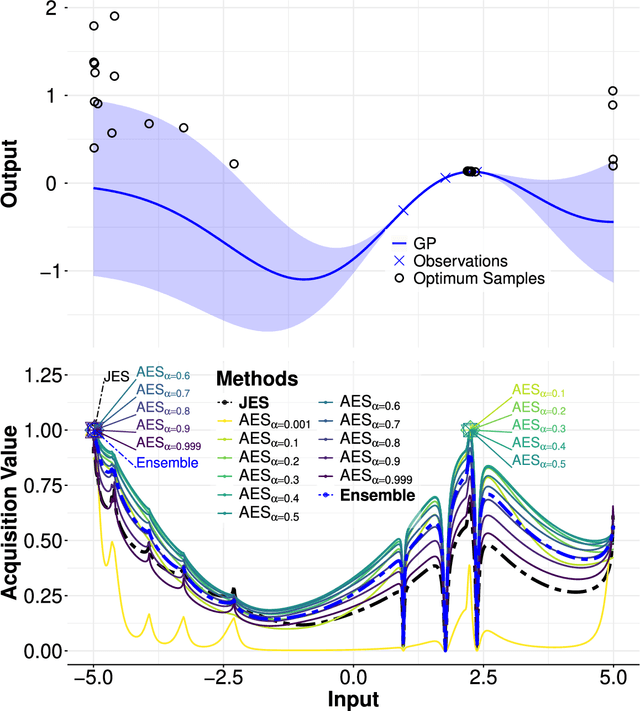

Bayesian optimization (BO) methods based on information theory have obtained state-of-the-art results in several tasks. These techniques rely heavily on the Kullback-Leibler (KL) divergence to compute the acquisition function. In this work, we introduce a novel information-based class of acquisition functions for BO called Alpha Entropy Search (AES). AES is based on the α-divergence, which generalizes the KL divergence. At each iteration, AES selects as the next evaluation point the one whose associated target value has the highest level of dependency with respect to the location and associated value of the global maximum of the optimization problem. Dependency is measured in terms of the α-divergence, as an alternative to the KL divergence. Intuitively, this favors evaluating the objective function at the points that are most informative about the global maximum. The α-divergence has a free parameter α, which determines the behavior of the divergence, trading off between evaluating differences between distributions at a single mode and evaluating differences globally. Therefore, different values of α result in different acquisition functions. The AES acquisition function lacks a closed-form expression. However, we propose an efficient and accurate approximation using a truncated Gaussian distribution. In practice, the value of α can be chosen by the practitioner, but here we suggest using a combination of acquisition functions obtained by simultaneously considering a range of values of α. We provide an implementation of AES in BoTorch and evaluate its performance in synthetic, benchmark, and real-world experiments, the latter involving the tuning of the hyper-parameters of a deep neural network. These experiments show that the performance of AES is competitive with that of other information-based acquisition functions such as JES, MES, and PES.
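To make the role of α more concrete, the following is a minimal sketch of how an α-divergence generalizes the KL divergence. It assumes Amari's parameterization, D_α(p‖q) = (1 − Σ p^α q^(1−α)) / (α(1−α)), applied to small discrete distributions; the abstract does not state which parameterization the paper uses, so this form, the function names, and the example distributions `p` and `q` are all illustrative assumptions, not the AES implementation.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions given as lists of probabilities."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def alpha_divergence(p, q, alpha):
    """Amari alpha-divergence between discrete distributions p and q.

    Assumed form (not taken from the paper):
        D_alpha(p || q) = (1 - sum_i p_i^alpha * q_i^(1 - alpha)) / (alpha * (1 - alpha))
    The values alpha = 0 and alpha = 1 are only defined as limits, so they
    are rejected here instead of being special-cased.
    """
    if alpha in (0.0, 1.0):
        raise ValueError("alpha must differ from 0 and 1; use the KL limits instead")
    overlap = sum(pi ** alpha * qi ** (1.0 - alpha) for pi, qi in zip(p, q))
    return (1.0 - overlap) / (alpha * (1.0 - alpha))


# Hypothetical example distributions over three outcomes.
p = [0.7, 0.2, 0.1]
q = [0.4, 0.4, 0.2]

# Sweep alpha to see how the measured discrepancy changes.
for alpha in (0.001, 0.25, 0.5, 0.75, 0.999):
    print(f"alpha={alpha:5.3f}  D_alpha(p||q)={alpha_divergence(p, q, alpha):.5f}")

print(f"KL(p||q)={kl_divergence(p, q):.5f}  KL(q||p)={kl_divergence(q, p):.5f}")
```

Under this parameterization, values of α close to 1 approach KL(p‖q) and values close to 0 approach KL(q‖p), so sweeping α interpolates between the two directions of the KL divergence. This is one way to read the abstract's remark that different values of α yield different acquisition functions.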
