Aggregation of Multiple Knockoffs

Paper and Code

Feb 21, 2020

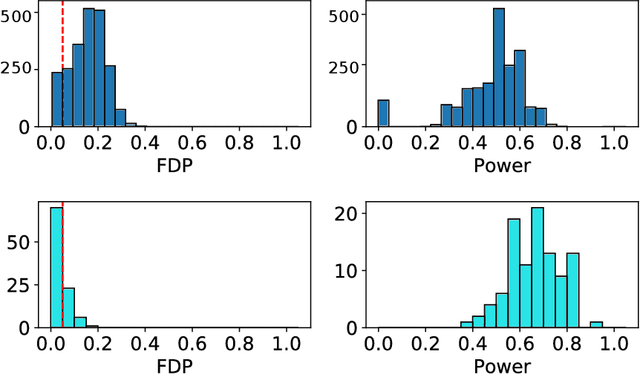

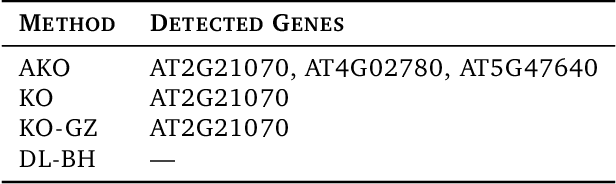

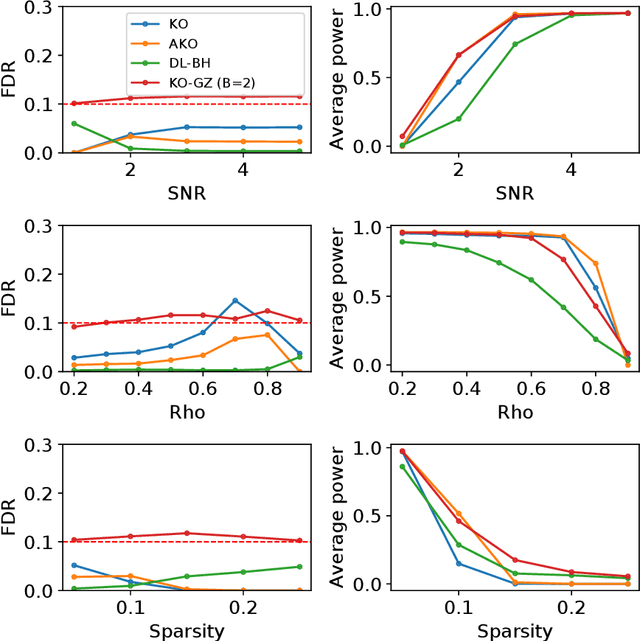

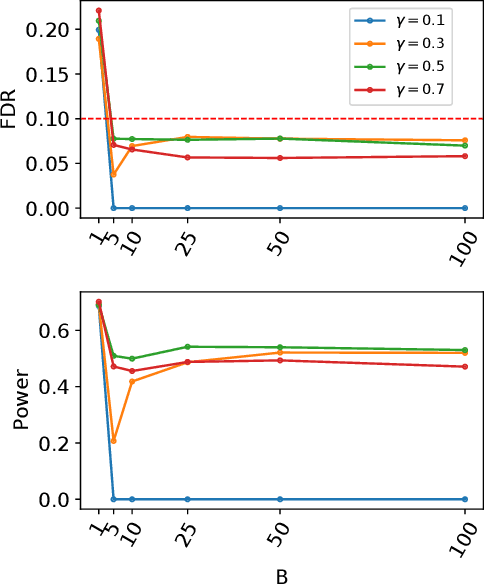

We develop an extension of the Knockoff Inference procedure introduced by Barber and Candès (2015). The new method, called Aggregation of Multiple Knockoffs (AKO), addresses the instability caused by the random nature of Knockoff-based inference. Specifically, AKO improves both stability and power over the original Knockoff algorithm while still maintaining guarantees for False Discovery Rate control. We provide a new inference procedure, prove its core properties, and demonstrate its benefits in a set of experiments on synthetic and real datasets.
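The abstract does not spell out how the knockoff draws are combined, so the following is only a minimal sketch under stated assumptions, not the authors' implementation. It assumes that each draw yields a vector of knockoff statistics `W`, that these are converted to empirical p-values, that the p-values are combined across draws by quantile aggregation, and that Benjamini-Hochberg is applied to the aggregated values. All function names and the `gamma` parameter are hypothetical, introduced here for illustration.

```python
import numpy as np


def intermediate_pvalues(w):
    """Convert one draw of knockoff statistics into empirical p-values.

    Assumed form: for W_j > 0, p_j = (1 + #{k : W_k <= -W_j}) / p, else p_j = 1.
    """
    w = np.asarray(w, dtype=float)
    p = w.size
    pvals = np.ones(p)
    for j in np.flatnonzero(w > 0):
        pvals[j] = (1 + np.sum(w <= -w[j])) / p
    return pvals


def quantile_aggregate(pvals, gamma=0.5):
    """Aggregate a (B, p) array of p-values across B draws (assumed rule):
    take the gamma-quantile per feature, divide by gamma, cap at 1."""
    pvals = np.asarray(pvals, dtype=float)
    return np.minimum(1.0, np.quantile(pvals, gamma, axis=0) / gamma)


def benjamini_hochberg(pvals, alpha=0.1):
    """Return the sorted indices selected by the Benjamini-Hochberg step-up rule."""
    pvals = np.asarray(pvals, dtype=float)
    p = pvals.size
    order = np.argsort(pvals)
    below = pvals[order] <= alpha * np.arange(1, p + 1) / p
    if not below.any():
        return np.array([], dtype=int)
    k = np.max(np.flatnonzero(below))  # largest rank passing the threshold
    return np.sort(order[: k + 1])


def aggregate_knockoff_selection(w_draws, alpha=0.1, gamma=0.5):
    """Hypothetical end-to-end helper: one statistics vector per knockoff draw."""
    per_draw = np.vstack([intermediate_pvalues(w) for w in w_draws])
    return benjamini_hochberg(quantile_aggregate(per_draw, gamma), alpha)
```

The intent of aggregating before thresholding is that a feature must look significant across a fraction of the draws, rather than in a single random draw, which is one plausible way to reduce the run-to-run variability the abstract describes.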

* Under review
