AffectNet+: A Database for Enhancing Facial Expression Recognition with Soft-Labels

Oct 29, 2024

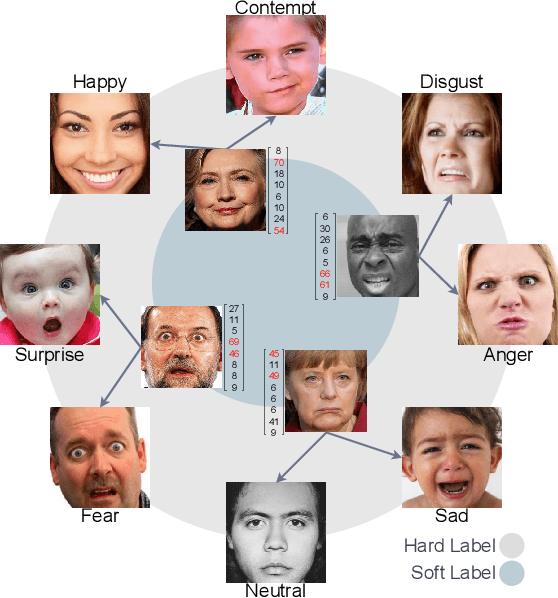

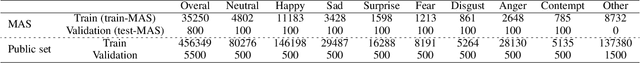

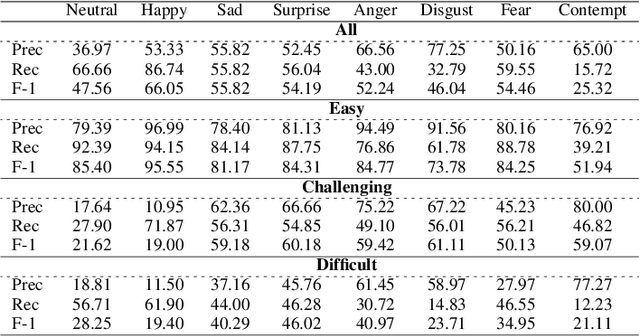

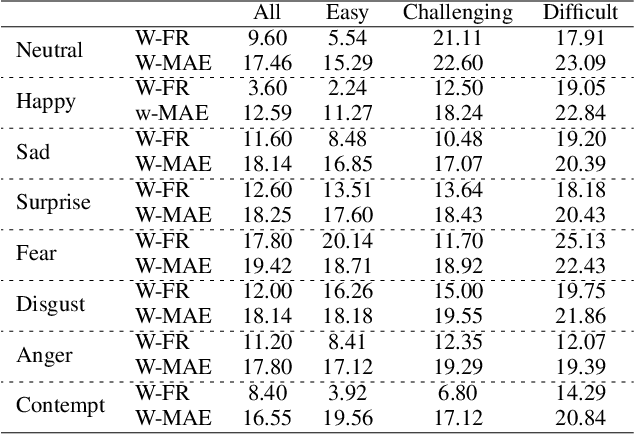

Automated Facial Expression Recognition (FER) is challenging due to intra-class variations and inter-class similarities. It is especially difficult when a facial expression reflects a mixture of emotions (also known as a compound expression). Existing FER datasets, such as AffectNet, provide discrete emotion labels (hard-labels), in which a single emotion category is assigned to each expression. To alleviate the inter- and intra-class challenges and to provide a better facial expression descriptor, we propose a new approach to creating FER datasets: a labeling method in which an image is labeled with more than one emotion (soft-labels), each with its own confidence. Specifically, we introduce the notion of soft-labels for facial expression datasets as a new approach in affective computing for more realistic recognition of facial expressions. To this end, we propose a novel methodology to accurately calculate soft-labels: vectors representing the extent to which multiple emotion categories are simultaneously present within a single facial expression. The advantages of the proposed method include smoother decision boundaries, support for multi-labeling, and mitigation of bias and data imbalance. Building upon AffectNet, we introduce AffectNet+, the next-generation facial expression dataset. It contains soft-labels, three subsets categorized by data complexity, and additional metadata such as age, gender, ethnicity, head pose, facial landmarks, valence, and arousal. AffectNet+ will be made publicly accessible to researchers.
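To make the distinction between hard-labels and soft-labels concrete, the following is a minimal Python sketch. It is not the authors' method for calculating soft-labels, which the abstract does not describe. The category ordering in `EMOTIONS`, the raw scores, the simple sum-normalization, the 0.25 threshold, and all function names are assumptions chosen purely for illustration.

```python
import math

# Assumed category list and ordering, for illustration only.
EMOTIONS = ["neutral", "happy", "sad", "surprise",
            "fear", "disgust", "anger", "contempt"]


def to_soft_label(scores):
    """Normalize non-negative per-emotion scores into a vector summing to 1.

    Plain sum-normalization is an assumption here, not the paper's procedure.
    """
    total = sum(scores)
    if total <= 0:
        raise ValueError("scores must contain at least one positive value")
    return [s / total for s in scores]


def hard_label(soft):
    """Collapse a soft-label to the single most confident emotion."""
    best = max(range(len(soft)), key=lambda i: soft[i])
    return EMOTIONS[best]


def multi_labels(soft, threshold=0.25):
    """Return every emotion whose confidence meets a (hypothetical) threshold."""
    return [e for e, p in zip(EMOTIONS, soft) if p >= threshold]


def soft_cross_entropy(pred, target, eps=1e-12):
    """Cross-entropy between a predicted distribution and a soft-label target."""
    return -sum(t * math.log(p + eps) for p, t in zip(pred, target))


# Made-up scores for one image showing a mostly happy, partly surprised face.
soft = to_soft_label([0, 6, 0, 3, 1, 0, 0, 0])
uniform = [1 / len(EMOTIONS)] * len(EMOTIONS)

print(hard_label(soft))    # single category, as in a hard-labeled dataset
print(multi_labels(soft))  # several categories, as a soft-label permits
print(soft_cross_entropy(soft, soft) < soft_cross_entropy(uniform, soft))
```

In this sketch a hard-label keeps only the top category, while the soft-label vector retains the secondary emotion, and a loss computed against the full vector rewards predictions that match the whole distribution rather than a single class.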
