Adversarial Test on Learnable Image Encryption

Jul 31, 2019

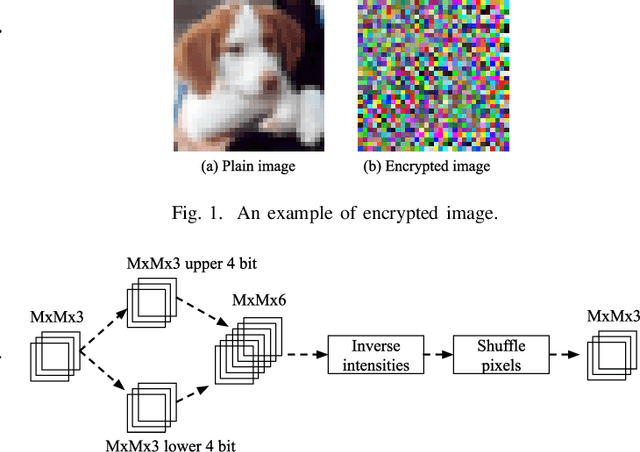

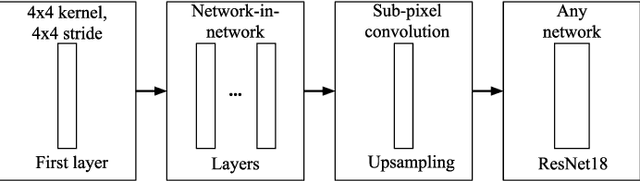

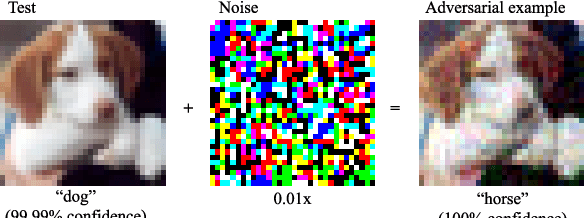

Data used for deep learning should be protected to preserve privacy. Researchers have proposed learnable image encryption to meet this requirement. However, existing privacy-preserving approaches have not considered the threat of adversarial attacks. In this paper, we run an adversarial test on learnable image encryption in five different scenarios. The results show that the network behaves differently in the variable-key scenarios and suggest that learnable image encryption provides a certain level of adversarial robustness.

* To appear in the 2019 IEEE 8th Global Conference on Consumer Electronics (GCCE 2019)
