Adversarial Black-Box Attacks for Automatic Speech Recognition Systems Using Multi-Objective Genetic Optimization

Paper and Code

Nov 04, 2018

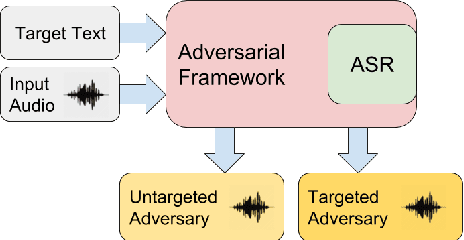

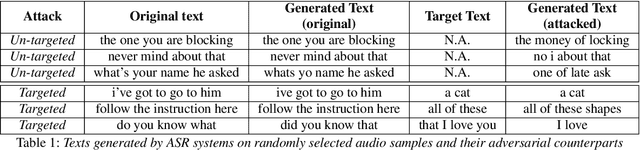

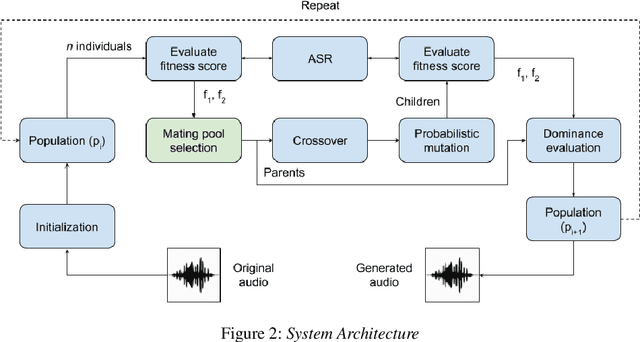

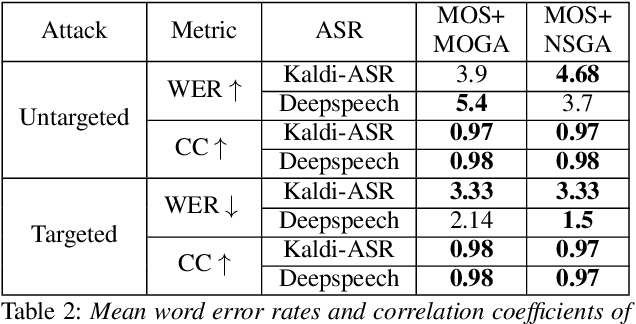

Adversarial inputs that fool deep neural networks have exposed a significant vulnerability in current state-of-the-art systems across multiple domains. Both black-box and white-box approaches have been used either to replicate the model itself or to craft examples that cause the model to fail. In this work, we use a multi-objective genetic algorithm to perform both targeted and untargeted black-box attacks on automatic speech recognition (ASR) systems. The main contribution of this research is a generic framework that can be used to attack any ASR system, even if its internal workings are hidden. During the untargeted attacks, the Word Error Rate (WER) of the ASR degrades from 0.5 to 5.4, indicating the potency of our approach. In targeted attacks, our solution reaches a WER of 2.14. In both attacks, the adversarial samples maintain a high acoustic similarity of 0.98 and 0.97.
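Since the results above are reported as WER, a small sketch of how that metric is commonly computed may help in reading them. It assumes the standard definition (word-level edit distance divided by the number of reference words); the abstract does not state the exact normalization or scale the authors use, so the function name `word_error_rate` and the example sentences are illustrative only.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length.

    Assumption: standard WER = (substitutions + deletions + insertions) / N,
    where N is the number of words in the reference transcript.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference prefix
    # processed so far and the first j words of the hypothesis.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # Hypothetical transcripts, not taken from the paper.
    print(word_error_rate("open the door", "open the door"))
    print(word_error_rate("open the door", "close the door"))
    print(word_error_rate("yes", "no no no"))
```

Under this definition the value is not bounded by 1: when the hypothesis contains many inserted words, the edit distance can exceed the reference length, as in the last call above.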
