AdaVAE: Exploring Adaptive GPT-2s in Variational Auto-Encoders for Language Modeling

May 20, 2022

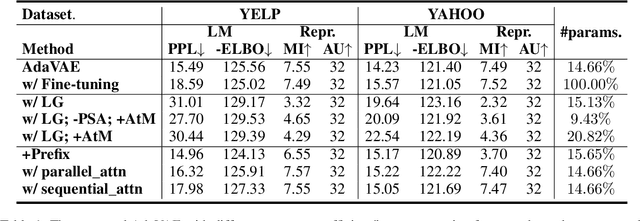

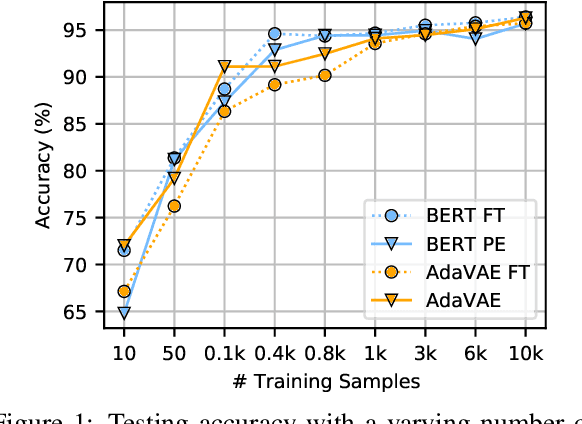

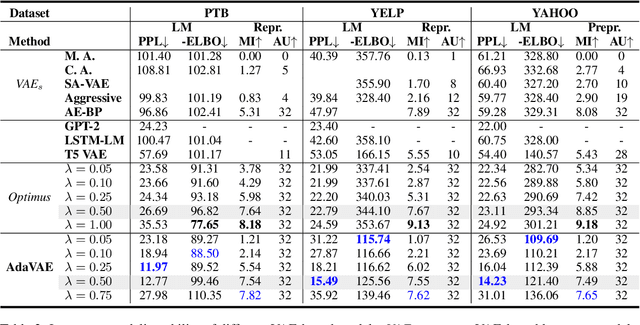

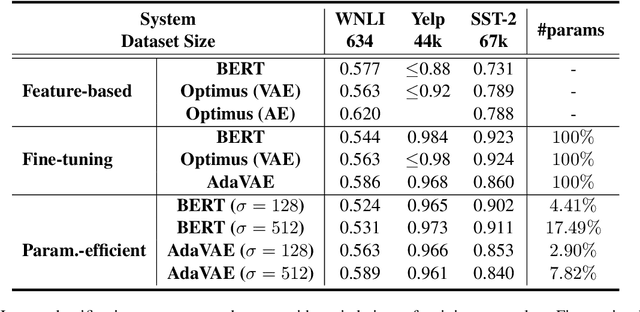

The Variational Auto-Encoder (VAE) has become the de facto learning paradigm for achieving both representation learning and generation for natural language. However, existing VAE-based language models either employ elementary RNNs, which are not powerful enough to handle complex situations, or fine-tune two pre-trained language models (PLMs) for every downstream task, which is highly resource-intensive. In this paper, we introduce AdaVAE, the first VAE framework empowered with adaptive GPT-2s. Unlike existing systems, AdaVAE unifies the encoder and decoder of the VAE by using GPT-2s equipped with adaptive parameter-efficient components. Experiments along multiple dimensions show that AdaVAE organizes language better in both generation and representation modeling, even with less than 15% of its parameters activated during training. Our code is available at https://github.com/ImKeTT/adavae.
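The abstract does not spell out the latent-variable machinery that any VAE language model relies on. As a hedged illustration, here is a minimal plain-Python sketch of the standard VAE pieces: Gaussian reparameterization and the closed-form KL divergence to a standard normal prior, combined into a negative ELBO. It is not taken from the AdaVAE repository; the function names and the optional `beta` weight are illustrative assumptions. In a GPT-2-based VAE, `mu` and `logvar` would presumably be produced by the encoder and `recon_nll` by the decoder.

```python
import math
import random


def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), elementwise.

    sigma = exp(0.5 * logvar); sampling through eps keeps z differentiable
    w.r.t. mu and logvar in an autodiff framework (plain floats here).
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) )."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, logvar))


def negative_elbo(recon_nll, mu, logvar, beta=1.0):
    """Reconstruction negative log-likelihood plus a beta-weighted KL term.

    beta=1.0 gives the standard VAE objective; the beta knob is an
    illustrative assumption, not a documented AdaVAE setting.
    """
    return recon_nll + beta * kl_to_standard_normal(mu, logvar)


if __name__ == "__main__":
    rng = random.Random(0)
    mu, logvar = [1.0, 0.0], [0.0, 0.0]
    z = reparameterize(mu, logvar, rng)
    print("sampled z:", z)
    print("KL:", kl_to_standard_normal(mu, logvar))  # 0.5 * 1.0**2 = 0.5
    print("neg. ELBO:", negative_elbo(3.0, mu, logvar))  # 3.0 + 0.5 = 3.5
```

With a unit-variance posterior, the KL term reduces to half the squared norm of `mu`, which is why `mu = [1.0, 0.0]` yields 0.5.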