AdaSparse: Learning Adaptively Sparse Structures for Multi-Domain Click-Through Rate Prediction

Paper and Code

Jul 01, 2022

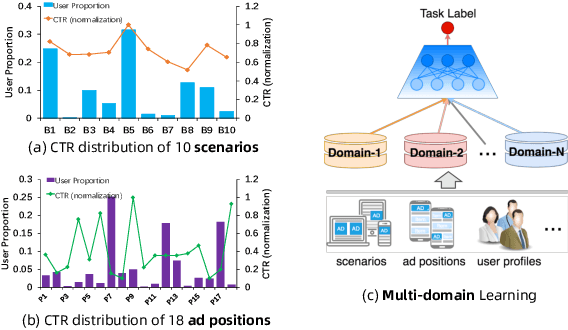

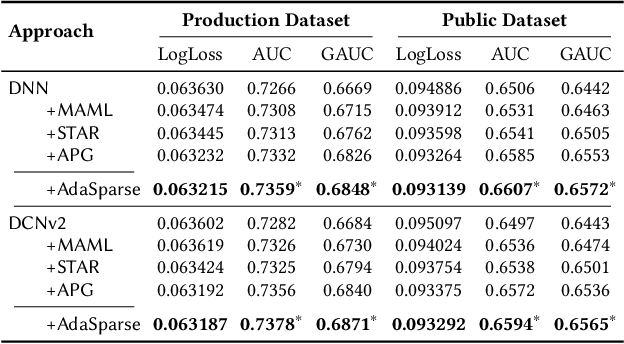

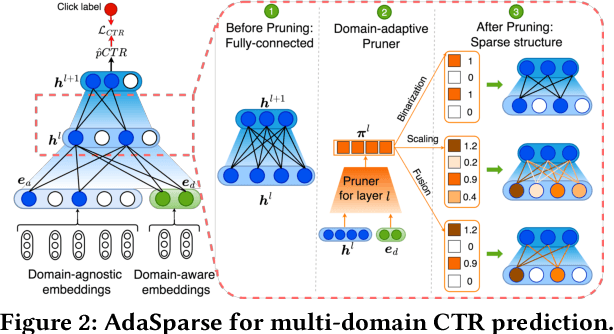

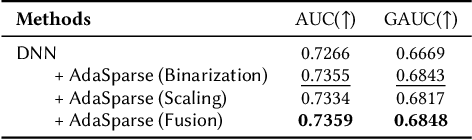

Click-through rate (CTR) prediction is a fundamental technique in recommendation and advertising systems. Recent studies have proved that learning a unified model to serve multiple domains is effective to improve the overall performance. However, it is still challenging to improve generalization across domains under limited training data, and hard to deploy current solutions due to their computational complexity. In this paper, we propose a simple yet effective framework AdaSparse for multi-domain CTR prediction, which learns adaptively sparse structure for each domain, achieving better generalization across domains with lower computational cost. In AdaSparse, we introduce domain-aware neuron-level weighting factors to measure the importance of neurons, with that for each domain our model can prune redundant neurons to improve generalization. We further add flexible sparsity regularizations to control the sparsity ratio of learned structures. Offline and online experiments show that AdaSparse outperforms previous multi-domain CTR models significantly.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge