Adaptive Label Smoothing for Out-of-Distribution Detection

Paper and Code

Oct 08, 2024

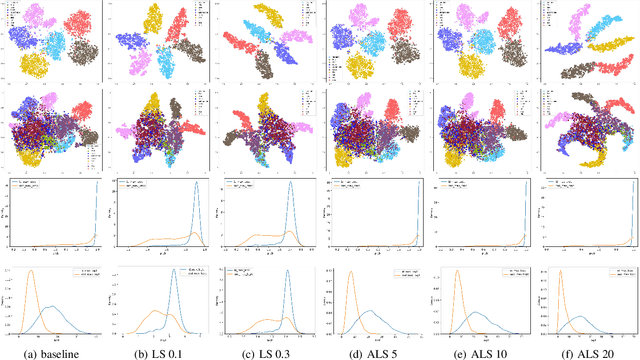

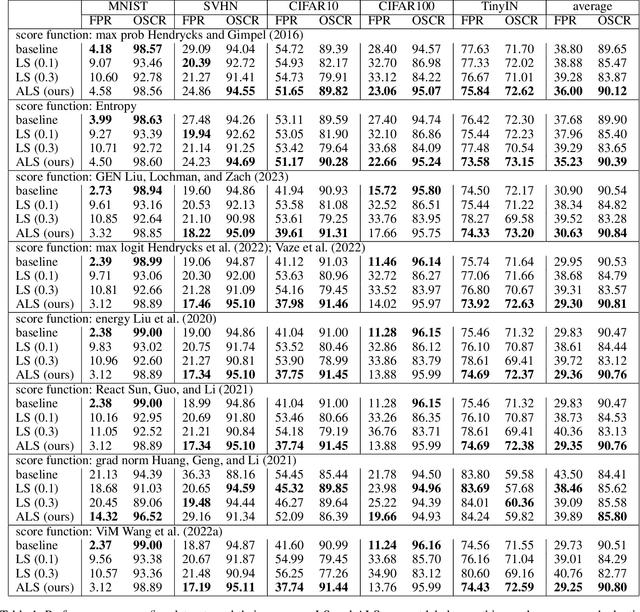

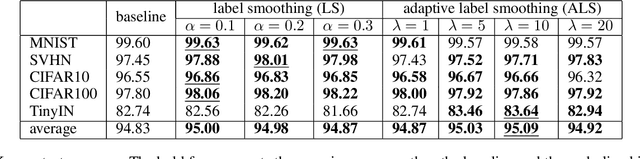

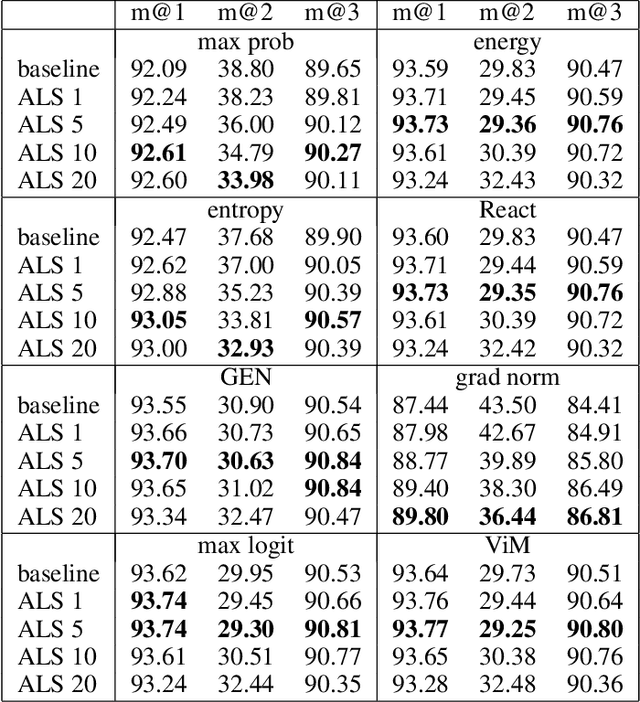

Out-of-distribution (OOD) detection, which aims to distinguish unknown classes from known classes, has received increasing attention recently. A main challenge is the unavailability of samples from the unknown classes during training, and an effective strategy is to improve performance on the known classes. Beneficial strategies such as data augmentation and longer training are thus one way to improve OOD detection. However, label smoothing, an effective method for classifying known classes, degrades OOD detection performance, and this phenomenon remains underexplored. In this paper, we first show through analysis that the limited, predefined learning target in label smoothing results in a smaller maximal probability and logit, which in turn leads to worse OOD detection performance. To mitigate this issue, we then propose a novel regularization method called adaptive label smoothing (ALS), whose core idea is to push the non-true classes toward equal probabilities while leaving the maximal probability neither fixed nor limited. Extensive experimental results on six datasets with two backbones suggest that ALS helps both to classify known samples and to discern unknown samples with clear margins. Our code will be made publicly available.
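The abstract's argument can be illustrated with a small sketch. The first part shows why a standard label-smoothing target caps the maximal probability: with K classes and smoothing factor eps, the target for the true class is (1 - eps) + eps / K, and cross-entropy against that target is minimized when the prediction equals it. The second part is only a hypothetical stand-in for the idea of pushing non-true classes toward equal probabilities without constraining the true-class probability; the abstract does not give the ALS loss, so `non_true_uniformity_penalty`, `illustrative_loss`, and the weight `lam` are assumptions made for illustration, not the authors' formulation.

```python
import math

def softmax(logits):
    # Numerically stable softmax over a list of logits.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def smoothed_target(true_idx, num_classes, eps):
    # Standard label smoothing: (1 - eps) * one_hot + eps / K.
    base = eps / num_classes
    return [base + (1.0 - eps if k == true_idx else 0.0)
            for k in range(num_classes)]

def cross_entropy(target, probs):
    # Cross-entropy between a target distribution and predicted probabilities.
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0.0)

def non_true_uniformity_penalty(probs, true_idx):
    # HYPOTHETICAL regularizer (not taken from the paper):
    # KL(uniform || non-true probabilities renormalized to sum to 1).
    # It is zero whenever all non-true classes share the same probability,
    # whatever value the true-class probability takes.
    rest = [p for k, p in enumerate(probs) if k != true_idx]
    total = sum(rest)
    q = [p / total for p in rest]
    u = 1.0 / len(q)
    return sum(u * math.log(u / qi) for qi in q)

def illustrative_loss(logits, true_idx, lam=1.0):
    # HYPOTHETICAL combined loss: one-hot cross-entropy plus the penalty above.
    probs = softmax(logits)
    ce = -math.log(probs[true_idx])
    return ce + lam * non_true_uniformity_penalty(probs, true_idx)

if __name__ == "__main__":
    target = smoothed_target(true_idx=0, num_classes=4, eps=0.1)
    confident = softmax([10.0, 0.0, 0.0, 0.0])
    # Under label smoothing, a very confident prediction is penalized
    # relative to a prediction that matches the smoothed target.
    print("max of smoothed target:", max(target))
    print("CE at target:", cross_entropy(target, target))
    print("CE at confident prediction:", cross_entropy(target, confident))
    # The illustrative loss keeps decreasing as the true logit grows.
    print("loss, true logit 5:", illustrative_loss([5.0, 0.0, 0.0, 0.0], 0))
    print("loss, true logit 10:", illustrative_loss([10.0, 0.0, 0.0, 0.0], 0))
```

With K = 4 and eps = 0.1 the smoothed target peaks at about 0.925, so training toward it discourages larger maximal probabilities and logits, which are the quantities many OOD scores rely on. The penalty in the sketch depends only on the relative sizes of the non-true probabilities, which is one simple way to leave the maximal probability unconstrained.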
