Achieving Model Fairness in Vertical Federated Learning

Sep 25, 2021

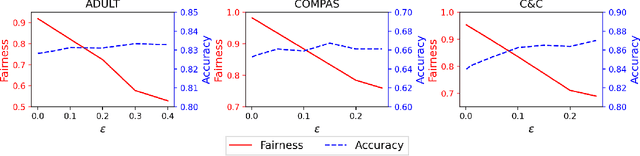

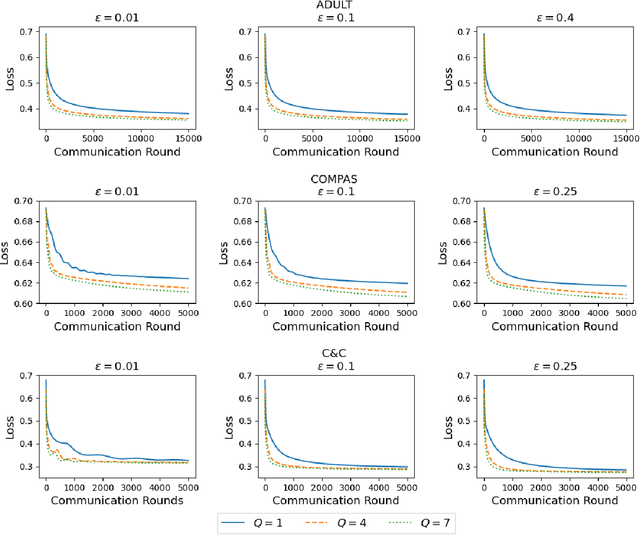

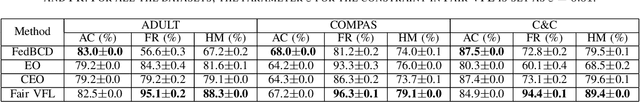

Vertical federated learning (VFL) enables multiple enterprises that hold non-overlapping features to strengthen their machine learning models without disclosing their private data or model parameters, and it has received increasing attention lately. Like other machine learning algorithms, VFL suffers from fairness issues: the learned model may discriminate unfairly against groups defined by sensitive attributes. To tackle this problem, we propose a fair VFL framework. First, we systematically formulate the problem of training fair models in VFL as a constrained optimization problem. To solve it in a federated manner, we consider its equivalent dual form and develop an asynchronous gradient coordinate-descent ascent algorithm, in which each data party performs multiple parallelized local updates per communication round to reduce the number of communication rounds. We prove that the algorithm finds a $\delta$-stationary point of the dual objective in $\mathcal{O}(\delta^{-4})$ communication rounds under mild conditions. Finally, extensive experiments on three benchmark datasets demonstrate the superior performance of our method in training fair models.
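To make the idea of solving a fairness-constrained problem through its dual more concrete, here is a minimal single-machine sketch of gradient descent-ascent on a Lagrangian. It is not the algorithm from the paper: there are no parties, no asynchrony, and no local updates. The fairness constraint used here (a bound `c` on the covariance between the sensitive attribute and the model score), the synthetic data, and all function and parameter names are assumptions chosen for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def make_data(n=2000, seed=0):
    """Synthetic data (hypothetical): feature x1 is correlated with the sensitive attribute s."""
    rng = np.random.default_rng(seed)
    s = rng.integers(0, 2, size=n).astype(float)
    x1 = s + rng.normal(size=n)
    x2 = rng.normal(size=n)
    y = (x1 + x2 + 0.5 * rng.normal(size=n) > 0.5).astype(float)
    X = np.column_stack([x1, x2, np.ones(n)])  # last column is the bias term
    return X, y, s


def train(X, y, s, c=0.05, lr_w=0.1, lr_lam=0.1, steps=3000):
    """Logistic regression subject to |g(w)| <= c, where
    g(w) = mean((s - mean(s)) * (X @ w)) is an assumed fairness measure.

    Lagrangian: loss(w) + lam[0] * (g(w) - c) + lam[1] * (-g(w) - c), lam >= 0.
    """
    n, d = X.shape
    a = ((s - s.mean())[:, None] * X).mean(axis=0)  # g(w) = a @ w is linear in w
    w = np.zeros(d)
    lam = np.zeros(2)
    for _ in range(steps):
        p = sigmoid(X @ w)
        grad_loss = X.T @ (p - y) / n
        g = a @ w
        # Primal step: gradient descent on the Lagrangian with respect to w.
        w = w - lr_w * (grad_loss + (lam[0] - lam[1]) * a)
        # Dual step: gradient ascent on the multipliers, projected onto lam >= 0.
        lam = np.maximum(0.0, lam + lr_lam * np.array([g - c, -g - c]))
    return w, lam, a @ w


X, y, s = make_data()
# Setting lr_lam=0 keeps the multipliers at zero, i.e. unconstrained training.
w_plain, _, g_plain = train(X, y, s, lr_lam=0.0)
w_fair, lam, g_fair = train(X, y, s)
print("covariance without constraint:", g_plain)
print("covariance with constraint:   ", g_fair)
print("multipliers:", lam)
```

In this sketch the multipliers grow while the constraint is violated, which pushes the weights toward the feasible region. In the federated setting described in the abstract, each party would instead update only the block of parameters for its own features.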
