Achieving Downstream Fairness with Geometric Repair

Paper and Code

Mar 14, 2022

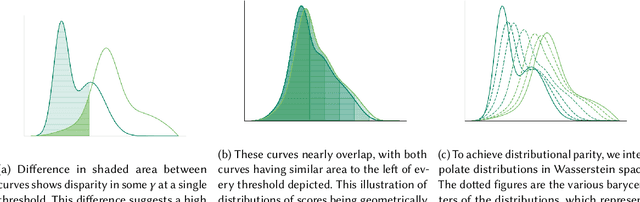

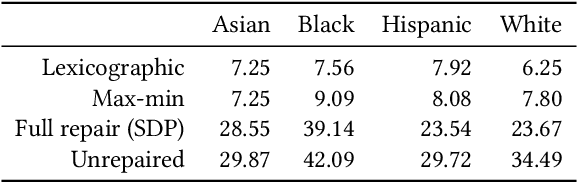

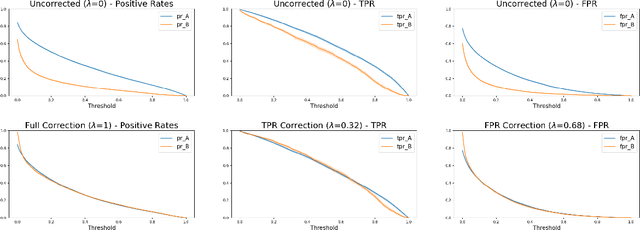

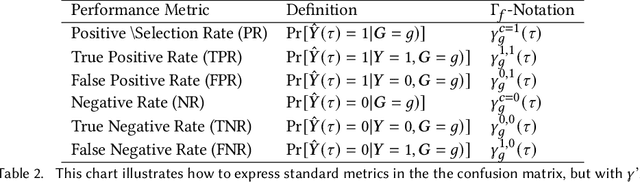

Consider a scenario where an upstream model developer must train a fair model but is unaware of the fairness requirements of a downstream model user or stakeholder. In the context of fair classification, we present a technique that specifically addresses this setting by post-processing a regressor's scores such that they yield fair classifications for any downstream choice of decision threshold. To begin, we leverage ideas from optimal transport to show how this can be achieved for binary protected groups across a broad class of fairness metrics. Then, we extend our approach to the setting where a protected attribute takes on multiple values by recasting our technique as a convex optimization problem that leverages lexicographic fairness.
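To make the idea of threshold-independent score repair concrete, here is a minimal sketch for the binary-group case. It is not the paper's implementation: it assumes demographic parity as the fairness metric, uses the standard one-dimensional result that the Wasserstein barycenter's quantile function is the weighted average of the group quantile functions, and weights groups by their sample share. The function name `geometric_repair` and the interpolation parameter `lam` are hypothetical names chosen for illustration.

```python
import numpy as np

def geometric_repair(scores, groups, lam=1.0):
    """Move each group's scores a fraction `lam` of the way toward the
    1-D Wasserstein barycenter of the group score distributions.

    Illustrative sketch only; names and weighting are assumptions.
    lam=0 leaves scores unchanged, lam=1 maps every group onto the barycenter.
    """
    scores = np.asarray(scores, dtype=float)
    groups = np.asarray(groups)
    labels = np.unique(groups)
    # Assumption: barycenter weights are the empirical group proportions.
    weights = {g: np.mean(groups == g) for g in labels}
    repaired = np.empty_like(scores)
    for g in labels:
        mask = groups == g
        s = scores[mask]
        # Empirical rank of each score within its own group, strictly in (0, 1).
        ranks = (np.argsort(np.argsort(s)) + 0.5) / len(s)
        # Barycenter quantile function: weighted average of group quantile functions.
        target = sum(weights[h] * np.quantile(scores[groups == h], ranks)
                     for h in labels)
        # Geometric interpolation between the original and fully repaired score.
        repaired[mask] = (1.0 - lam) * s + lam * target
    return repaired

# Synthetic example: two groups with very different score distributions.
rng = np.random.default_rng(0)
scores = np.concatenate([rng.beta(2, 5, size=500), rng.beta(5, 2, size=300)])
groups = np.concatenate([np.zeros(500, dtype=int), np.ones(300, dtype=int)])
fair_scores = geometric_repair(scores, groups, lam=1.0)

def parity_gap(s, g, threshold):
    """Absolute difference in positive-classification rates between groups 0 and 1."""
    return abs(np.mean(s[g == 0] > threshold) - np.mean(s[g == 1] > threshold))
```

With `lam=1.0` both groups share approximately the same repaired score distribution, so `parity_gap(fair_scores, groups, t)` should be close to zero for any threshold `t` a downstream user picks. Intermediate values of `lam` trade off fidelity to the original scores against the size of the gap.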
