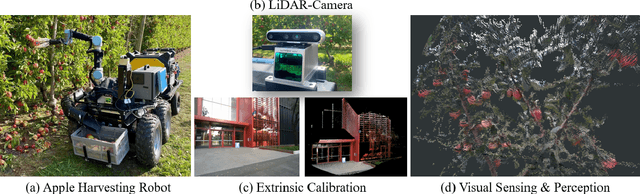

Accurate Fruit Localisation for Robotic Harvesting using High Resolution LiDAR-Camera Fusion

Paper and Code

May 01, 2022

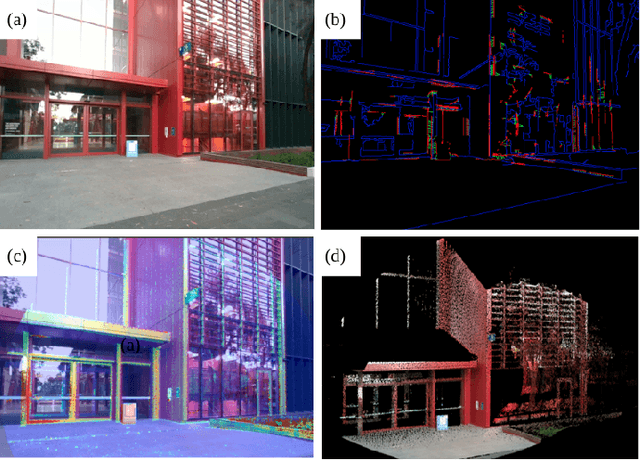

Accurate depth-sensing plays a crucial role in securing a high success rate of robotic harvesting in natural orchard environments. Solid-state LiDAR (SSL), a recently introduced LiDAR technique, can perceive high-resolution geometric information of the scenes, which can be potential utilised to receive accurate depth information. Meanwhile, the fusion of the sensory information from LiDAR and camera can significantly enhance the sensing ability of the harvesting robots. This work introduces a LiDAR-camera fusion-based visual sensing and perception strategy to perform accurate fruit localisation for a harvesting robot in the apple orchards. Two SOTA extrinsic calibration methods, target-based and targetless-based, are applied and evaluated to obtain the accurate extrinsic matrix between the LiDAR and camera. With the extrinsic calibration, the point clouds and color images are fused to perform fruit localisation using a one-stage instance segmentation network. Experimental shows that LiDAR-camera achieves better quality on visual sensing in the natural environments. Meanwhile, introducing the LiDAR-camera fusion largely improves the accuracy and robustness of the fruit localisation. Specifically, the standard deviations of fruit localisation by using LiDAR-camera at 0.5 m, 1.2 m, and 1.8 m are 0.245, 0.227, and 0.275 cm respectively. These measurement error is only one one fifth of that from Realsense D455. Lastly, we have attached our visualised point cloud to demonstrate the highly accurate sensing method.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge