ACCSAMS: Automatic Conversion of Exam Documents to Accessible Learning Material for Blind and Visually Impaired

Paper and Code

May 29, 2024

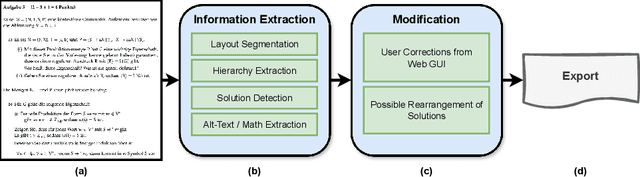

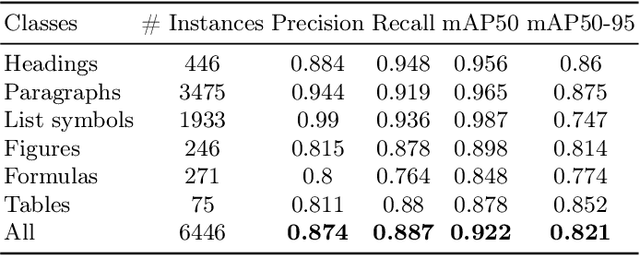

Exam documents are essential educational materials for exam preparation. However, they pose a significant academic barrier for blind and visually impaired students, as they are often created without accessibility considerations. Typically, these documents are incompatible with screen readers, contain excessive white space, and lack alternative text for visual elements. As a result, experienced sighted individuals frequently have to intervene and modify the format and content to make the documents accessible. We propose ACCSAMS, a semi-automatic system designed to enhance the accessibility of exam documents. Our system offers three key contributions: (1) creating an accessible layout and removing unnecessary white space, (2) adding navigational structures, and (3) adding the alternative text that visual elements previously lacked. Additionally, we present the first multilingual, manually annotated dataset, comprising 1,293 German and 900 English exam documents, which could serve as training data for deep learning models.
