Accelerating Deep Reinforcement Learning With the Aid of a Partial Model: Power-Efficient Predictive Video Streaming

Paper and Code

Mar 21, 2020

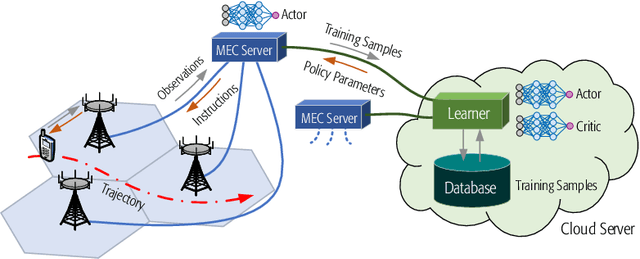

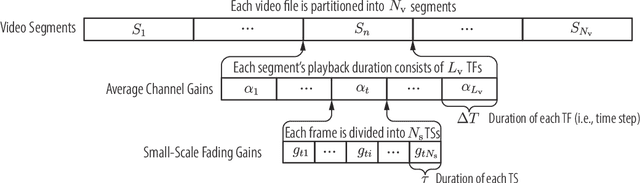

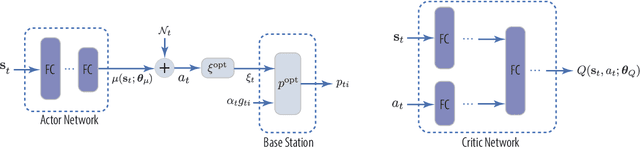

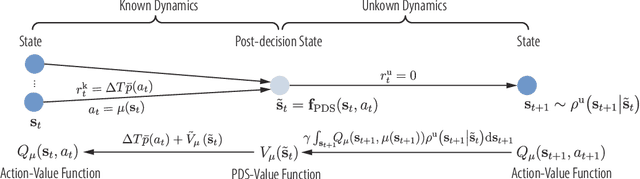

Predictive power allocation is conceived for power-efficient video streaming over mobile networks using deep reinforcement learning. The goal is to minimize the accumulated energy consumption over a complete video streaming session for a mobile user under the quality of service constraint that avoids video playback interruptions. To handle the continuous state and action spaces, we resort to deep deterministic policy gradient (DDPG) algorithm for solving the formulated problem. In contrast to previous predictive resource policies that first predict future information with historical data and then optimize the policy based on the predicted information, the proposed policy operates in an on-line and end-to-end manner. By judiciously designing the action and state that only depend on slowly-varying average channel gains, the signaling overhead between the edge server and the base stations can be reduced, and the dynamics of the system can be learned effortlessly. To improve the robustness of streaming and accelerate learning, we further exploit the partially known dynamics of the system by integrating the concepts of safer layer, post-decision state, and virtual experience into the basic DDPG algorithm. Our simulation results show that the proposed policies converge to the optimal policy derived based on perfect prediction of the future large-scale channel gains and outperforms the first-predict-then-optimize policy in the presence of prediction errors. By harnessing the partially known model of the system dynamics, the convergence speed can be dramatically improved.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge