AANet: Aggregation and Alignment Network with Semi-hard Positive Sample Mining for Hierarchical Place Recognition

Oct 08, 2023

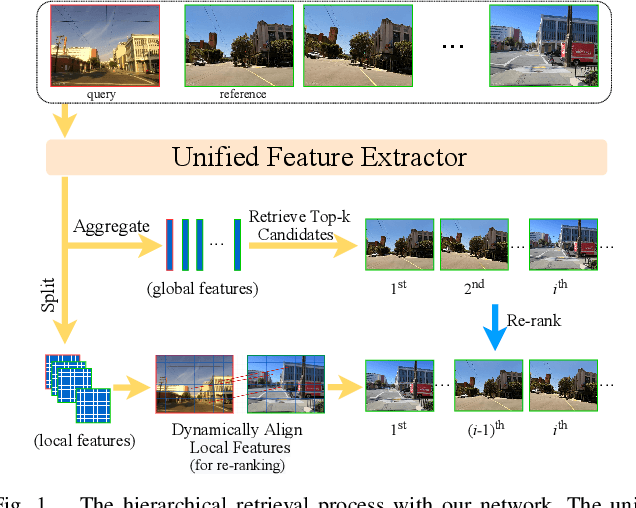

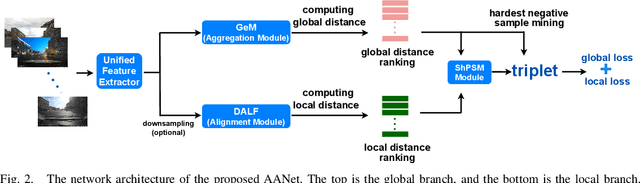

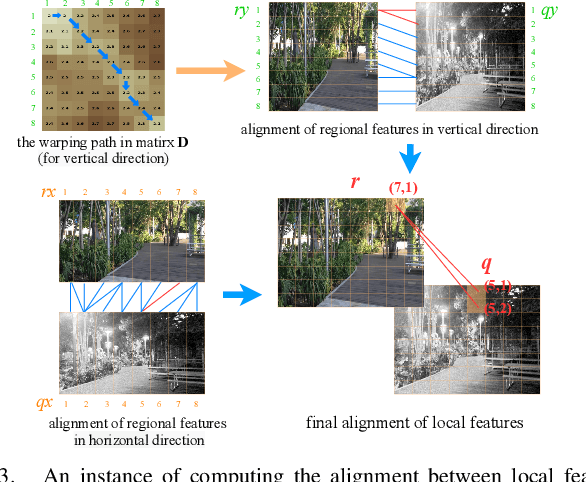

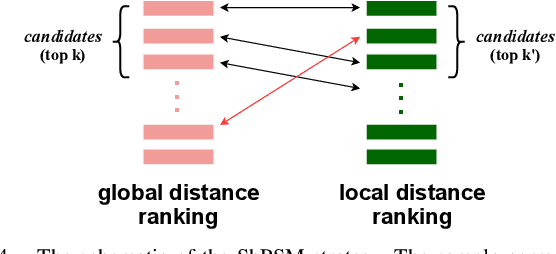

Visual place recognition (VPR), which localizes a robot from visual information, is an active research topic in robotics. Hierarchical two-stage VPR methods have recently become popular because they offer a good trade-off between accuracy and efficiency. These methods retrieve the top-k candidate images using global features in the first stage, then re-rank the candidates by matching local features in the second stage. However, re-ranking usually requires an additional algorithm (e.g. RANSAC) for geometric consistency verification, which is time-consuming. Here we propose a Dynamically Aligning Local Features (DALF) algorithm that aligns local features under spatial constraints. It is significantly more efficient than methods that need geometric consistency verification. We present a unified network, which we call AANet, that extracts global features for candidate retrieval via an aggregation module and aligns local features for re-ranking via the DALF alignment module. Meanwhile, many works use only the easiest positive sample in each triplet for weakly supervised training, which limits the network's ability to recognize harder positive pairs. To address this issue, we propose a Semi-hard Positive Sample Mining (ShPSM) strategy that selects appropriately hard positive images for training more robust VPR networks. Extensive experiments on four benchmark VPR datasets show that the proposed AANet can outperform several state-of-the-art methods with less time consumption. The code is released at https://github.com/Lu-Feng/AANet.
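
The generic two-stage pipeline described above (global retrieval of top-k candidates, then local-feature re-ranking) can be illustrated with a minimal NumPy sketch. This is not the AANet implementation and does not reproduce DALF: the re-ranking score here is a simple mutual-nearest-neighbour count used as a stand-in, and all function names (`retrieve_top_k`, `mutual_nn_score`, `hierarchical_search`) are hypothetical. Descriptors are assumed to be L2-normalized so that a dot product equals cosine similarity.

```python
import numpy as np


def retrieve_top_k(query_global, db_global, k):
    """Stage 1: rank database images by global-descriptor similarity.

    Assumes L2-normalized descriptors, so the dot product is cosine similarity.
    """
    sims = db_global @ query_global          # shape: (num_db_images,)
    return np.argsort(-sims)[:k]             # indices of the k most similar images


def mutual_nn_score(query_local, cand_local):
    """Stand-in local matching score (NOT DALF): count mutual nearest neighbours."""
    sim = query_local @ cand_local.T         # shape: (num_query_desc, num_cand_desc)
    nn_of_query = sim.argmax(axis=1)         # best candidate descriptor per query descriptor
    nn_of_cand = sim.argmax(axis=0)          # best query descriptor per candidate descriptor
    # A match is mutual if the candidate's best query descriptor points back.
    mutual = nn_of_cand[nn_of_query] == np.arange(len(nn_of_query))
    return int(mutual.sum())


def hierarchical_search(query_global, query_local, db_global, db_local, k=3):
    """Stage 1 retrieval followed by stage 2 re-ranking of the k candidates."""
    candidates = retrieve_top_k(query_global, db_global, k)
    scores = np.array([mutual_nn_score(query_local, db_local[i]) for i in candidates])
    # Stable sort keeps the stage-1 order among candidates with equal scores.
    order = np.argsort(-scores, kind="stable")
    return candidates[order]
```

Only the k retrieved candidates are passed to the more expensive local matching step, which is where the efficiency of the hierarchical design comes from. Any local alignment method could replace `mutual_nn_score` without changing the surrounding structure.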
