A4T: Hierarchical Affordance Detection for Transparent Objects Depth Reconstruction and Manipulation


Jul 11, 2022

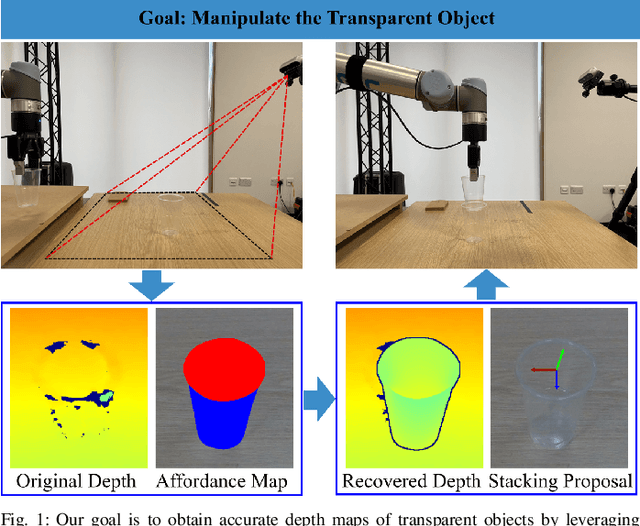

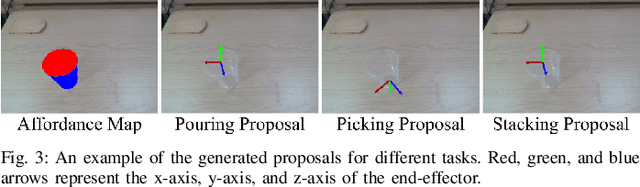

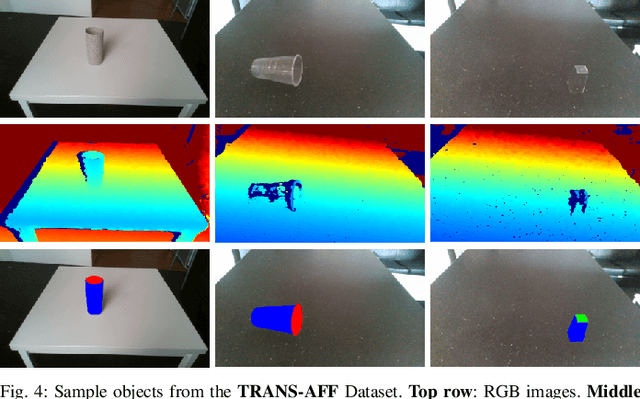

Transparent objects are common in daily life, so robots need to be able to handle them. However, light reflects off and refracts through transparent surfaces, which makes it difficult to obtain the accurate depth maps that handling tasks require. In this paper, we propose A4T, a novel affordance-based framework for depth reconstruction and manipulation of transparent objects. A hierarchical AffordanceNet first detects the transparent objects and their associated affordances, which encode the relative positions of an object's different parts. Then, given the predicted affordance map, a multi-step depth reconstruction method progressively reconstructs the depth maps of the transparent objects. Finally, the reconstructed depth maps are used for affordance-based manipulation of the objects. To evaluate our method, we construct TRANS-AFF, a real-world dataset with affordances and depth maps of transparent objects, which is the first of its kind. Extensive experiments show that our method predicts accurate affordance maps and significantly improves depth reconstruction of transparent objects over the state-of-the-art method, reducing the Root Mean Squared Error from 0.097 m to 0.042 m. Furthermore, we demonstrate the effectiveness of our method with a series of robotic manipulation experiments on transparent objects. See the supplementary video and results at https://sites.google.com/view/affordance4trans.
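For readers unfamiliar with the headline metric, the following is a minimal sketch of how a Root Mean Squared Error in meters can be computed between a reconstructed depth map and a ground-truth depth map. It is not the authors' evaluation code. The function name `masked_rmse`, the toy depth values, and the choice to score only pixels selected by a transparent-object mask are all assumptions made for illustration; the abstract does not specify the evaluation protocol.

```python
import math


def masked_rmse(pred, truth, mask):
    """Return the RMSE (same unit as the inputs, here meters) over masked pixels.

    pred, truth: 2D lists of depth values in meters.
    mask: 2D list of booleans; True marks pixels to evaluate
          (assumed here to be the transparent-object region).
    """
    squared_error_sum = 0.0
    pixel_count = 0
    for pred_row, truth_row, mask_row in zip(pred, truth, mask):
        for p, t, m in zip(pred_row, truth_row, mask_row):
            if m:
                squared_error_sum += (p - t) ** 2
                pixel_count += 1
    if pixel_count == 0:
        raise ValueError("mask selects no pixels")
    return math.sqrt(squared_error_sum / pixel_count)


# Toy 2x3 depth maps in meters (hypothetical values, not from TRANS-AFF).
truth = [[0.50, 0.50, 0.60],
         [0.70, 0.70, 0.80]]
pred = [[0.53, 0.46, 0.60],
        [0.70, 0.75, 0.90]]
mask = [[True, True, False],
        [False, True, False]]

rmse = masked_rmse(pred, truth, mask)
print(f"{rmse:.4f}")  # 0.0408
```

The three masked pixels have errors of 0.03 m, 0.04 m, and 0.05 m, so the result is the square root of their mean squared error. The large error at the unmasked bottom-right pixel does not affect the score, which is why the choice of mask matters when comparing reported numbers.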
