A Wireless-Vision Dataset for Privacy Preserving Human Activity Recognition

May 24, 2022

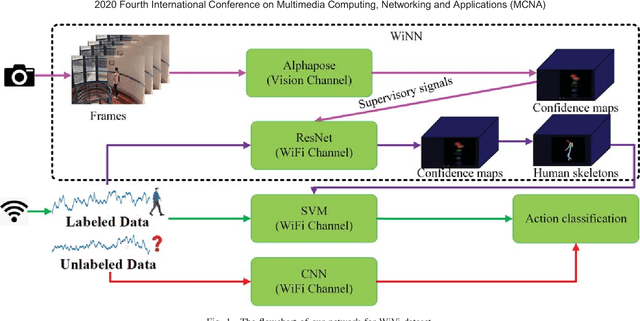

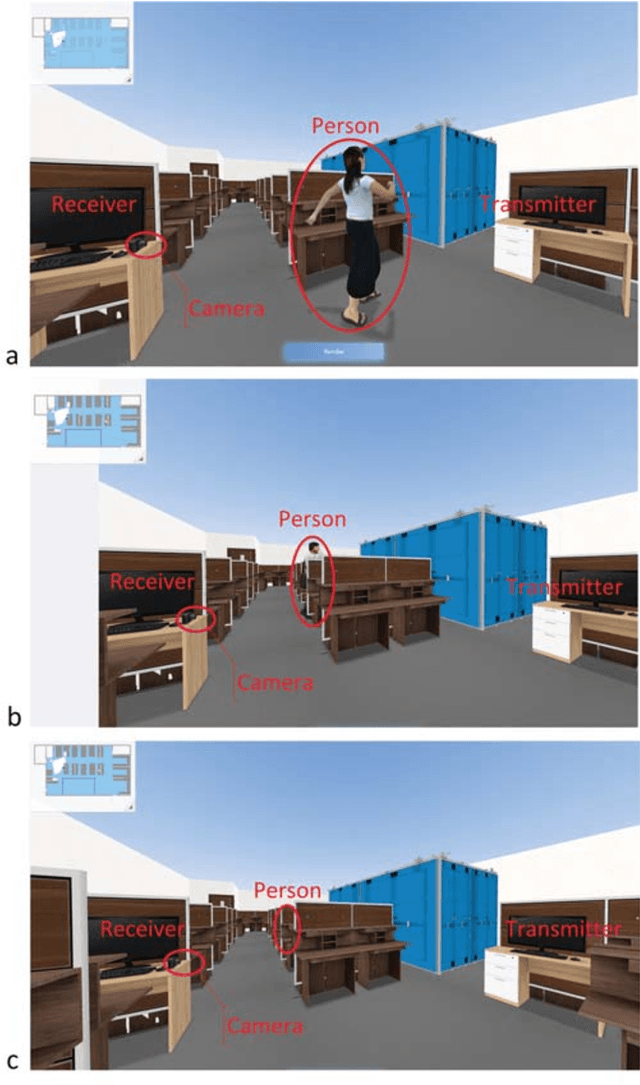

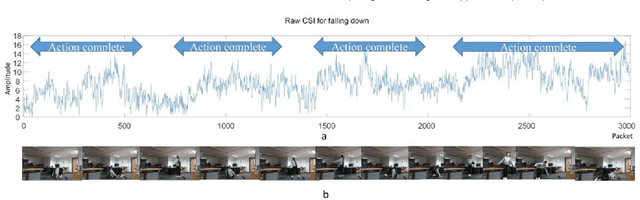

Human Activity Recognition (HAR) has recently received considerable attention in applications such as assisted living and remote monitoring. Existing solutions based on sensors and vision technologies have made progress but still suffer from considerable limitations in their environmental requirements. Wireless sensing, such as WiFi-based sensing, has emerged as a new paradigm because it is convenient and not restricted by the environment. In this paper, a new WiFi-based and video-based neural network (WiNN) is proposed to improve the robustness of activity recognition, with synchronized video serving as a supplement to the wireless data. Moreover, a wireless-vision benchmark (WiVi) is collected for nine-class action recognition under three different visual conditions: scenes without occlusion, with partial occlusion, and with full occlusion. Both a classical machine learning method, the support vector machine (SVM), and deep learning methods are used to verify the accuracy achievable on the data set. Our results show that the WiVi data set satisfies the primary demand and that all three branches in the proposed pipeline maintain more than $80\%$ activity recognition accuracy across action segment lengths from 1 s to 3 s. In particular, WiNN is the most robust method across all actions and all three segment lengths compared to the others.
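The abstract evaluates recognition over action segments of 1 s to 3 s. As a rough illustration of what fixed-length segmentation of a sampled stream can look like, here is a minimal sketch. It is not the authors' preprocessing: the function name `segment_stream`, the 100 Hz sampling rate, and the choice of non-overlapping windows are all assumptions made for the example.

```python
from typing import List, Sequence


def segment_stream(samples: Sequence, sample_rate_hz: int, window_s: float) -> List[Sequence]:
    """Split a time-ordered sample stream into non-overlapping fixed-length windows.

    Hypothetical helper: trailing samples that do not fill a whole window are dropped.
    """
    window_len = int(round(sample_rate_hz * window_s))
    if window_len <= 0:
        raise ValueError("window must contain at least one sample")
    n_windows = len(samples) // window_len
    return [samples[i * window_len:(i + 1) * window_len] for i in range(n_windows)]


# Six seconds of placeholder samples at an assumed 100 Hz sampling rate.
stream = list(range(600))

# Segment the same stream at the three durations mentioned in the abstract.
segments_by_duration = {w: segment_stream(stream, 100, w) for w in (1, 2, 3)}
```

Each resulting window would then be passed to a classifier such as an SVM or a neural network; longer windows give each sample more context but yield fewer training examples from the same recording.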
