A Two-stream Neural Network for Pose-based Hand Gesture Recognition

Jan 22, 2021

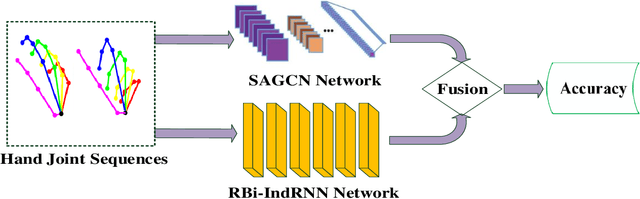

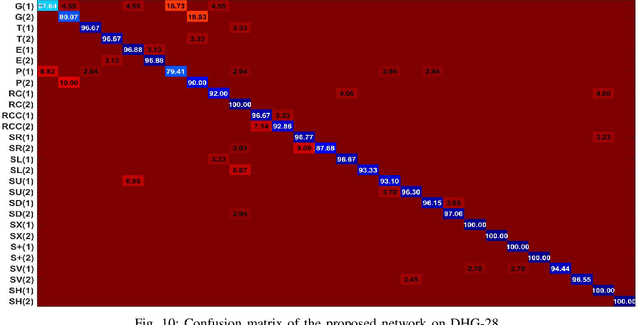

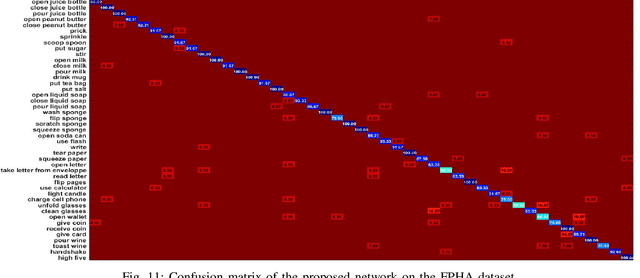

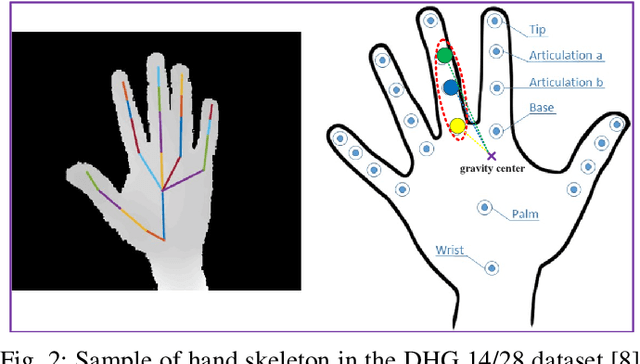

Pose-based hand gesture recognition has been widely studied in recent years. Compared with full-body action recognition, hand gestures involve joints that are more closely distributed in space and collaborate more strongly. Capturing these complex spatial features therefore requires a different approach from that used for action recognition. In addition, many gesture categories, such as "Grab" and "Pinch", have very similar motion or temporal patterns, which poses a challenge for temporal processing. To address these challenges, this paper proposes a two-stream neural network. One stream is a self-attention based graph convolutional network (SAGCN) that extracts short-term temporal information and hierarchical spatial information; the other is a residual-connection enhanced bidirectional Independently Recurrent Neural Network (RBi-IndRNN) that extracts long-term temporal information. The SAGCN uses a dynamic self-attention mechanism to adaptively exploit the relationships among all hand joints, in addition to the fixed topology and local feature extraction of the GCN. The RBi-IndRNN extends an IndRNN with bidirectional processing for temporal modelling. The two streams are fused for recognition. The method is validated on the Dynamic Hand Gesture dataset and the First-Person Hand Action dataset, where it achieves state-of-the-art performance.
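The two building blocks named in the abstract can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation. It assumes the standard IndRNN recurrence h_t = relu(W x_t + u * h_{t-1} + b) with an element-wise recurrent weight u, and it assumes scaled dot-product attention for the adaptive joint relationships. The function names, the choice to sum the two directions with the input as the residual, and the choice to add the attention matrix to the fixed adjacency are all hypothetical; the abstract does not specify these details, nor how the two streams are fused.

```python
import math

def matvec(W, x):
    # Dense matrix-vector product on nested lists.
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def indrnn(xs, W, u, b):
    # IndRNN layer: h_t = relu(W x_t + u * h_{t-1} + b).
    # u is a vector, so each hidden unit only sees its own previous state.
    h = [0.0] * len(u)
    outputs = []
    for x in xs:
        pre = matvec(W, x)
        h = [max(0.0, p + ui * hi + bi) for p, ui, hi, bi in zip(pre, u, h, b)]
        outputs.append(h)
    return outputs

def residual_bi_indrnn(xs, fwd, bwd):
    # Assumed combination: run one IndRNN forward in time and one backward,
    # then sum both directions with the input (residual connection).
    # Requires the hidden size to equal the input size.
    f = indrnn(xs, *fwd)
    r = indrnn(xs[::-1], *bwd)[::-1]
    return [[xi + fi + ri for xi, fi, ri in zip(x, ft, rt)]
            for x, ft, rt in zip(xs, f, r)]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention_adjacency(feats, A):
    # Assumed form: fixed skeleton adjacency A plus a row-normalised
    # dot-product attention matrix computed over all joints.
    d = len(feats[0])
    scores = [[sum(a * b for a, b in zip(fi, fj)) / math.sqrt(d) for fj in feats]
              for fi in feats]
    att = [softmax(row) for row in scores]
    return [[a + s for a, s in zip(a_row, s_row)] for a_row, s_row in zip(A, att)]

def graph_conv(feats, adj):
    # Aggregate joint features: out_i = sum_j adj[i][j] * feats[j].
    dims = range(len(feats[0]))
    return [[sum(adj[i][j] * feats[j][k] for j in range(len(feats))) for k in dims]
            for i in range(len(feats))]

if __name__ == "__main__":
    # Toy input: 3 joints with 2-D features, identity as the fixed topology.
    joints = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    adj = attention_adjacency(joints, identity)
    print(graph_conv(joints, adj))

    # Toy sequence: 2 time steps, 1 feature, shared weights in both directions.
    params = ([[1.0]], [0.5], [0.0])
    print(residual_bi_indrnn([[1.0], [1.0]], params, params))
```

In this sketch the attention term lets every joint contribute to every other joint's update, while the fixed adjacency keeps the skeleton's local structure. The backward pass gives each time step access to later frames, which is one way to help separate gestures with similar early motion.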
