A Systematic Literature Review on Federated Learning: From A Model Quality Perspective

Dec 01, 2020

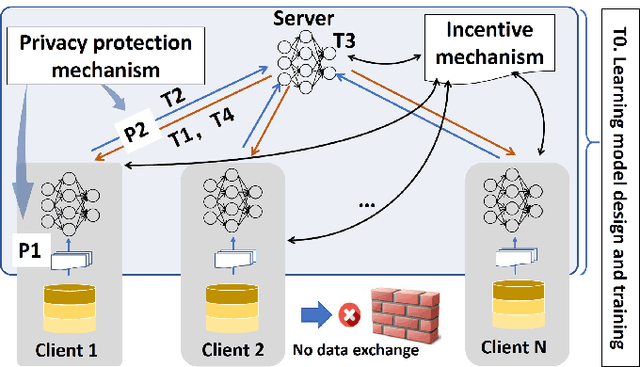

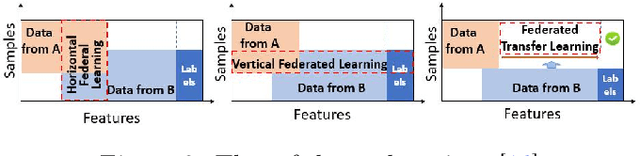

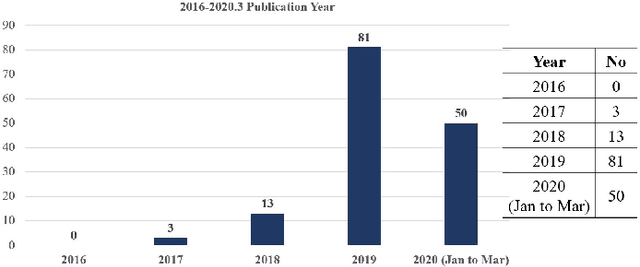

Federated Learning (FL) is an emerging technique that jointly trains a global model while the data remain on the clients, addressing the problem of data privacy protection through an encryption mechanism. The clients train their local models, and the server aggregates these models until convergence. In this process, the server uses an incentive mechanism to encourage clients to contribute high-quality, large-volume data to improve the global model. Although FL has been applied to the Internet of Things (IoT), medicine, manufacturing, and other domains, its application is still in its infancy, and many related issues remain to be solved. Improving the quality of FL models is both a current research hotspot and a challenging task. This paper systematically reviews and objectively analyzes approaches to improving the quality of FL models. We also examine the research and application trends of FL and compare the effectiveness of FL and non-FL approaches, because practitioners often worry that achieving privacy protection requires compromising learning quality. We use a systematic review method to analyze 147 recent articles related to FL. This review provides useful information and insights to both academics and industry practitioners. We investigate research questions about academic research and industrial application trends of FL and the essential factors affecting the quality of FL models, and we compare FL and non-FL algorithms in terms of learning quality. Based on the conclusions of our review, we give suggestions for improving FL model quality. Finally, we propose an FL application framework for practitioners.
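The train-then-aggregate loop described in the abstract can be illustrated with a minimal sketch. The abstract does not name an aggregation rule, so this example assumes federated averaging (a size-weighted mean of client models) on a toy one-dimensional linear regression. The function names (`local_train`, `aggregate`, `federated_training`, `make_client`), the synthetic data, and all hyperparameters are hypothetical, and the encryption and incentive mechanisms mentioned above are deliberately left out.

```python
import random


def local_train(weights, data, lr=0.1, epochs=5):
    """Run a few gradient-descent epochs on one client's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y  # prediction error of the linear model
            grad_w += 2 * err * x
            grad_b += 2 * err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return (w, b)


def aggregate(client_weights, client_sizes):
    """Assumed rule: average client models, weighted by local dataset size."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


def federated_training(clients, rounds=200, tol=1e-9):
    """Server loop: broadcast the model, collect updates, aggregate, repeat."""
    global_weights = (0.0, 0.0)
    sizes = [len(data) for data in clients]
    for _ in range(rounds):
        # Only model parameters leave a client; raw data stays local.
        updates = [local_train(global_weights, data) for data in clients]
        new_weights = aggregate(updates, sizes)
        change = max(abs(a - b) for a, b in zip(new_weights, global_weights))
        global_weights = new_weights
        if change < tol:  # simple convergence check on the global model
            break
    return global_weights


def make_client(n, seed):
    """Hypothetical synthetic client data drawn from y = 3x + 1."""
    rng = random.Random(seed)
    return [(x, 3.0 * x + 1.0) for x in (rng.uniform(-1, 1) for _ in range(n))]


clients = [make_client(20, 0), make_client(50, 1), make_client(30, 2)]
w, b = federated_training(clients)
print(f"global model: w={w:.4f}, b={b:.4f}")
```

Because every synthetic client here shares the same underlying relationship, the global model should approach the slope and intercept used to generate the data. Real deployments typically face non-identically distributed client data, which is one of the factors that can affect model quality.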
