A Systematic Evaluation and Benchmark for Embedding-Aware Generative Models: Features, Models, and Any-shot Scenarios


Feb 16, 2023

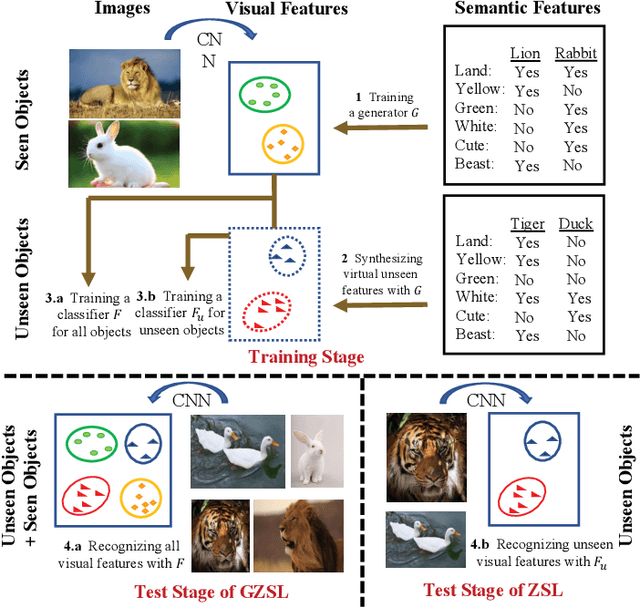

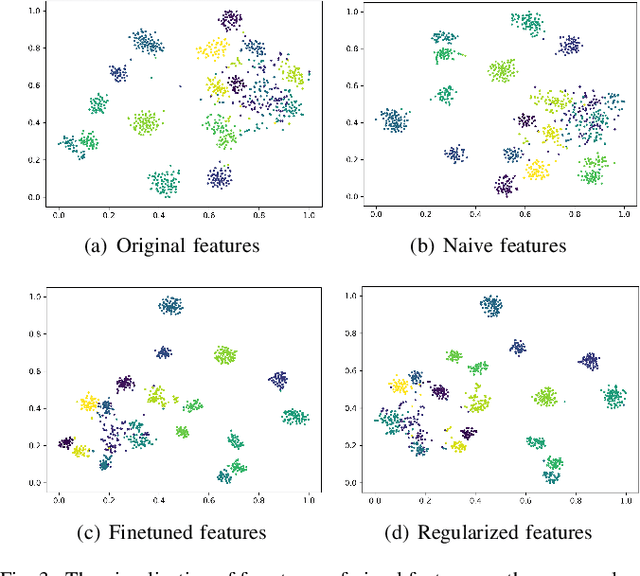

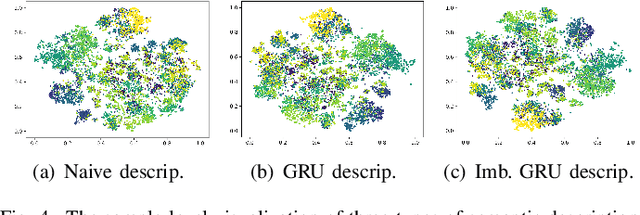

Embedding-aware generative models (EAGMs) address the data insufficiency problem in zero-shot learning (ZSL) by constructing a generator between the semantic and visual feature spaces. Thanks to predefined benchmarks and protocols, the number of proposed EAGMs for ZSL is increasing rapidly. We argue that it is time to take a step back and reconsider the embedding-aware generative paradigm. The contribution of this paper is two-fold. First, while most researchers focus on improving the EAGMs themselves, the embedding features in the benchmark datasets have been somewhat overlooked, which potentially limits the performance of EAGMs. We therefore conduct a systematic evaluation of ten representative EAGMs and show that even embarrassingly simple modifications to the embedding features can remarkably improve the performance of EAGMs for ZSL. The embedding features currently used in benchmark datasets thus deserve more attention. Second, on five benchmark datasets, each with six any-shot learning scenarios, we systematically compare the performance of ten typical EAGMs for the first time and provide a strong baseline for ZSL and few-shot learning (FSL). In addition, we provide a comprehensive generative model repository, the generative any-shot learning (GASL) repository, which contains the models, features, parameters, and scenarios of EAGMs for ZSL and FSL. Any result in this paper can be readily reproduced with a single command line based on GASL.
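To make the idea of a generator between the semantic and visual feature spaces concrete, the following is a minimal sketch and not one of the ten evaluated models or anything taken from the GASL repository. It assumes a plain ridge-regression map stands in for the generator (real EAGMs typically use GAN- or VAE-style networks), runs on purely synthetic toy data, and uses hypothetical function names throughout. Visual features are synthesized for unseen classes from their semantic vectors, and a nearest-centroid classifier is then fit on the synthetic features.

```python
import numpy as np


def fit_linear_generator(attrs, feats, ridge=1e-3):
    """Fit a ridge-regression map from semantic vectors (n, a) to visual features (n, d).

    Assumption: a linear map replaces the neural generator of an actual EAGM.
    """
    n_attr = attrs.shape[1]
    gram = attrs.T @ attrs + ridge * np.eye(n_attr)
    return np.linalg.solve(gram, attrs.T @ feats)  # shape (a, d)


def synthesize(weights, class_attrs, per_class, noise_std, rng):
    """Generate `per_class` noisy visual features for every class semantic vector."""
    means = class_attrs @ weights  # one mean feature per class, shape (c, d)
    feats = np.repeat(means, per_class, axis=0)
    feats = feats + noise_std * rng.standard_normal(feats.shape)
    labels = np.repeat(np.arange(len(class_attrs)), per_class)
    return feats, labels


def nearest_centroid_predict(train_x, train_y, test_x):
    """Assign each test feature to the class with the closest training centroid."""
    classes = np.unique(train_y)
    centroids = np.stack([train_x[train_y == c].mean(axis=0) for c in classes])
    dists = ((test_x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[dists.argmin(axis=1)]


def demo(seed=0):
    """Toy zero-shot run on synthetic data; returns accuracy on unseen classes."""
    rng = np.random.default_rng(seed)
    n_attr, n_dim = 8, 32
    # Hypothetical ground-truth relation between semantic and visual spaces.
    true_map = rng.standard_normal((n_attr, n_dim))
    seen_attrs = rng.standard_normal((20, n_attr))
    unseen_attrs = rng.standard_normal((5, n_attr))

    # Real (toy) features exist only for seen classes.
    seen_y = np.repeat(np.arange(20), 30)
    seen_x = seen_attrs[seen_y] @ true_map
    seen_x = seen_x + 0.1 * rng.standard_normal(seen_x.shape)

    # Train the generator on seen classes, then synthesize unseen-class features.
    weights = fit_linear_generator(seen_attrs[seen_y], seen_x)
    fake_x, fake_y = synthesize(weights, unseen_attrs, 50, 0.1, rng)

    # Evaluate on held-out toy features of the unseen classes.
    test_y = np.repeat(np.arange(5), 40)
    test_x = unseen_attrs[test_y] @ true_map
    test_x = test_x + 0.1 * rng.standard_normal(test_x.shape)
    pred = nearest_centroid_predict(fake_x, fake_y, test_x)
    return float((pred == test_y).mean())


if __name__ == "__main__":
    print("unseen-class accuracy on toy data:", demo())
```

The sketch only illustrates the data flow shared by this family of methods: learn a semantic-to-visual map on seen classes, generate features for classes without training images, and train an ordinary classifier on the generated features. In a few-shot scenario, the handful of real samples per class would presumably be combined with the synthetic ones before the classifier is trained.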
