A Study on Passage Re-ranking in Embedding based Unsupervised Semantic Search

May 28, 2018

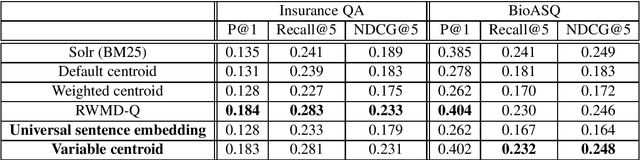

State-of-the-art approaches for embedding-based unsupervised semantic search exploit either compositional similarity (between a query and a passage) or pairwise word (or term) similarity (between words of the query and words of the passage). By design, word-based approaches do not incorporate similarity in the larger context (query/passage), while approaches based on compositional similarity are usually unable to take advantage of the most important cues in the context. In this paper we propose a new approach based on compositional similarity, called variable centroid vector (VCVB), that tries to address both of these limitations. We also present results using a different type of compositional similarity approach that exploits universal sentence embeddings. We provide an empirical evaluation on two different benchmarks.
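To make the two families of similarity concrete, the following is a minimal sketch, not the paper's VCVB method. It assumes a tiny hand-made embedding table (`TOY_EMBEDDINGS`), a plain mean centroid for compositional similarity, and a "best match per query term, then average" rule for pairwise word similarity. All names and vectors are hypothetical and chosen only for illustration.

```python
import math

# Toy 3-dimensional word vectors, invented purely for illustration.
TOY_EMBEDDINGS = {
    "cheap": [0.9, 0.1, 0.0],
    "inexpensive": [0.8, 0.2, 0.1],
    "flights": [0.1, 0.9, 0.1],
    "airfare": [0.2, 0.8, 0.2],
    "to": [0.3, 0.3, 0.3],
    "paris": [0.0, 0.2, 0.9],
}
DIM = 3


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def centroid(tokens):
    """Mean of the vectors of all known tokens (zero vector if none are known)."""
    vectors = [TOY_EMBEDDINGS[t] for t in tokens if t in TOY_EMBEDDINGS]
    if not vectors:
        return [0.0] * DIM
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def compositional_similarity(query, passage):
    """Compare one vector per text: cosine between the two centroids."""
    return cosine(centroid(query), centroid(passage))


def pairwise_word_similarity(query, passage):
    """For each query term take its best-matching passage term, then average."""
    q_vecs = [TOY_EMBEDDINGS[t] for t in query if t in TOY_EMBEDDINGS]
    p_vecs = [TOY_EMBEDDINGS[t] for t in passage if t in TOY_EMBEDDINGS]
    if not q_vecs or not p_vecs:
        return 0.0
    best = [max(cosine(q, p) for p in p_vecs) for q in q_vecs]
    return sum(best) / len(best)


query = ["cheap", "flights", "to", "paris"]
passages = [
    ["inexpensive", "airfare", "to", "paris"],
    ["cheap", "cheap", "cheap"],
]
# Re-rank the passages under each scoring function (highest score first).
by_centroid = sorted(passages, key=lambda p: compositional_similarity(query, p), reverse=True)
by_pairwise = sorted(passages, key=lambda p: pairwise_word_similarity(query, p), reverse=True)
```

Under these assumptions, the centroid score reflects the whole query and passage but weights every term equally, while the pairwise score rewards strong individual term matches without looking at the overall context. This is the trade-off the abstract describes.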
