A Structured Prediction Approach for Conditional Meta-Learning


Feb 20, 2020

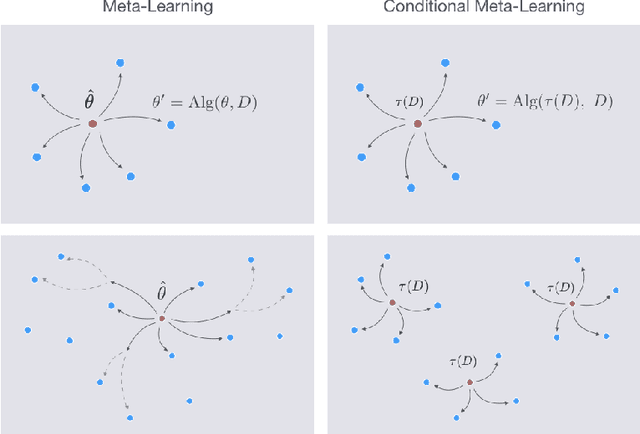

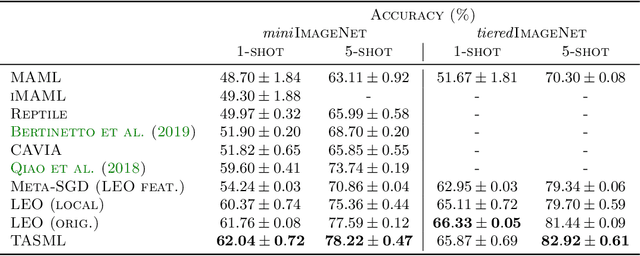

Optimization-based meta-learning algorithms are a powerful class of methods for learning-to-learn applications such as few-shot learning. They tackle the limited availability of training data by leveraging the experience gained from previously observed tasks. However, when the complexity of the task distribution cannot be captured by a single set of shared meta-parameters, existing methods may fail to fully adapt to a target task. We address this issue with a novel perspective on conditional meta-learning based on structured prediction. We propose task-adaptive structured meta-learning (TASML), a principled estimator that weighs meta-training data conditioned on the target task to design tailored meta-learning objectives. In addition, we introduce algorithmic improvements to tackle key computational limitations of existing methods. Experimentally, we show that TASML outperforms state-of-the-art methods on benchmark datasets in both accuracy and efficiency. An ablation study quantifies the individual contribution of each model component and suggests useful practices for meta-learning.
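To make the idea of weighing meta-training data conditioned on the target task more concrete, here is a minimal sketch. It is not taken from the abstract. It assumes each task is summarized by a fixed-length embedding vector and that the weights come from a kernel-ridge-style linear solve with a Gaussian kernel. The names `gaussian_kernel`, `task_weights`, `weighted_objective`, and the parameters `lam` and `sigma` are hypothetical and chosen only for illustration.

```python
import numpy as np


def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian kernel between the rows of a (n, d) and the rows of b (m, d)."""
    sq_dist = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq_dist / (2.0 * sigma ** 2))


def task_weights(train_emb, target_emb, lam=0.1, sigma=1.0):
    """Assumed weighting rule: alpha = (K + lam * I)^{-1} v.

    K holds kernel values between meta-training tasks, and v holds kernel
    values between each meta-training task and the target task.
    """
    K = gaussian_kernel(train_emb, train_emb, sigma)
    v = gaussian_kernel(train_emb, target_emb[None, :], sigma)[:, 0]
    return np.linalg.solve(K + lam * np.eye(len(train_emb)), v)


def weighted_objective(task_losses, alpha):
    """Task-conditioned objective: per-task losses combined with the weights."""
    return float(np.dot(alpha, task_losses))


# Four well-separated meta-training tasks (illustrative embeddings).
train_emb = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 4.0], [4.0, 4.0]])
# A target task that lies close to the second training task.
target_emb = np.array([3.9, 0.1])

alpha = task_weights(train_emb, target_emb)
# Placeholder per-task losses evaluated at some candidate meta-parameters.
task_losses = np.array([1.0, 2.0, 3.0, 4.0])
objective = weighted_objective(task_losses, alpha)
```

In this toy setup the largest weight falls on the second training task, the one closest to the target, so its loss dominates the tailored objective. A real system would need a learned task representation and a meta-learner that minimizes the weighted objective, both of which are outside the scope of this sketch.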
