A Secure Federated Data-Driven Evolutionary Multi-objective Optimization Algorithm


Oct 15, 2022

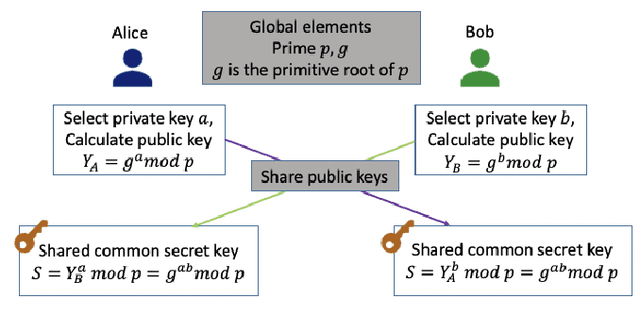

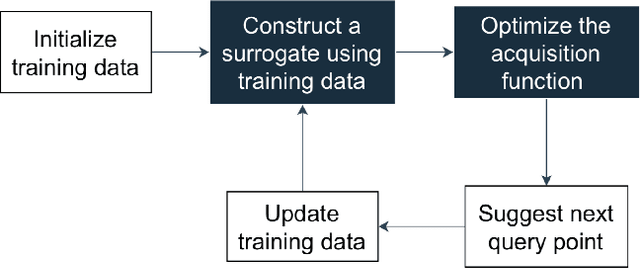

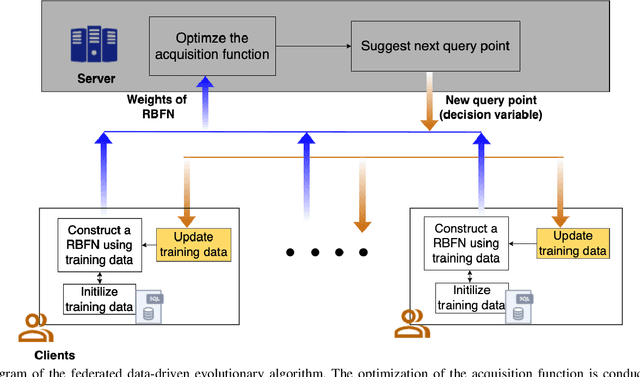

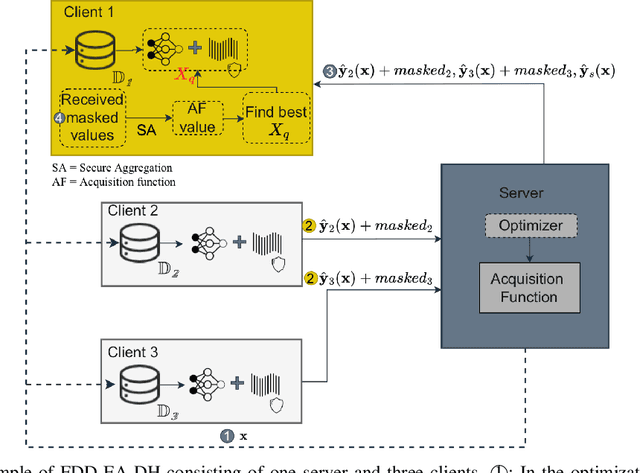

Data-driven evolutionary algorithms usually aim to exploit the information in a limited amount of data to perform optimization, and they have proved successful in solving many complex real-world optimization problems. However, most data-driven evolutionary algorithms are centralized, which raises privacy and security concerns. Existing federated Bayesian optimization and federated data-driven evolutionary algorithms mainly protect the raw data on each client, not the newly infilled solutions. To address this issue, this paper proposes a secure federated data-driven evolutionary multi-objective optimization algorithm that protects both the raw data and the newly infilled solutions obtained by optimizing the acquisition function on the server. At each round of surrogate update, we randomly select a client and choose the query points by calculating the acquisition function values of the unobserved points on that client, thereby reducing the risk of leaking information about the solution to be sampled. In addition, since the predicted objective values of each client may contain sensitive information, we mask them with Diffie-Hellman-based noise and send only the masked objective values of the other clients to the selected client via the server. Because calculating the acquisition function requires both the predicted objective values and the uncertainty of the predictions, the predicted mean objectives and uncertainties are normalized to reduce the influence of the noise. Experimental results on a set of widely used multi-objective optimization benchmarks show that the proposed algorithm protects privacy and enhances security with only a negligible sacrifice in the performance of federated data-driven evolutionary optimization.
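The idea of masking predicted objective values with Diffie-Hellman-based noise can be illustrated with a minimal sketch of pairwise masking in the style of secure aggregation: each pair of clients derives a shared seed from a Diffie-Hellman exchange and expands it into noise, and one client of the pair adds that noise while the other subtracts it. Individual masked vectors then look like noise, yet the noise cancels when all masked vectors are summed. This is a generic illustration under stated assumptions, not the authors' protocol: the abstract does not specify how the masks are constructed or whether they cancel exactly. The group parameters are insecure demo values, and the function names (`dh_keypair`, `shared_seed`, `pairwise_mask`, `mask_predictions`, `column_mean`) are hypothetical.

```python
import hashlib
import random
import secrets

# Toy Diffie-Hellman group (assumption, demo only): the Mersenne prime
# 2**127 - 1 and base 3. Real systems use standardized groups or curves.
P = 2**127 - 1
G = 3


def dh_keypair():
    """Return a (secret, public) Diffie-Hellman key pair."""
    secret = secrets.randbelow(P - 3) + 2  # secret in [2, P - 2]
    return secret, pow(G, secret, P)


def shared_seed(my_secret, their_public):
    """Derive a seed from g^(ab) mod P; both peers obtain the same value."""
    shared = pow(their_public, my_secret, P)
    digest = hashlib.sha256(str(shared).encode()).digest()
    return int.from_bytes(digest, "big")


def pairwise_mask(seed, n, scale=100.0):
    """Expand a shared seed into n pseudo-random noise values."""
    rng = random.Random(seed)
    return [rng.uniform(-scale, scale) for _ in range(n)]


def mask_predictions(i, my_secret, preds, publics):
    """Client i adds the pair mask for peers j > i and subtracts it for j < i,
    so every pair's noise cancels when all masked vectors are summed."""
    masked = list(preds)
    for j, their_public in enumerate(publics):
        if j == i:
            continue
        noise = pairwise_mask(shared_seed(my_secret, their_public), len(preds))
        sign = 1.0 if j > i else -1.0
        masked = [m + sign * z for m, z in zip(masked, noise)]
    return masked


def column_mean(vectors):
    """Element-wise mean over clients (e.g. computed by the selected client)."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


if __name__ == "__main__":
    # Hypothetical predicted objective values: 3 clients x 4 candidate points.
    preds = [[0.2, 0.5, 0.9, 0.1],
             [0.3, 0.4, 0.8, 0.2],
             [0.1, 0.6, 0.7, 0.3]]
    keys = [dh_keypair() for _ in preds]
    publics = [pub for _, pub in keys]
    masked = [mask_predictions(i, sec, p, publics)
              for i, ((sec, _), p) in enumerate(zip(keys, preds))]
    print("masked client 0:", [round(v, 3) for v in masked[0]])
    print("true mean:  ", [round(v, 6) for v in column_mean(preds)])
    print("masked mean:", [round(v, 6) for v in column_mean(masked)])
```

Because each pair's noise is added by one client and subtracted by its peer, the masked mean matches the true mean up to floating-point rounding, while any single masked vector reveals little about that client's predictions.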
