A Robust Non-Linear and Feature-Selection Image Fusion Theory

Paper and Code

Dec 23, 2019

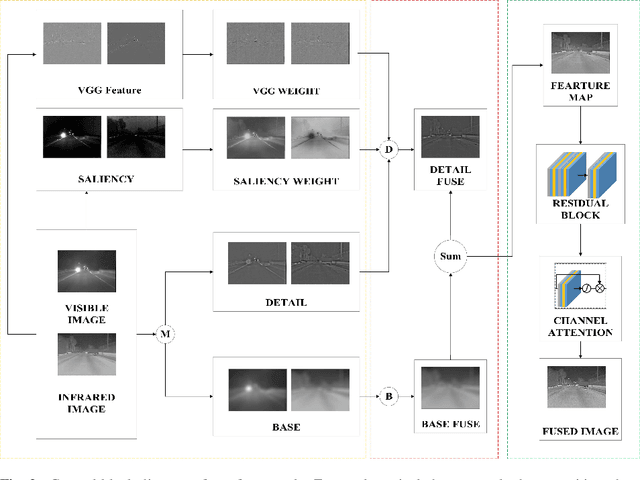

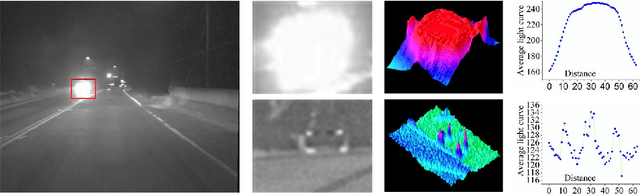

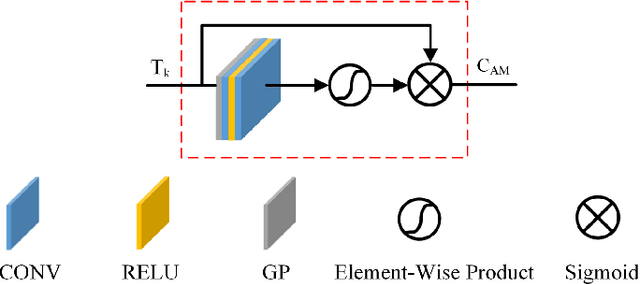

The human visual perception system is highly robust at image fusion. This robustness stems from two of its characteristics: feature selection and the non-linear fusion of different features. To simulate this mechanism in image fusion tasks, we propose a multi-source image fusion framework that combines illuminance factors with attention mechanisms. The framework combines traditional image features with modern deep learning features. First, we perform multi-scale decomposition of the multi-source images. Then, the visual saliency map and the deep feature map are combined with the illuminance fusion factor to perform non-linear fusion of the high- and low-frequency components. Next, the fused high- and low-frequency features are selected by a channel attention network to obtain the final fusion map. Because the framework simulates both the non-linear and the selective characteristics of the human visual perception system, the fused image is more consistent with human visual perception. Finally, we validate our fusion framework on public datasets of infrared and visible images, medical images, and multi-focus images. The experimental results demonstrate the superiority of our fusion framework over state-of-the-art methods in visual quality, objective fusion metrics, and robustness.
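The decomposition and non-linear fusion steps described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation, and every specific in it is an assumption made for illustration: a single box-filter split stands in for the multi-scale decomposition, the mean brightness of the first image stands in for the illuminance fusion factor, a sigmoid supplies the non-linearity, and a max-absolute rule fuses the high-frequency layers. The saliency map, deep features, and channel attention network are omitted. The names `box_blur` and `fuse_two_scale` are hypothetical.

```python
import numpy as np


def box_blur(img, k=5):
    """Mean filter with edge padding (assumed stand-in for a low-pass stage)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros(img.shape, dtype=np.float64)
    # Sum the k*k shifted copies of the padded image, then average.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)


def fuse_two_scale(a, b, k=5, steepness=10.0):
    """Fuse two grayscale images in [0, 1] (illustrative sketch only)."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)

    # Split each image into a low-frequency base and a high-frequency detail.
    low_a, low_b = box_blur(a, k), box_blur(b, k)
    high_a, high_b = a - low_a, b - low_b

    # Assumed illuminance factor: global mean brightness of image a.
    illum = float(np.clip(a.mean(), 0.0, 1.0))
    # Non-linear (sigmoid) weight derived from the illuminance factor.
    w = 1.0 / (1.0 + np.exp(-steepness * (illum - 0.5)))

    # Low frequencies: weighted blend. High frequencies: keep the stronger detail.
    low = w * low_a + (1.0 - w) * low_b
    high = np.where(np.abs(high_a) >= np.abs(high_b), high_a, high_b)
    return low + high


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    visible = rng.random((32, 32))
    infrared = rng.random((32, 32))
    fused = fuse_two_scale(visible, infrared)
    print(fused.shape)
```

Under these assumptions a brighter first image pulls the low-frequency blend toward itself, while fine detail is taken from whichever source has the stronger local response. A learned channel attention stage would replace these fixed rules with data-driven selection.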
