A Remark on Concept Drift for Dependent Data

Dec 15, 2023

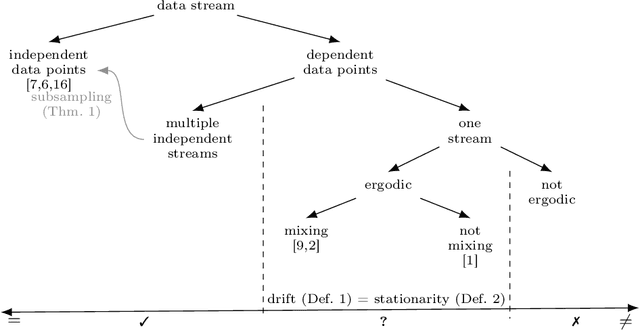

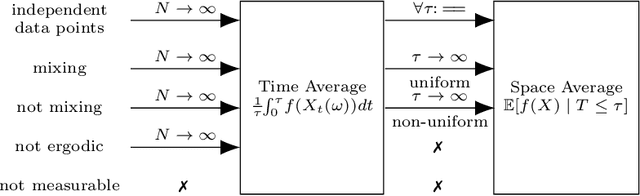

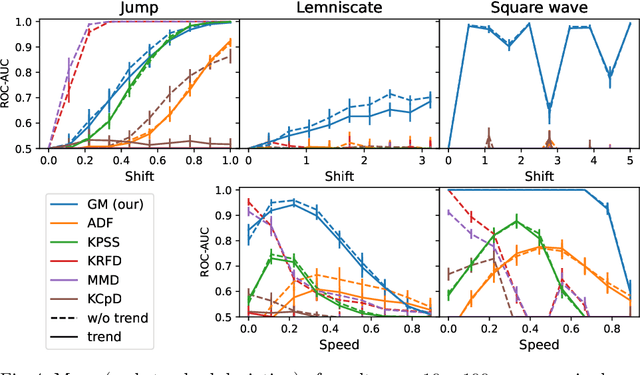

Concept drift, i.e., a change in the data-generating distribution, can render machine learning models inaccurate. Several works address concept drift in the streaming setting, usually assuming that consecutive data points are independent of each other. To generalize to dependent data, many authors link the notion of concept drift to time series. In this work, we show that temporal dependencies strongly influence the sampling process, so the definitions in use need major modifications. In particular, we show that the notion of stationarity is not suited to this setup and discuss alternatives. We demonstrate in numerical experiments that these alternative formal notions describe the observable learning behavior.
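The claim that temporal dependence influences the sampling process can be illustrated with a minimal sketch. This is not the paper's experiment. It assumes a stationary AR(1) process as the dependent stream, a coefficient `phi = 0.95`, and non-overlapping windows of width 100; all of these, and the helper names `ar1_stream` and `window_means`, are hypothetical choices made for illustration. Both streams share the same standard normal marginal and neither drifts, yet the window means of the dependent stream fluctuate far more, which a detector built on the independence assumption could misread as drift.

```python
import random
import statistics


def ar1_stream(n, phi, rng):
    """Stationary AR(1) stream with zero mean and unit marginal variance.

    Illustrative assumption: the paper does not prescribe this process.
    """
    noise_sd = (1.0 - phi ** 2) ** 0.5  # keeps the marginal variance at 1
    x = rng.gauss(0.0, 1.0)             # start in the stationary distribution
    out = []
    for _ in range(n):
        out.append(x)
        x = phi * x + rng.gauss(0.0, noise_sd)
    return out


def window_means(stream, width):
    """Means of consecutive, non-overlapping windows of the given width."""
    return [
        statistics.fmean(stream[i:i + width])
        for i in range(0, len(stream) - width + 1, width)
    ]


rng = random.Random(0)
n, width = 20000, 100

# Independent stream and dependent stream with the same N(0, 1) marginal.
iid = [rng.gauss(0.0, 1.0) for _ in range(n)]
dep = ar1_stream(n, phi=0.95, rng=rng)

# Spread of the window means: what a window-based monitor would observe.
iid_spread = statistics.pstdev(window_means(iid, width))
dep_spread = statistics.pstdev(window_means(dep, width))

print(f"spread of window means, independent stream: {iid_spread:.3f}")
print(f"spread of window means, dependent stream:   {dep_spread:.3f}")
```

The comparison of window means is only a stand-in for a drift detector's test statistic; any threshold calibrated on the independent stream would be exceeded much more often on the dependent one, even though its distribution never changes.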