A Principled Method for the Creation of Synthetic Multi-fidelity Data Sets


Aug 26, 2022

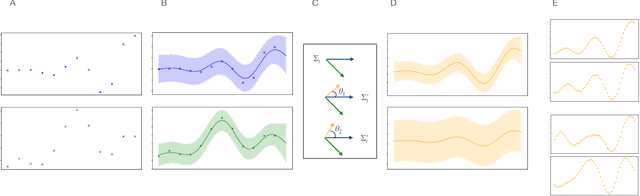

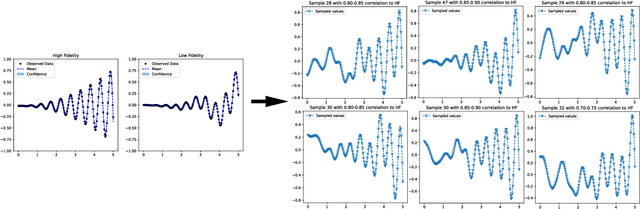

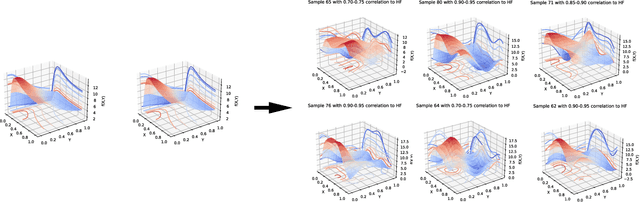

Multifidelity and multioutput optimisation algorithms are of active interest in many areas of computational design because they allow cheaper computational proxies to be used intelligently to aid experimental searches for high-performing species. These algorithms are typically characterised with benchmarks that use either analytic functions or existing multifidelity datasets. However, analytic functions are often not representative of relevant problems, while preexisting datasets do not allow systematic investigation of the influence of the characteristics of the lower-fidelity proxies. To bridge this gap, we present a methodology for the systematic generation of synthetic fidelities derived from preexisting datasets. This allows the construction of benchmarks that are representative of practical optimisation problems and that also allow systematic investigation of the influence of the lower-fidelity proxies.
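
To make the idea of a synthetic fidelity concrete, the sketch below derives a lower-fidelity proxy from an existing column of high-fidelity values by mixing in random noise until a chosen Pearson correlation is reached. This is an illustrative assumption, not necessarily the procedure used in the paper: the function name `synthetic_fidelity`, the `target_corr` parameter, and the choice of correlation as the controlled characteristic are all hypothetical.

```python
import numpy as np


def synthetic_fidelity(y_high, target_corr, seed=0):
    """Return a noisy proxy of y_high with a prescribed sample correlation.

    Hypothetical helper for illustration only; correlation is assumed to be
    the characteristic of the proxy that we want to control.
    """
    if not -1.0 <= target_corr <= 1.0:
        raise ValueError("target_corr must lie in [-1, 1]")

    y = np.asarray(y_high, dtype=float)
    if y.ndim != 1 or y.size < 3:
        raise ValueError("y_high must be a 1-D array with at least 3 values")

    centred = y - y.mean()
    scale = np.linalg.norm(centred)
    if scale == 0.0:
        raise ValueError("y_high must not be constant")
    u = centred / scale  # unit-norm, zero-mean direction of the real data

    # Draw noise, then remove its mean and its component along the data so
    # that it is exactly uncorrelated with y_high in this sample.
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal(y.shape)
    noise -= noise.mean()
    noise -= (noise @ u) * u
    v = noise / np.linalg.norm(noise)

    # Mix signal and noise; the result has unit norm and correlation
    # target_corr with y_high.
    mixed = target_corr * u + np.sqrt(1.0 - target_corr**2) * v

    # Restore the mean and spread of the original data.
    return y.mean() + scale * mixed


# Usage: sweep the correlation to build a family of proxies for one dataset.
y_high = np.linspace(0.0, 1.0, 50) ** 2
proxies = {rho: synthetic_fidelity(y_high, rho) for rho in (0.3, 0.6, 0.9)}
```

Removing the noise component along the data makes the sample correlation match the target exactly rather than only in expectation, which keeps a sweep over proxy quality reproducible for small datasets.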
