A Neural Network Based On-device Learning Anomaly Detector for Edge Devices


Aug 28, 2019

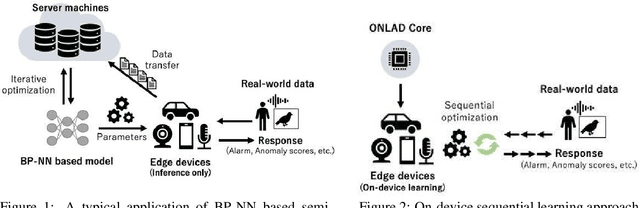

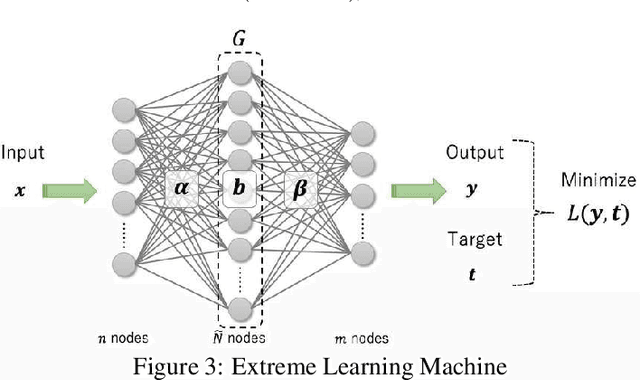

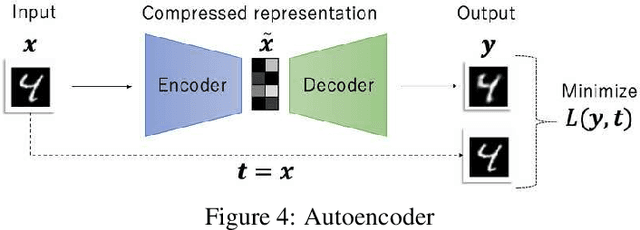

Semi-supervised anomaly detection refers to an approach that identifies rare data instances (i.e., anomalies) on the assumption that all available training data belong to the majority (i.e., the normal class). A typical strategy is to model the distribution of normal data and then flag samples that lie far from that distribution as anomalies. Backpropagation-based neural networks (BP-NNs) have also been drawing attention in the field of semi-supervised anomaly detection because of their high generalization capability on real-world, high-dimensional data. In a typical application, such BP-NN based models are iteratively optimized on server machines using accumulated data gathered from edge devices. However, this framework has two issues: (1) the iterative optimization of BP-NNs often takes too long to follow changes in the distribution of normal data (i.e., concept drift), and (2) data transfers between servers and edge devices carry a risk of data breaches. To address these issues, we propose an ON-device sequential Learning semi-supervised Anomaly Detector called ONLAD. The aim of this work is to propose the algorithm and to implement it as an IP core, called ONLAD Core, so that various kinds of edge devices can adopt our approach at low power consumption. Experimental results on open datasets show that ONLAD has favorable anomaly detection capability, especially in a testbed that simulates concept drift. Experimental results on the hardware performance of the FPGA-based ONLAD Core show that its training latency and prediction latency are 1.95x to 4.51x and 2.29x to 4.73x faster, respectively, than those of BP-NN based software implementations. We also confirm that our on-board implementation of ONLAD Core runs at 6.7x to 27.1x lower power consumption than the other software implementations under a high workload.
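The general strategy described in the abstract (model normal data, score samples by their distance from that model, and keep updating the model one sample at a time so it can follow concept drift) can be illustrated with a minimal sketch. This is not the ONLAD algorithm, which the abstract does not specify. It swaps in a much simpler per-feature Gaussian model with exponential forgetting, and the class name `RunningGaussianDetector` and its parameters `forgetting` and `eps` are hypothetical names chosen for illustration.

```python
import math


class RunningGaussianDetector:
    """Toy sequential anomaly scorer (illustrative assumption, not ONLAD).

    Keeps a per-feature mean and variance of normal data and updates them
    one sample at a time, so no training data has to be stored or sent
    off the device.
    """

    def __init__(self, dim, forgetting=0.99, eps=1e-6):
        self.mean = [0.0] * dim
        self.var = [1.0] * dim
        self.forgetting = forgetting  # closer to 1.0 means slower adaptation
        self.eps = eps                # avoids division by zero in score()
        self.count = 0

    def score(self, x):
        """Root-mean-square z-score: larger means farther from normal data."""
        total = sum(
            (xi - m) ** 2 / (v + self.eps)
            for xi, m, v in zip(x, self.mean, self.var)
        )
        return math.sqrt(total / len(x))

    def update(self, x):
        """Sequentially fold one normal sample into the model."""
        self.count += 1
        # Plain running average for the first samples, then a fixed
        # exponential forgetting rate so old data gradually fades out.
        alpha = max(1.0 - self.forgetting, 1.0 / self.count)
        for i, xi in enumerate(x):
            delta = xi - self.mean[i]
            self.mean[i] += alpha * delta
            self.var[i] = (1.0 - alpha) * (self.var[i] + alpha * delta * delta)


# Usage: train on normal samples near 0, then score new samples.
detector = RunningGaussianDetector(dim=2, forgetting=0.9)
for k in range(50):
    s = 0.1 if k % 2 == 0 else -0.1
    detector.update([s, -s])

normal_score = detector.score([0.05, -0.05])
anomaly_score = detector.score([3.0, 3.0])
is_anomaly = anomaly_score > normal_score  # a real detector would use a tuned threshold
```

Because each update touches only the current sample, the model can keep adapting when the normal distribution shifts: feeding it samples from the new regime lowers their scores over time, which is the behavior a concept-drift testbed is meant to exercise.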
