A Modified Drake Equation for Assessing Adversarial Risk to Machine Learning Models

Paper and Code

Mar 03, 2021

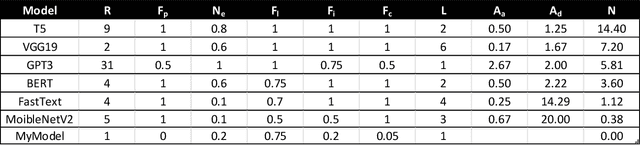

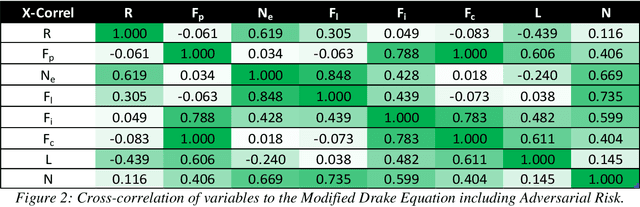

Every machine learning model deployed into production carries some risk of adversarial attack. Quantifying the contributing factors and their uncertainties with empirical measures could help the industry assess the risk of downloading and deploying common machine learning model types. The Drake Equation is famous for parameterizing uncertainties in order to estimate the number of radio-capable extraterrestrial civilizations. This work proposes modifying the traditional Drake Equation's formalism to estimate the number of potentially successful adversarial attacks on a deployed model. While previous work has outlined methods for discovering vulnerabilities in public model architectures, the proposed equation seeks to provide a semi-quantitative benchmark for evaluating the potential risk factors of adversarial attacks.
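
For reference, the traditional Drake Equation that the abstract refers to is a simple product of factors. It is reproduced here in its standard astronomical form only. The modified, adversarial-risk version and the meaning of its factors are defined in the paper itself and are not restated here.

$$
N = R_{*} \cdot f_{p} \cdot n_{e} \cdot f_{l} \cdot f_{i} \cdot f_{c} \cdot L
$$

Here $N$ is the estimated number of detectable civilizations, $R_{*}$ the rate of star formation, $f_{p}$ the fraction of stars with planets, $n_{e}$ the number of potentially habitable planets per such system, $f_{l}$, $f_{i}$, and $f_{c}$ the fractions that develop life, intelligence, and detectable communication respectively, and $L$ the length of time over which signals are emitted. Because the estimate is a product, uncertainty in any single factor carries through proportionally to $N$, which is why the abstract describes the result as a semi-quantitative benchmark rather than a precise count.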
