A MIMO Radar-based Few-Shot Learning Approach for Human-ID


Oct 16, 2021

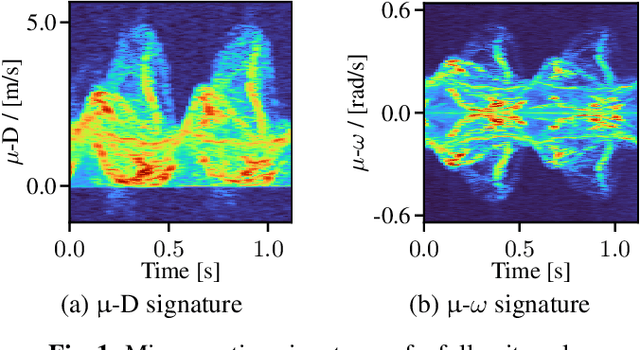

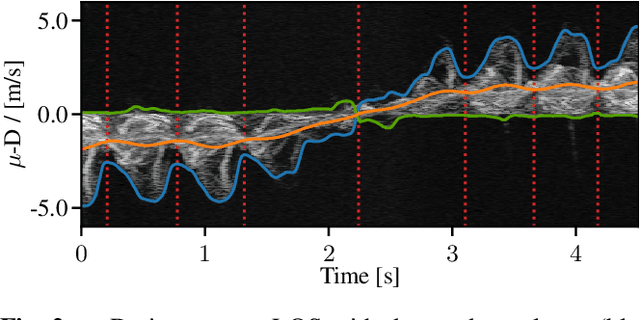

Radar-based human identification using deep learning has become a research area of increasing interest. It has been shown that micro-Doppler (\(\upmu\)-D) signatures can reflect walking behavior by capturing the periodic micro-motions of the limbs. A key challenge is maximizing the number of included classes while respecting real-time and training dataset size constraints. In this paper, a multiple-input-multiple-output (MIMO) radar is used to formulate micro-motion spectrograms of the elevation angular velocity (\(\upmu\)-\(\omega\)). The effectiveness of concatenating this newly formulated spectrogram with the commonly used \(\upmu\)-D spectrogram is investigated. To accommodate non-constrained, realistic walking motion, an adaptive cycle segmentation framework is utilized, and a metric learning network is trained on half gait cycles (\(\approx\) 0.5 s). Studies are conducted on the effects of the number of classes (5--20), different dataset sizes, and varying observation time windows (1--2 s). A non-constrained walking dataset of 22 subjects is collected at different aspect angles with respect to the radar. The proposed few-shot learning (FSL) approach achieves a classification error of 11.3 % with only 2 min of training data per subject.
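
To make the few-shot idea concrete, the following is a minimal sketch of one common metric-based scheme: nearest-prototype classification over concatenated feature vectors. It is an illustration under stated assumptions, not the paper's implementation. The abstract does not specify the network architecture or the distance rule, so the prototype averaging, the Euclidean distance, and the names `concat_features`, `build_prototypes`, and `classify` are all hypothetical. Short hand-written vectors stand in for the embeddings a trained metric learning network would produce from \(\upmu\)-D and \(\upmu\)-\(\omega\) spectrograms.

```python
import math
from collections import defaultdict


def concat_features(mu_d, mu_omega):
    """Concatenate two flattened feature vectors (hypothetical stand-ins
    for micro-Doppler and angular-velocity spectrogram embeddings)."""
    return list(mu_d) + list(mu_omega)


def build_prototypes(support):
    """Average the support vectors of each class into one prototype.

    `support` is a list of (label, vector) pairs, a few per subject.
    """
    grouped = defaultdict(list)
    for label, vec in support:
        grouped[label].append(vec)
    return {
        label: [sum(col) / len(vecs) for col in zip(*vecs)]
        for label, vecs in grouped.items()
    }


def classify(query, prototypes):
    """Return the label whose prototype is nearest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))


# Toy data: two subjects, two support samples each (values are made up).
support = [
    ("subject_a", concat_features([0.0, 0.1], [1.0, 0.9])),
    ("subject_a", concat_features([0.2, 0.1], [1.0, 1.1])),
    ("subject_b", concat_features([1.0, 0.9], [0.0, 0.1])),
    ("subject_b", concat_features([0.8, 1.1], [0.2, 0.1])),
]
prototypes = build_prototypes(support)
predicted = classify(concat_features([0.1, 0.0], [0.9, 1.0]), prototypes)
```

In this scheme, enrolling a new subject only requires computing one more prototype from a few samples, with no retraining of the embedding network, which is why metric-based approaches are a natural fit when training data per subject is limited.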
