A method to segment maps from different modalities using free space layout - MAORIS: MAp Of RIpples Segmentation


Sep 28, 2017

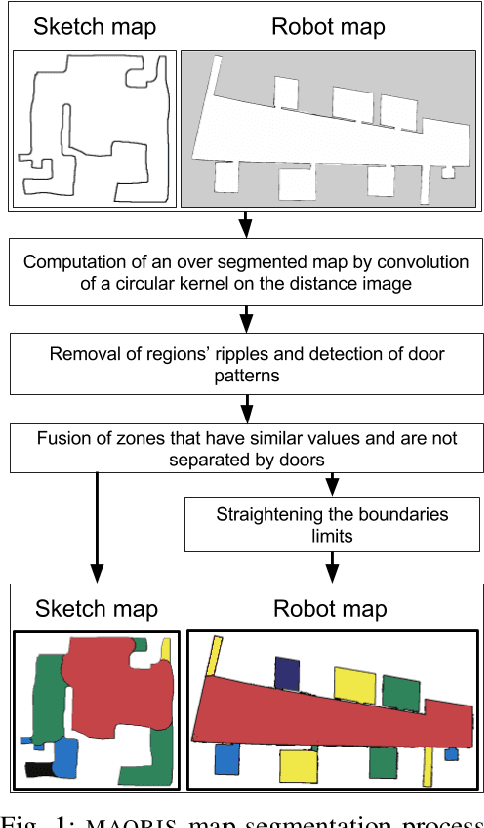

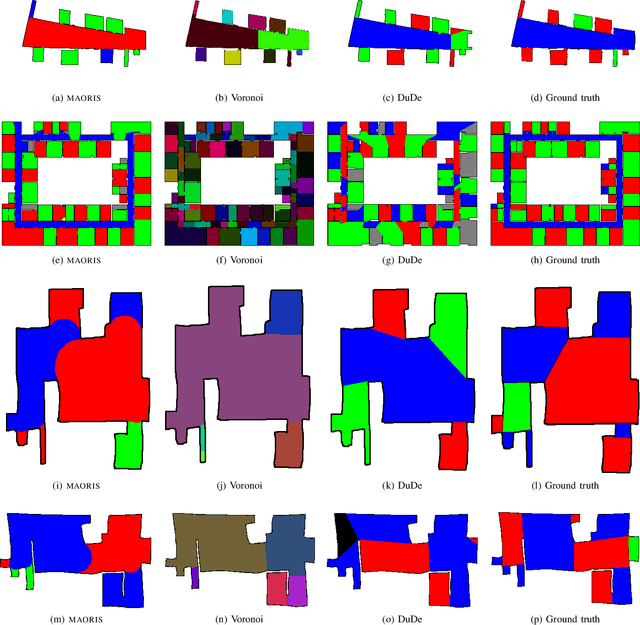

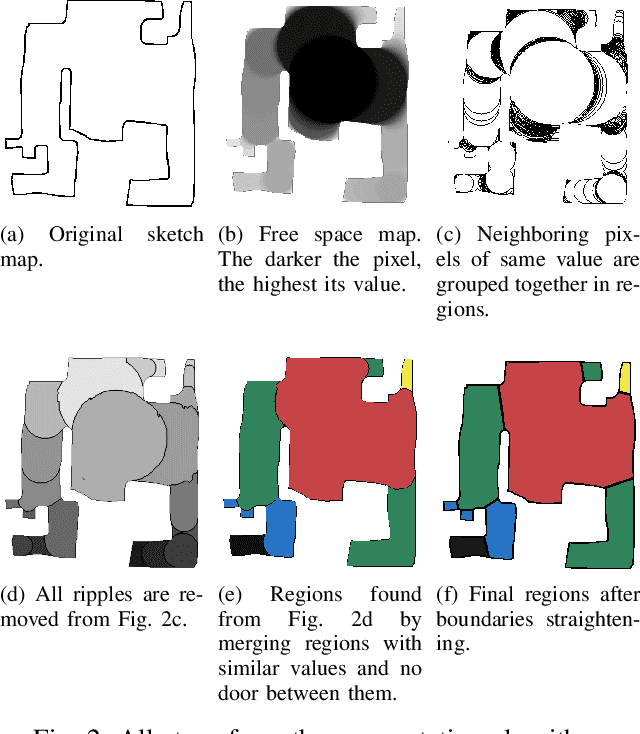

How to divide floor plans or navigation maps into a semantic representation, such as rooms and corridors, is an important research question in fields such as human-robot interaction, place categorization, and semantic mapping. While most algorithms focus on segmenting robot-built maps, these are not the only type of map a robot, or its user, can use. We have developed a method for segmenting maps from different modalities, focusing on robot-built maps and hand-drawn sketch maps, and show better results than the state of the art for both types. Our method convolves the distance image of the map with a circular kernel and groups pixels of the same value. The map is then segmented by detecting ripple-like patterns, where pixel values change quickly, and by merging neighboring regions with similar values. We identify a flaw in the segmentation evaluation metric used in recent works and propose a more consistent metric. We compare our results to ground-truth segmentations of maps from a publicly available dataset, on which we obtain a better Matthews correlation coefficient (MCC) than the state of the art: 0.98, compared to 0.85 for a recent Voronoi-based segmentation method and 0.78 for the DuDe segmentation method. We also provide a dataset of sketches of an indoor environment, with two possible sets of ground-truth segmentations, on which our method obtains an MCC of 0.82, against 0.40 for the Voronoi-based segmentation method and 0.45 for DuDe.
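The abstract describes the pipeline only at a high level. The Python sketch that follows illustrates one possible reading of it, and every detail beyond the abstract is an assumption: the map is a character grid (`#` obstacle, `.` free); the distance image is a brute-force Euclidean distance to the nearest obstacle; the circular-kernel step is modeled as each free pixel spreading its own distance value over a disc of that radius, with every pixel keeping the maximum it receives (a max operation rather than a weighted sum); and "similar values" means an absolute difference of at most a hypothetical `threshold`. The function names and the toy map `ROOMS` are illustrative and are not the authors' code.

```python
import math
from collections import deque

# Toy map (hypothetical): '#' = obstacle, '.' = free space.
# Two 5x5 rooms joined by a three-cell corridor (row 3, columns 6-8).
ROOMS = [
    "###############",
    "#.....###.....#",
    "#.....###.....#",
    "#.............#",
    "#.....###.....#",
    "#.....###.....#",
    "###############",
]


def distance_image(grid):
    """Euclidean distance from each free cell to the nearest obstacle (brute force)."""
    obstacles = [(r, c) for r, row in enumerate(grid)
                 for c, ch in enumerate(row) if ch == "#"]
    if not obstacles:
        raise ValueError("map needs at least one obstacle cell")
    dist = {}
    for r, row in enumerate(grid):
        for c, ch in enumerate(row):
            if ch == ".":
                # Rounded so that equal distances compare equal as floats.
                dist[(r, c)] = round(min(math.hypot(r - orow, c - ocol)
                                         for orow, ocol in obstacles), 6)
    return dist


def ripple_image(dist):
    """Assumed circular-kernel step: each free cell p spreads its distance d(p)
    over the disc of radius d(p) centred on p; every cell keeps the largest
    value it receives. Walls inside the disc are ignored in this sketch."""
    ripple = dict(dist)
    for (r, c), d in dist.items():
        k = int(d)
        for dr in range(-k, k + 1):
            for dc in range(-k, k + 1):
                q = (r + dr, c + dc)
                if q in ripple and math.hypot(dr, dc) <= d:
                    ripple[q] = max(ripple[q], d)
    return ripple


def group_equal_values(ripple):
    """4-connected components of cells that share exactly the same ripple value."""
    label, regions = {}, []
    for start in ripple:
        if start in label:
            continue
        idx = len(regions)
        label[start] = idx
        queue, cells = deque([start]), []
        while queue:
            r, c = queue.popleft()
            cells.append((r, c))
            for q in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if q in ripple and q not in label and ripple[q] == ripple[start]:
                    label[q] = idx
                    queue.append(q)
        regions.append(cells)
    return label, regions


def merge_similar(ripple, label, regions, threshold):
    """Union adjacent regions whose values differ by at most `threshold`
    (hypothetical merge rule); returns a segment id for every free cell."""
    parent = list(range(len(regions)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    value = [ripple[cells[0]] for cells in regions]
    for (r, c), a in label.items():
        for q in ((r + 1, c), (r, c + 1)):
            b = label.get(q)
            if b is not None and b != a and abs(value[a] - value[b]) <= threshold:
                parent[find(a)] = find(b)
    return {cell: find(a) for cell, a in label.items()}


def segment(grid, threshold=0.5):
    """Distance image -> ripple image -> equal-value regions -> merged segments."""
    ripple = ripple_image(distance_image(grid))
    label, regions = group_equal_values(ripple)
    return merge_similar(ripple, label, regions, threshold)


if __name__ == "__main__":
    seg = segment(ROOMS)
    print(len(set(seg.values())))  # 3: left room, middle corridor cell, right room
```

In this sketch, each room's widest point spreads its value over the whole room, so rooms become plateaus of equal value while the narrow corridor keeps a small one; the sharp drop between them is where the grouping step cuts. With `threshold=0.5` the toy map yields three segments (the left room, the middle corridor cell, the right room), whereas `threshold=2.5` merges everything into a single segment, which shows how much the result depends on the merge criterion.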
