A Learning Based Framework for Handling Uncertain Lead Times in Multi-Product Inventory Management

Paper and Code

Mar 09, 2022

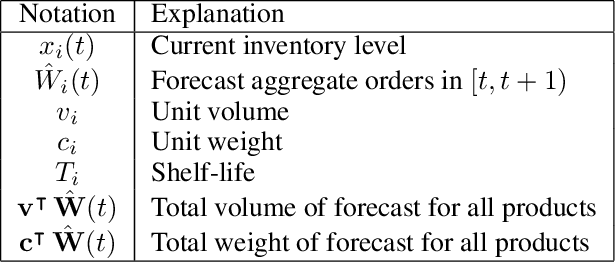

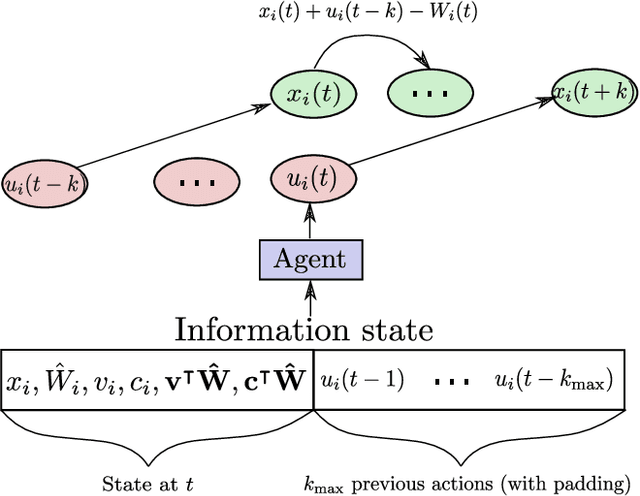

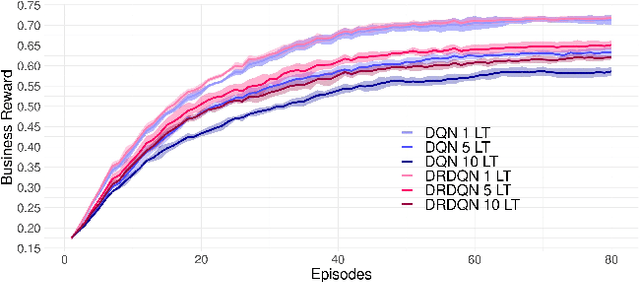

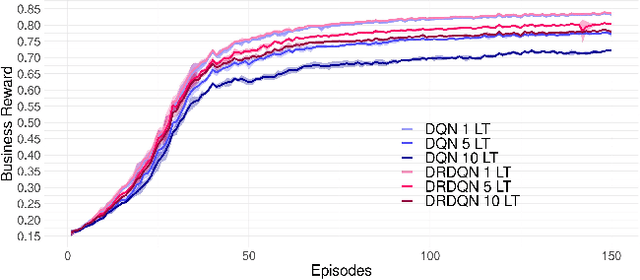

Most existing literature on supply chain and inventory management considers stochastic demand processes with zero or constant lead times. While uncertainty in lead times can be ignored in certain niche scenarios, most real-world scenarios exhibit stochastic lead times. These random fluctuations can be caused by uncertainty in the arrival of raw materials at the manufacturer's end, delays in transportation, an unforeseen surge in demand, or a switch to a different vendor, to name a few. Stochasticity in lead times is known to severely degrade the performance of an inventory management system, and it is only fair to bridge this gap in supply chain systems through a principled approach. Motivated by the recently introduced delay-resolved deep Q-learning (DRDQN) algorithm, this paper develops a reinforcement learning based paradigm for handling uncertainty in lead times (action delay). Through empirical evaluations, it is further shown that inventory management with uncertain lead times is not only equivalent to inventory management with delayed information sharing across multiple echelons (observation delay), but that a model trained to handle one kind of delay can also handle delays of the other kind without being retrained. Finally, we apply the delay-resolved framework to scenarios comprising multiple products subject to stochastic lead times, and elucidate how the delay-resolved framework negates the effect of any delay to achieve near-optimal performance.
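To make the notion of action delay concrete, the following is a minimal sketch of a single-product inventory simulator in which each replenishment order arrives after a random lead time. It is not the paper's DRDQN implementation. It assumes that a delay-resolved observation amounts to the on-hand stock augmented with the order quantities still in transit, and the class name `DelayedInventoryEnv`, the uniform lead-time distribution, and the cost coefficients are all hypothetical choices made for illustration.

```python
import random


class DelayedInventoryEnv:
    """Toy single-product inventory with random lead times (illustrative only)."""

    def __init__(self, max_lead=3, on_hand=20, seed=0):
        self.max_lead = max_lead
        self.on_hand = on_hand
        self.rng = random.Random(seed)
        # One slot per recent period: [quantity in transit, periods until arrival].
        self.pending = [[0, 0] for _ in range(max_lead)]

    def augmented_state(self):
        # The agent sees what is in transit, but not when it will arrive.
        return (self.on_hand, *(qty for qty, _ in self.pending))

    def step(self, order_qty, demand):
        # 1. Age outstanding orders and receive those that are due.
        for slot in self.pending:
            if slot[0] > 0:
                slot[1] -= 1
                if slot[1] == 0:
                    self.on_hand += slot[0]
                    slot[0] = 0

        # 2. Serve demand from stock; unmet demand is lost.
        sold = min(self.on_hand, demand)
        self.on_hand -= sold
        lost = demand - sold

        # 3. Place the new order with a random lead time in 1..max_lead.
        #    The oldest slot has always arrived by now, so dropping it is safe.
        lead = self.rng.randint(1, self.max_lead)
        self.pending.pop(0)
        self.pending.append([order_qty, lead])

        # Hypothetical reward: unit revenue, holding cost, lost-sales penalty.
        reward = 1.0 * sold - 0.1 * self.on_hand - 2.0 * lost
        return self.augmented_state(), reward, sold


if __name__ == "__main__":
    env = DelayedInventoryEnv(max_lead=3, on_hand=10, seed=1)
    for t in range(5):
        state, reward, sold = env.step(order_qty=4, demand=5)
        print(t, state, round(reward, 2))
```

Because the augmented state lists every order that has been placed but not yet received, a policy conditioned on it can account for stock that is already on its way, which is the intuition behind treating uncertain lead times as delayed actions.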
