A Latent Clothing Attribute Approach for Human Pose Estimation

Nov 16, 2014

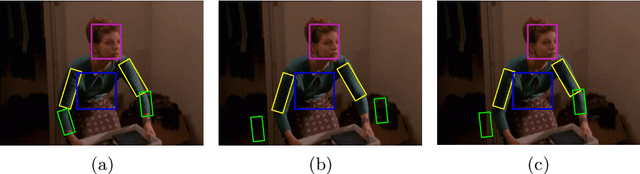

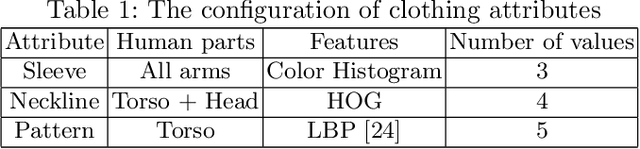

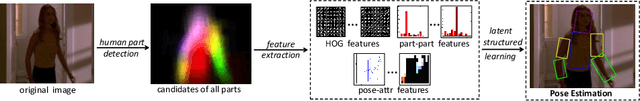

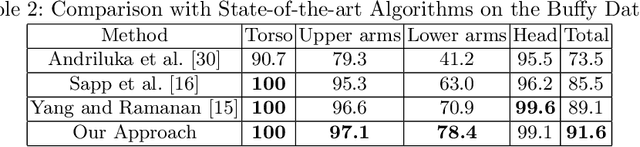

Human pose estimation (HPE) is a fundamental technique underlying several vision tasks, such as image parsing, action recognition, and clothing retrieval, and it has been extensively investigated in recent years. It is well recognized that clothing attributes are useful for accurate and reliable pose estimation and should be exploited properly. Most previous approaches, however, require manual annotation of the clothing attributes and are therefore very costly. In this paper, we propose and explore a *latent* clothing attribute approach for HPE. Unlike previous approaches, ours models the clothing attributes as latent variables and thus requires no explicit labeling of the clothing attributes. Inference of the latent variables is accomplished within the framework of latent structured support vector machines (LSSVM). We employ an *alternating direction* strategy to train the LSSVM model: in each iteration, one kind of variable (e.g., human pose or clothing attribute) is fixed and the others are optimized. Extensive experiments on two real-world benchmarks show that the proposed approach achieves state-of-the-art performance.
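The alternating idea in the abstract (fix one kind of variable, optimize the other) can be illustrated with a toy sketch. This is not the paper's model or training procedure: the additive score, the tables `pose_score`, `attr_score`, and `pair_score`, and the function `alternating_maximize` are all hypothetical, chosen only to show how alternating updates over a pose label `y` and a latent attribute label `h` behave.

```python
def argmax(values):
    """Index of the largest value (first index wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def alternating_maximize(pose_score, attr_score, pair_score, h_init=0, max_iters=20):
    """Coordinate ascent on a toy joint score
    S(y, h) = pose_score[y] + attr_score[h] + pair_score[y][h].

    y stands in for a pose label and h for a latent clothing attribute;
    both are small discrete sets here (an assumption for illustration).
    """
    n_pose, n_attr = len(pose_score), len(attr_score)
    h = h_init
    # Best pose for the initial attribute guess.
    y = argmax([pose_score[i] + pair_score[i][h] for i in range(n_pose)])
    for _ in range(max_iters):
        # Fix the pose, optimize the latent attribute.
        h_new = argmax([attr_score[j] + pair_score[y][j] for j in range(n_attr)])
        # Fix the attribute, optimize the pose.
        y_new = argmax([pose_score[i] + pair_score[i][h_new] for i in range(n_pose)])
        if (y_new, h_new) == (y, h):
            break  # neither block changed: a coordinate-wise optimum
        y, h = y_new, h_new
    return y, h


# Hypothetical scores: 3 pose labels, 2 attribute labels.
pose_score = [0.2, 1.0, -0.5]
attr_score = [0.0, 0.3]
pair_score = [[0.5, -1.0], [-0.4, 0.8], [1.5, 0.0]]  # indexed as [y][h]

from_h0 = alternating_maximize(pose_score, attr_score, pair_score, h_init=0)
from_h1 = alternating_maximize(pose_score, attr_score, pair_score, h_init=1)
print(from_h0, from_h1)
```

With these made-up numbers, the two initializations settle on different solutions: starting from `h_init=0` stops at a coordinate-wise optimum with a lower joint score than the one reached from `h_init=1`. Alternating schemes of this kind never decrease the score, but they can depend on initialization.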
