A Framework of Combining Short-Term Spatial/Frequency Feature Extraction and Long-Term IndRNN for Activity Recognition


Nov 06, 2020

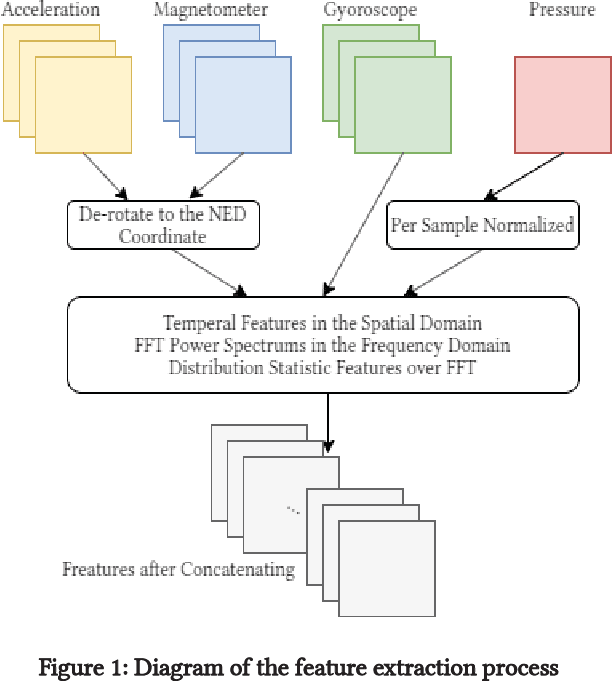

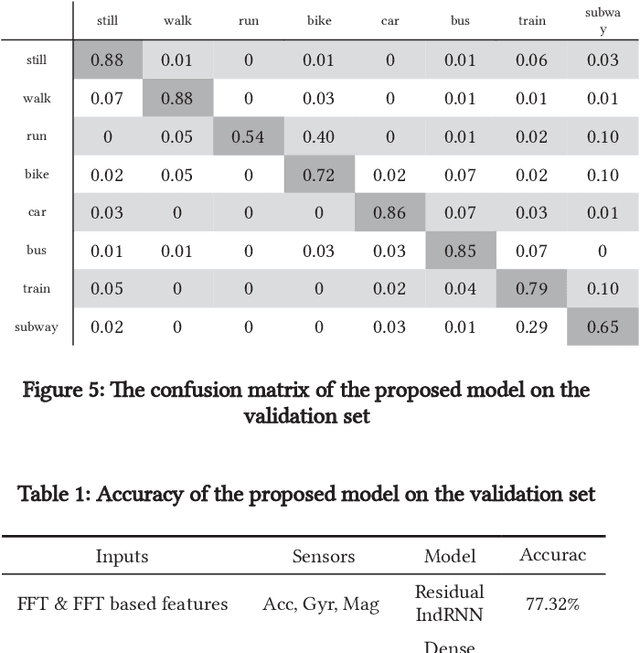

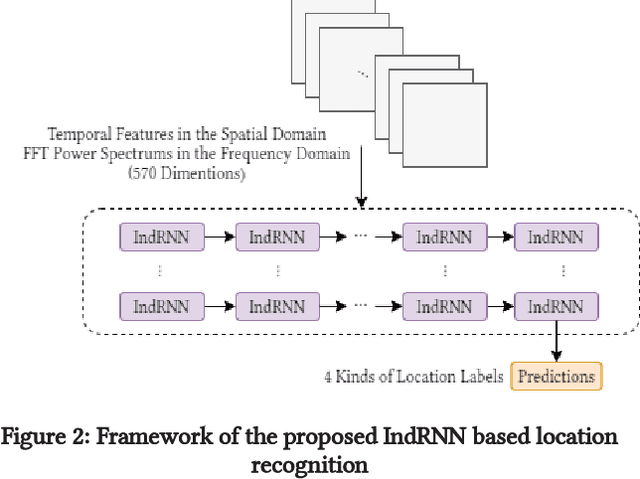

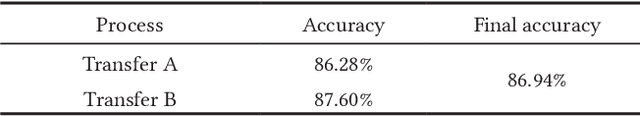

Human activity recognition based on smartphone sensors is attracting increasing interest as smartphones become ubiquitous. Because smartphone sensors have high sampling rates, this is a long-range temporal recognition problem. It is made harder by large intra-class distances (for example, the smartphone may be carried at different locations, such as in a bag or on the body) and small inter-class distances (for example, taking a train versus taking the subway). To address this problem, we propose a new framework that combines short-term spatial/frequency feature extraction with a long-term Independently Recurrent Neural Network (IndRNN) for activity recognition. Considering the periodic characteristics of the sensor data, short-term temporal features are first extracted in the spatial and frequency domains. The IndRNN, which is able to capture long-term patterns, is then used to obtain long-term features for classification. Because the sensor data differ considerably when the smartphone is carried at different locations, a group-based location recognition step is first developed to pinpoint the location of the smartphone. The Sussex-Huawei Locomotion (SHL) dataset from the SHL Challenge is used for evaluation. An earlier version of the proposed method won second place in the SHL Challenge 2020 (first place when approaches that fuse multiple models are not considered). The proposed method is further improved in this paper and achieves 80.72% accuracy, better than existing methods that use a single model.
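The two-stage idea described in the abstract can be illustrated with a minimal sketch in plain Python. This is not the authors' implementation: the helper names (`frame_signal`, `dft_magnitude`, `short_term_features`, `indrnn_forward`), the window length, and the toy weights are all hypothetical. Using the raw samples as the spatial-domain input and DFT magnitudes as the frequency-domain input is an assumption made only for illustration. The recurrent step follows the standard IndRNN form, h_t = relu(W x_t + u * h_{t-1} + b), in which each hidden unit has its own scalar recurrent weight.

```python
import cmath
import math


def frame_signal(signal, window):
    """Split a 1-D signal into non-overlapping short-term windows."""
    n_frames = len(signal) // window
    return [signal[i * window:(i + 1) * window] for i in range(n_frames)]


def dft_magnitude(frame):
    """Normalized DFT magnitudes for the non-negative frequency bins."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                  for t, x in enumerate(frame))
        mags.append(abs(acc) / n)
    return mags


def short_term_features(frame):
    """Concatenate raw samples with frequency-domain magnitudes (assumed design)."""
    return list(frame) + dft_magnitude(frame)


def indrnn_forward(inputs, W, u, b):
    """Run the IndRNN recurrence over a sequence and return the last hidden state.

    Each hidden unit j only sees its own previous state through u[j].
    """
    h = [0.0] * len(u)
    for x in inputs:
        h = [
            max(0.0, sum(w * xi for w, xi in zip(W[j], x)) + u[j] * h[j] + b[j])
            for j in range(len(u))
        ]
    return h


# Toy periodic signal: 2 cycles per 8-sample window, 4 windows in total.
signal = [math.sin(2 * math.pi * 2 * t / 8) for t in range(32)]
frames = frame_signal(signal, 8)
features = [short_term_features(f) for f in frames]

# Hypothetical fixed weights: 3 hidden units, 13 input features per window.
W = [[0.01 * (j + 1)] * len(features[0]) for j in range(3)]
u = [0.9, 0.5, 1.0]
b = [0.0, 0.0, 0.0]
final_state = indrnn_forward(features, W, u, b)
```

In a full model, the final hidden state (or the sequence of states) would feed a classifier, and the weights would be learned rather than fixed. The sketch only shows how short windows are summarized first and how the recurrent stage then aggregates across windows.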
