A Flexible Generative Framework for Graph-based Semi-supervised Learning


May 26, 2019

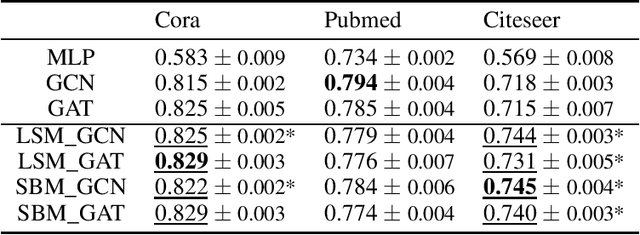

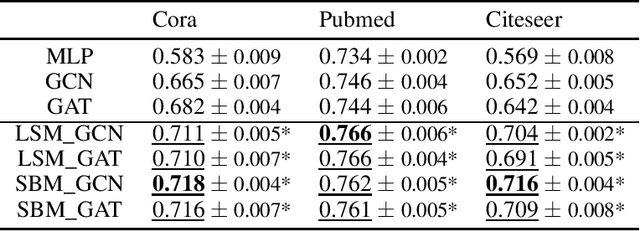

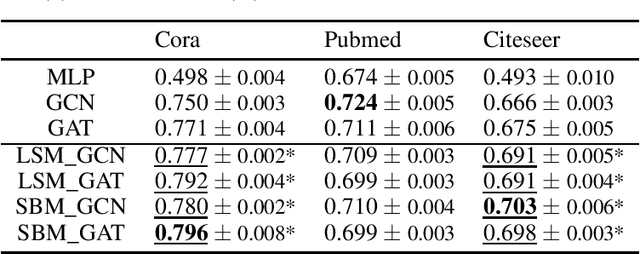

We consider a family of problems concerned with making predictions for a majority of unlabeled, graph-structured data samples based on a small proportion of labeled examples. Relational information among the data samples, often encoded in a graph or network structure, has been shown to be helpful for these semi-supervised learning tasks. However, conventional graph-based regularization methods and recent graph neural networks do not fully leverage the interrelations between the features, the graph, and the labels. We propose a flexible generative framework for graph-based semi-supervised learning that models the joint distribution of the node features, the labels, and the graph structure. Borrowing insights from random graph models in the network science literature, this joint distribution can be instantiated using various distribution families. To infer the missing labels, we exploit recent advances in scalable variational inference techniques to approximate the Bayesian posterior. We conduct thorough experiments on benchmark datasets for graph-based semi-supervised learning. Results show that the proposed methods outperform state-of-the-art models under most settings.
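
As a rough illustration of the variational step described above, the following sketch writes out a generic evidence lower bound for the missing labels. The notation is an assumption made here and is not taken from the abstract: $X$ denotes the node features, $G$ the graph, $Y_{\mathrm{obs}}$ and $Y_{\mathrm{miss}}$ the observed and missing labels, $p_\theta$ the generative model, and $q_\phi$ the approximate posterior. Conditioning on $X$ and factorizing the joint into a label model and a graph model is one possible instantiation, not necessarily the one the authors use.

```latex
% One possible factorization of the generative model (an assumption):
%   p_\theta(Y, G \mid X) = p_\theta(Y \mid X)\, p_\theta(G \mid X, Y),
%   with Y = (Y_{\mathrm{obs}}, Y_{\mathrm{miss}}).
%
% Standard evidence lower bound, obtained from Jensen's inequality:
\begin{aligned}
\log p_\theta(Y_{\mathrm{obs}}, G \mid X)
  &= \log \sum_{Y_{\mathrm{miss}}} p_\theta(Y_{\mathrm{obs}}, Y_{\mathrm{miss}}, G \mid X) \\
  &\ge \mathbb{E}_{q_\phi(Y_{\mathrm{miss}} \mid X, Y_{\mathrm{obs}}, G)}
     \big[ \log p_\theta(Y_{\mathrm{obs}}, Y_{\mathrm{miss}}, G \mid X)
         - \log q_\phi(Y_{\mathrm{miss}} \mid X, Y_{\mathrm{obs}}, G) \big].
\end{aligned}
```

The bound is tight when $q_\phi$ equals the true posterior $p_\theta(Y_{\mathrm{miss}} \mid X, Y_{\mathrm{obs}}, G)$, so maximizing it over $\phi$ yields an approximation to the Bayesian posterior over the missing labels.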
