A Deep Multi-Agent Reinforcement Learning Approach to Autonomous Separation Assurance

Mar 17, 2020

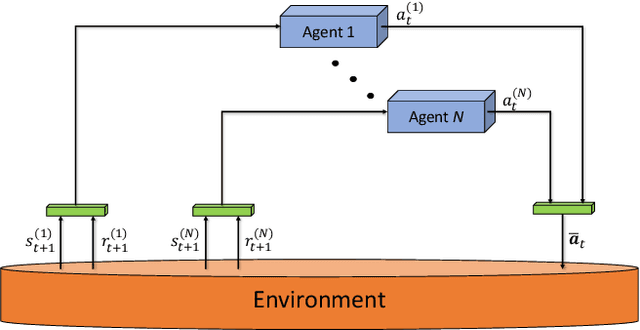

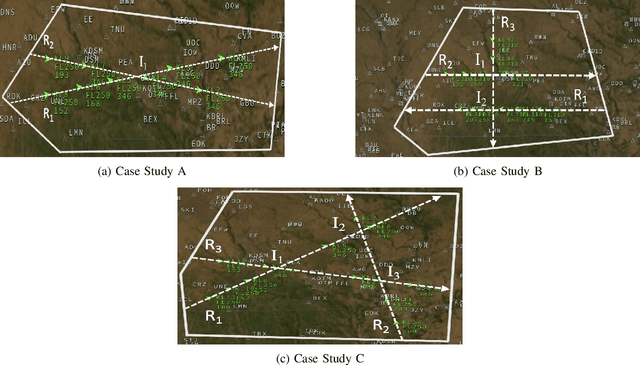

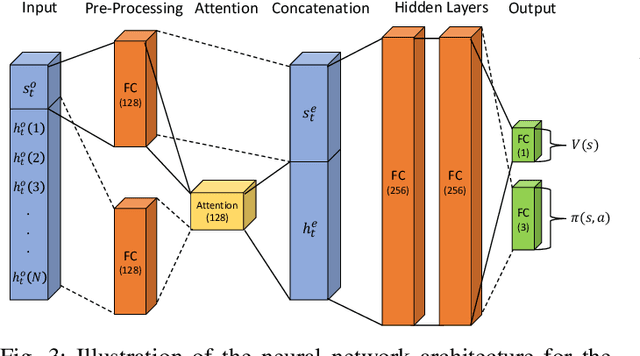

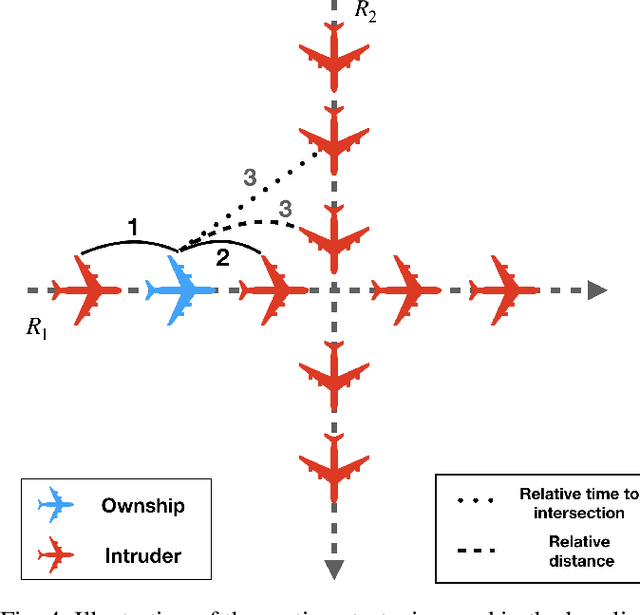

A novel deep multi-agent reinforcement learning framework is proposed to identify and resolve conflicts among a variable number of aircraft in a high-density, stochastic, and dynamic sector of en route airspace. Sector capacity is currently limited by the cognitive limitations of human air traffic controllers. To scale to high-density airspace, we investigate the feasibility of a new concept (autonomous separation assurance) and a new approach (multi-agent reinforcement learning) for pushing sector capacity beyond those limitations. Instead of relying on a centralized sector air traffic controller, we propose using distributed vehicle autonomy to ensure separation. The framework uses an actor-critic model, Proximal Policy Optimization (PPO), which we customize to incorporate an attention network. The attention network encodes the information from a variable number of intruder aircraft into a fixed-length vector and lets the agents learn which intruders' information is critical to achieving optimal performance. This gives the agents scalable, efficient access to information about a varying number of aircraft in the sector, so that they can achieve high traffic throughput under uncertainty. The agents are trained with a centralized learning, decentralized execution scheme in which a single neural network is learned and shared by all agents in the environment. To validate the framework, we designed three challenging case studies in the BlueSky air traffic control environment. Numerical results show that the proposed framework significantly reduces offline training time without sacrificing performance.
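
To make concrete the idea of encoding a variable number of intruders into a fixed-length vector, here is a minimal sketch of attention pooling. It is an illustration only and not the paper's architecture: the abstract does not specify the form of attention, so scaled dot-product attention is assumed, and every name and dimension (`attention_pool`, `STATE_DIM`, `KEY_DIM`, `VALUE_DIM`, the random weight matrices) is hypothetical. In a trained agent the projection matrices would be learned jointly with the PPO actor and critic.

```python
import math
import random


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def project(vec, matrix):
    """Multiply a row vector by a matrix stored as a list of rows."""
    return [dot(vec, column) for column in zip(*matrix)]


def softmax(scores):
    """Numerically stable softmax over a non-empty list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(own_state, intruder_states, w_q, w_k, w_v):
    """Summarise any number of intruder states as one fixed-length vector.

    Assumption: scaled dot-product attention, with the ownship state as the
    query and each intruder state supplying a key and a value.
    """
    value_dim = len(w_v[0])
    if not intruder_states:
        # No intruders in the sector: return an all-zero context vector.
        return [0.0] * value_dim, []
    query = project(own_state, w_q)
    keys = [project(state, w_k) for state in intruder_states]
    values = [project(state, w_v) for state in intruder_states]
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, key) / scale for key in keys])
    # Weighted sum of the value vectors; length depends only on value_dim.
    context = [
        sum(w * value[j] for w, value in zip(weights, values))
        for j in range(value_dim)
    ]
    return context, weights


def random_matrix(rows, cols, rng):
    """Stand-in for learned weights (hypothetical, randomly initialised)."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
STATE_DIM, KEY_DIM, VALUE_DIM = 5, 8, 16  # illustrative sizes only
w_q = random_matrix(STATE_DIM, KEY_DIM, rng)
w_k = random_matrix(STATE_DIM, KEY_DIM, rng)
w_v = random_matrix(STATE_DIM, VALUE_DIM, rng)
own = [rng.gauss(0.0, 1.0) for _ in range(STATE_DIM)]

for n_intruders in (0, 3, 10):
    intruders = [
        [rng.gauss(0.0, 1.0) for _ in range(STATE_DIM)]
        for _ in range(n_intruders)
    ]
    context, weights = attention_pool(own, intruders, w_q, w_k, w_v)
    print(n_intruders, len(context), len(weights))
```

Whatever the number of intruders, the context vector has `VALUE_DIM` entries, so it can feed a policy network with a fixed input size. The attention weights sum to one and indicate how much each intruder contributes to that summary.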
