A dataset for multi-sensor drone detection

Nov 02, 2021

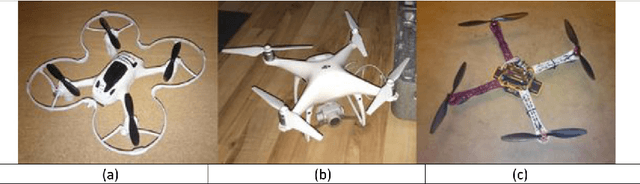

The use of small, remotely controlled unmanned aerial vehicles (UAVs), or drones, has increased in recent years. This has been paralleled by episodes of misuse, which pose an evident threat to the safety of people and facilities. As a result, UAV detection has emerged as a research topic. Most studies on drone detection fail to specify the type of acquisition device, the drone type, the detection range, or the dataset. There is also a lack of proper UAV detection studies employing thermal infrared cameras, despite their success with other targets. In addition, we have not found any previous study that addresses the detection task as a function of distance to the target. Sensor fusion is likewise indicated as an open research issue, yet research in this direction is scarce. To address these issues and allow fundamental studies with a common public benchmark, we contribute an annotated multi-sensor database for drone detection that includes infrared and visible videos and audio files. The database includes three drones of different sizes, as well as other flying objects that can be mistakenly detected as drones, such as birds, airplanes, and helicopters. In addition to using several different sensors, the number of classes is higher than in previous studies. To allow studies as a function of the sensor-to-target distance, the dataset is divided into three categories (Close, Medium, Distant) according to the industry-standard Detect, Recognize and Identify (DRI) requirements, which build on the Johnson criteria. Because regulations require the drones to be flown within visual range, the largest sensor-to-target distance for a drone is 200 m, and all acquisitions are made in daylight. The data was obtained at three airports in Sweden: Halmstad Airport (IATA code: HAD/ICAO code: ESMT), Gothenburg City Airport (GSE/ESGP) and Malmö Airport (MMX/ESMS).
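The distance categories can be illustrated with a small sketch. DRI-style requirements are usually expressed as a number of pixels across the target, so one plausible way to bin a sample is to estimate how many pixels the target spans at a given distance and compare that with two thresholds. The abstract does not state the thresholds or the sensor parameters, so the function names, the field of view, the resolution, and the pixel thresholds below are all hypothetical values chosen only for illustration.

```python
import math


def pixels_on_target(target_size_m, distance_m, hfov_deg, h_pixels):
    """Estimate how many horizontal pixels a target of the given width spans.

    Assumes a simple pinhole model with uniform angular resolution.
    """
    # Angle covered by a single pixel (instantaneous field of view).
    ifov_rad = math.radians(hfov_deg) / h_pixels
    # Angle subtended by the target at the given distance.
    target_angle_rad = 2.0 * math.atan(target_size_m / (2.0 * distance_m))
    return target_angle_rad / ifov_rad


def distance_bin(pixels, close_min_px, medium_min_px):
    """Map a pixel count to Close / Medium / Distant.

    close_min_px and medium_min_px are caller-supplied thresholds;
    the abstract does not specify them.
    """
    if pixels >= close_min_px:
        return "Close"
    if pixels >= medium_min_px:
        return "Medium"
    return "Distant"


if __name__ == "__main__":
    # Hypothetical sensor and target: 24 degree field of view, 320 pixels wide,
    # 0.5 m wide drone. Thresholds (15 px, 5 px) are illustrative assumptions.
    for distance_m in (20, 50, 100, 200):
        px = pixels_on_target(0.5, distance_m, hfov_deg=24.0, h_pixels=320)
        label = distance_bin(px, close_min_px=15, medium_min_px=5)
        print(f"{distance_m:>4} m: {px:5.1f} px -> {label}")
```

Keeping the thresholds as parameters makes it straightforward to substitute the values actually used by the dataset once they are taken from the full paper.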
